Uh oh!

There was an error while loading.Please reload this page.

- Notifications

You must be signed in to change notification settings - Fork148

A Node.js PostgreSQL client with runtime and build time type safety, and composable SQL.

License

gajus/slonik

Folders and files

| Name | Name | Last commit message | Last commit date | |

|---|---|---|---|---|

Repository files navigation

Abattle-tested Node.js PostgreSQL client with strict types, detailed logging and assertions.

(The above GIF shows Slonik producingquery logs. Slonik produces logs usingRoarr. Logs include stack trace of the actual query invocation location and values used to execute the query.)

If you value my work and want to see Slonik andmany other of my Open-Source projects to be continuously improved, then please consider becoming a patron:

- Promotes writing raw SQL.

- Discourages ad-hoc dynamic generation of SQL.

Read:Stop using Knex.js

Note: Using this project does not require TypeScript. It is a regular ES6 module. Ignore the type definitions used in the documentation if you do not use a type system.

- Runtime validation.

- Assertions and type safety.

- Safe connection handling.

- Safe transaction handling.

- Safe value interpolation.

- Transaction nesting.

- Transaction events.

- Transaction retrying.

- Query retrying.

- Detailedlogging.

- Asynchronous stack trace resolution.

- Middlewares.

- Mapped errors.

- ESLint plugin.

- Slonik

- Sponsors

- Principles

- Features

- Contents

- About Slonik

- Documentation

- Usage

- How are they different?

- Type parsers

- Interceptors

- Recipes

- Runtime validation

sqltag- Value placeholders

- Query building

- Query methods

- Utilities

- Error handling

- Original

node-postgreserror - Handling

BackendTerminatedError - Handling

CheckIntegrityConstraintViolationError - Handling

ConnectionError - Handling

DataIntegrityError - Handling

ForeignKeyIntegrityConstraintViolationError - Handling

NotFoundError - Handling

NotNullIntegrityConstraintViolationError - Handling

StatementCancelledError - Handling

StatementTimeoutError - Handling

UniqueIntegrityConstraintViolationError - Handling

TupleMovedToAnotherPartitionError

- Original

- Migrations

- Types

- Debugging

- Syntax Highlighting

- Development

Slonik began as a collection of utilities designed for working withnode-postgres. It continues to usenode-postgres driver as it provides a robust foundation for interacting with PostgreSQL. However, what once was a collection of utilities has since grown into a framework that abstracts repeating code patterns, protects against unsafe connection handling and value interpolation, and provides a rich debugging experience.

Slonik has beenbattle-tested with large data volumes and queries ranging from simple CRUD operations to data-warehousing needs.

"Słonik" is a Polish diminutive of"słoń," meaning “little elephant” or “baby elephant.” The word"słoń" itself comes from Proto-Slavic *slonъ, which was likely borrowed from a Germanic language and may ultimately trace back to Latin.

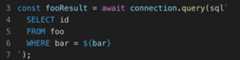

Among the primary reasons for developing Slonik, was the motivation to reduce the repeating code patterns and add a level of type safety. This is primarily achieved through the methods such asone,many, etc. But what is the issue? It is best illustrated with an example.

Suppose the requirement is to write a method that retrieves a resource ID given values defining (what we assume to be) a unique constraint. If we did not have the aforementioned helper methods available, then it would need to be written as:

import{sql,typeDatabaseConnection}from'slonik';typeDatabaseRecordIdType=number;constgetFooIdByBar=async(connection:DatabaseConnection,bar:string):Promise<DatabaseRecordIdType>=>{constfooResult=awaitconnection.query(sql.typeAlias('id')` SELECT id FROM foo WHERE bar =${bar} `);if(fooResult.rowCount===0){thrownewError('Resource not found.');}if(fooResult.rowCount>1){thrownewError('Data integrity constraint violation.');}returnfooResult[0].id;};

oneFirst method abstracts all of the above logic into:

constgetFooIdByBar=(connection:DatabaseConnection,bar:string):Promise<DatabaseRecordIdType>=>{returnconnection.oneFirst(sql.typeAlias('id')` SELECT id FROM foo WHERE bar =${bar} `);};

oneFirst throws:

NotFoundErrorif query returns no rowsDataIntegrityErrorif query returns multiple rowsDataIntegrityErrorif query returns multiple columns

In the absence of helper methods, the overhead of repeating code becomes particularly visible when writing routines where multiple queries depend on the proceeding query results. Using methods with inbuilt assertions ensures that in case of an error, the error points to the source of the problem. In contrast, unless assertions for all possible outcomes are typed out as in the previous example, the unexpected result of the query will be fed to the next operation. If you are lucky, the next operation will simply break; if you are unlucky, you are risking data corruption and hard-to-locate bugs.

Furthermore, using methods that guarantee the shape of the results allows us to leverage static type checking and catch some of the errors even before executing the code, e.g.

constfooId=awaitconnection.many(sql.typeAlias('id')` SELECT id FROM foo WHERE bar =${bar}`);awaitconnection.query(sql.typeAlias('void')` DELETE FROM baz WHERE foo_id =${fooId}`);

Static type check of the above example will produce a warning as thefooId is guaranteed to be an array and binding of the last query is expecting a primitive value.

Slonik only allows to check out a connection for the duration of the promise routine supplied to thepool#connect() method.

The primary reason for implementingonly this connection pooling method is because the alternative is inherently unsafe, e.g.

// This is not valid Slonik APIconstmain=async()=>{constconnection=awaitpool.connect();awaitconnection.query(sql.typeAlias('foo')`SELECT foo()`);awaitconnection.release();};

In this example, ifSELECT foo() produces an error, then connection is never released, i.e. the connection hangs indefinitely.

A fix to the above is to ensure thatconnection#release() is always called, i.e.

// This is not valid Slonik APIconstmain=async()=>{constconnection=awaitpool.connect();letlastExecutionResult;try{lastExecutionResult=awaitconnection.query(sql.typeAlias('foo')`SELECT foo()`);}finally{awaitconnection.release();}returnlastExecutionResult;};

Slonik abstracts the latter pattern intopool#connect() method.

constmain=()=>{returnpool.connect((connection)=>{returnconnection.query(sql.typeAlias('foo')`SELECT foo()`);});};

Using this pattern, we guarantee that connection is always released as soon as theconnect() routine resolves or is rejected.

After the connection is released, Slonik resets the connection state. This is to prevent connection state from leaking between queries.

The default behaviour is to executeDISCARD ALL command. This behaviour can be adjusted by configuringresetConnection routine, e.g.

import{createPool,sql,}from'slonik';import{createPgDriverFactory,}from'@slonik/pg-driver';constpool=createPool('postgres://',{driverFactory:createPgDriverFactory(),resetConnection:async(connection)=>{awaitconnection.query('DISCARD ALL');}});

Note

Resetting a connection is a heavy operation. Depending on the application requirements, it may make sense to disable connection reset, e.g.

import{createPool,}from'slonik';import{createPgDriverFactory,}from'@slonik/pg-driver';constpool=createPool('postgres://',{driverFactory:createPgDriverFactory(),resetConnection:async()=>{}});

Just like in theunsafe connection handling example, Slonik only allows to create a transaction for the duration of the promise routine supplied to theconnection#transaction() method.

connection.transaction(async(transactionConnection)=>{awaittransactionConnection.query(sql.typeAlias('void')`INSERT INTO foo (bar) VALUES ('baz')`);awaittransactionConnection.query(sql.typeAlias('void')`INSERT INTO qux (quux) VALUES ('quuz')`);});

This pattern ensures that the transaction is either committed or aborted the moment the promise is either resolved or rejected.

Note

If you receive an errorUnexpectedForeignConnectionError, then you are trying to execute a query using a connection that is not associated with the transaction. This error is thrown to prevent accidental unsafe transaction handling, e.g.

pool.transaction(async(transactionConnection)=>{awaitpool.query(sql.typeAlias('void')`INSERT INTO foo (bar) VALUES ('baz')`);});

In this example, the query is executed using theconnection that is not associated with the transaction. This is unsafe because the query is not part of the transaction and will not be rolled back if the transaction is aborted.This behaviour can be disabled by settingdangerouslyAllowForeignConnections totrue in theClientConfiguration.

SQL injections are one of the most well known attack vectors. Some of thebiggest data leaks were the consequence of improper user-input handling. In general, SQL injections are easily preventable by using parameterization and by restricting database permissions, e.g.

// This is not valid Slonik APIconnection.query('SELECT $1',[userInput]);

In this example, the query text (SELECT $1) and parameters (userInput) are passed separately to the PostgreSQL server where the parameters are safely substituted into the query. This is a safe way to execute a query using user-input.

The vulnerabilities appear when developers cut corners or when they do not know about parameterization, i.e. there is a risk that someone will instead write:

// This is not valid Slonik APIconnection.query('SELECT \''+userInput+'\'');

As evident by the history of the data leaks, this happens more often than anyone would like to admit. This security vulnerability is especially a significant risk in Node.js community, where a predominant number of developers are coming from frontend and have not had training working with RDBMSes. Therefore, one of the key selling points of Slonik is that it adds multiple layers of protection to prevent unsafe handling of user input.

To begin with, Slonik does not allow running plain-text queries.

// This is not valid Slonik APIconnection.query('SELECT 1');

The above invocation would produce an error:

TypeError: Query must be constructed using

sqltagged template literal.

This means that the only way to run a query is by constructing it usingsql tagged template literal, e.g.

connection.query(sql.unsafe`SELECT 1`);

To add a parameter to the query, user must usetemplate literal placeholders, e.g.

connection.query(sql.unsafe`SELECT${userInput}`);

Slonik takes over from here and constructs a query with value bindings, and sends the resulting query text and parameters to PostgreSQL. There is no other way of passing parameters to the query – this adds a strong layer of protection against accidental unsafe user input handling due to limited knowledge of the SQL client API.

As Slonik restricts user's ability to generate and execute dynamic SQL, it provides helper functions used to generate fragments of the query and the corresponding value bindings, e.g.sql.identifier,sql.join andsql.unnest. These methods generate tokens that the query executor interprets to construct a safe query, e.g.

connection.query(sql.unsafe` SELECT${sql.identifier(['foo','a'])} FROM ( VALUES (${sql.join([sql.join(['a1','b1','c1'],sql.fragment`, `),sql.join(['a2','b2','c2'],sql.fragment`, `)],sql.fragment`), (`)} ) ) foo(a, b, c) WHERE foo.b IN (${sql.join(['c1','a2'],sql.fragment`, `)})`);

This (contrived) example generates a query equivalent to:

SELECT"foo"."a"FROM (VALUES ($1, $2, $3), ($4, $5, $6)) foo(a, b, c)WHEREfoo.bIN ($7, $8)

This query is executed with the parameters provided by the user.

To sum up, Slonik is designed to prevent accidental creation of queries vulnerable to SQL injections.

Slonik client is configured using a custom connection URI (DSN).

postgresql://[user[:password]@][host[:port]][/database name][?name=value[&...]]Supported parameters:

| Name | Meaning | Default |

|---|---|---|

application_name | application_name | |

options | options | |

sslcert | The location of thecertificate. | - |

sslkey | The location of thecertificate. | - |

sslmode | sslmode (supported values:disable,no-verify,require) | disable |

sslrootcert | The location of the root certificate file. |

Note that unless listed above, otherlibpq parameters are not supported.

Examples of valid DSNs:

postgresql://postgresql://localhostpostgresql://localhost:5432postgresql://localhost/foopostgresql://foo@localhostpostgresql://foo:bar@localhostpostgresql://foo@localhost/bar?application_name=bazUnix-domain socket connection is chosen if the host part is either empty or looks like an absolute path name.

postgresql:///dbname?host=/var/lib/postgresqlpostgresql://%2Fvar%2Flib%2Fpostgresql/dbnameOther configurations are available through theclientConfiguration parameter.

UsecreatePool to create a connection pool, e.g.

import{createPool,}from'slonik';import{createPgDriverFactory,}from'@slonik/pg-driver';constpool=awaitcreatePool('postgres://',{driverFactory:createPgDriverFactory(),});

Note: If you are new to Slonik, then you should readIntegrating Slonik with Express.js.

Instance of Slonik connection pool can be then used to create a new connection, e.g.

pool.connect(async(connection)=>{awaitconnection.query(sql.typeAlias('id')`SELECT 1 AS id`);});

The connection will be kept alive until the promise resolves (the result of the method supplied toconnect()).

Refer toquery method documentation to learn about the connection methods.

If you do not require having a persistent connection to the same backend, then you can directly usepool to run queries, e.g.

pool.query(sql.typeAlias('id')`SELECT 1 AS id`);

Beware that in the latter example, the connection picked to execute the query is a random connection from the connection pool, i.e. using the latter method (without explicitconnect()) does not guarantee that multiple queries will refer to the same backend.

Usepool.end() to end idle connections and prevent creation of new connections.

The result ofpool.end() is a promise that is resolved when all connections are ended.

import{createPool,sql,}from'slonik';import{createPgDriverFactory,}from'@slonik/pg-driver';constpool=awaitcreatePool('postgres://',{driverFactory:createPgDriverFactory(),});constmain=async()=>{awaitpool.query(sql.typeAlias('id')` SELECT 1 AS id `);awaitpool.end();};main();

Note:pool.end() does not terminate active connections/ transactions.

Usepool.state() to find out if pool is alive and how many connections are active and idle, and how many clients are waiting for a connection.

import{createPool,sql,}from'slonik';import{createPgDriverFactory,}from'@slonik/pg-driver';constpool=awaitcreatePool('postgres://',{driverFactory:createPgDriverFactory(),});constmain=async()=>{pool.state();// {// acquiredConnections: 0,// idleConnections: 0,// pendingDestroyConnections: 0,// pendingReleaseConnections: 0,// state: 'ACTIVE',// waitingClients: 0,// }awaitpool.connect(()=>{pool.state();// {// acquiredConnections: 1,// idleConnections: 0,// pendingDestroyConnections: 0,// pendingReleaseConnections: 0,// state: 'ACTIVE',// waitingClients: 0,// }});pool.state();// {// acquiredConnections: 0,// idleConnections: 1,// pendingDestroyConnections: 0,// pendingReleaseConnections: 0,// state: 'ACTIVE',// waitingClients: 0,// }awaitpool.end();pool.state();// {// acquiredConnections: 0,// idleConnections: 0,// pendingDestroyConnections: 0,// pendingReleaseConnections: 0,// state: 'ENDED',// waitingClients: 0,// }};main();

Note:pool.end() does not terminate active connections/ transactions.

/** *@param connectionUri PostgreSQL [Connection URI](https://www.postgresql.org/docs/current/libpq-connect.html#LIBPQ-CONNSTRING). */createPool(connectionUri: string,clientConfiguration:ClientConfiguration):DatabasePool;/** *@property captureStackTrace Dictates whether to capture stack trace before executing query. Middlewares access stack trace through query execution context. (Default: false) *@property connectionRetryLimit Number of times to retry establishing a new connection. (Default: 3) *@property connectionTimeout Timeout (in milliseconds) after which an error is raised if connection cannot be established. (Default: 5000) *@property dangerouslyAllowForeignConnections Allow using connections that are not associated with the transaction. (Default: false) *@property driverFactory Overrides the default DriverFactory. (Default: "pg" driver factory) *@property gracefulTerminationTimeout Timeout (in milliseconds) that kicks in after a connection with an active query is requested to end. This is the amount of time that is allowed for query to complete before terminating it. (Default: 5000) *@property idleInTransactionSessionTimeout Timeout (in milliseconds) after which idle clients are closed. Use 'DISABLE_TIMEOUT' constant to disable the timeout. (Default: 60000) *@property idleTimeout Timeout (in milliseconds) after which idle clients are closed. Use 'DISABLE_TIMEOUT' constant to disable the timeout. (Default: 5000) *@property interceptors An array of [Slonik interceptors](https://github.com/gajus/slonik#interceptors). *@property maximumConnectionAge The maximum age of a connection allowed in the pool. After this age, the connection will be destroyed. (Default: 30 minutes) *@property maximumPoolSize Do not allow more than this many connections. (Default: 10) *@property minimumPoolSize Ensure that at least this many connections are available in the pool. (Default: 0) *@property queryRetryLimit Number of times a query failing with Transaction Rollback class error, that doesn't belong to a transaction, is retried. (Default: 5) *@property ssl [tls.connect options](https://nodejs.org/api/tls.html#tlsconnectoptions-callback) *@property statementTimeout Timeout (in milliseconds) after which database is instructed to abort the query. Use 'DISABLE_TIMEOUT' constant to disable the timeout. (Default: 60000) *@property transactionRetryLimit Number of times a transaction failing with Transaction Rollback class error is retried. (Default: 5) *@property typeParsers An array of [Slonik type parsers](https://github.com/gajus/slonik#type-parsers). */typeClientConfiguration={captureStackTrace?:boolean,connectionRetryLimit?:number,connectionTimeout?:number|'DISABLE_TIMEOUT',driverFactory?:DriverFactory,gracefulTerminationTimeout?:number,idleInTransactionSessionTimeout?:number|'DISABLE_TIMEOUT',idleTimeout?:number|'DISABLE_TIMEOUT',interceptors?:Interceptor[],maximumConnectionAge?:number,maximumPoolSize?:number,minimumPoolSize?:number,queryRetryLimit?:number,ssl?:Parameters<tls.connect>[0],statementTimeout?:number|'DISABLE_TIMEOUT',transactionRetryLimit?:number,typeParsers?:TypeParser[],};

Example:

import{createPool}from'slonik';import{createPgDriverFactory,}from'@slonik/pg-driver';constpool=awaitcreatePool('postgres://',{driverFactory:createPgDriverFactory(),});awaitpool.query(sql.typeAlias('id')`SELECT 1 AS id`);

None.

Check outslonik-interceptor-preset for an opinionated collection of interceptors.

These type parsers are enabled by default:

| Type name | Implementation |

|---|---|

date | Produces a literal date as a string (format: YYYY-MM-DD). |

int8 | Produces an integer. |

interval | Produces interval in seconds (integer). |

numeric | Produces a float. |

timestamp | Produces a unix timestamp (in milliseconds). |

timestamptz | Produces a unix timestamp (in milliseconds). |

To disable the default type parsers, pass an empty array, e.g.

createPool('postgres://',{typeParsers:[]});

You can create default type parser collection usingcreateTypeParserPreset, e.g.

import{createTypeParserPreset}from'slonik';import{createPgDriverFactory,}from'@slonik/pg-driver';createPool('postgres://',{driverFactory:createPgDriverFactory(),typeParsers:[ ...createTypeParserPreset()]});

There are 4 types of configurable timeouts:

| Configuration | Description | Default |

|---|---|---|

connectionTimeout | Timeout (in milliseconds) after which an error is raised if connection cannot be established. | 5000 |

idleInTransactionSessionTimeout | Timeout (in milliseconds) after which idle clients are closed. Use 'DISABLE_TIMEOUT' constant to disable the timeout. | 60000 |

idleTimeout | Timeout (in milliseconds) after which idle clients are closed. Use 'DISABLE_TIMEOUT' constant to disable the timeout. | 5000 |

statementTimeout | Timeout (in milliseconds) after which database is instructed to abort the query. Use 'DISABLE_TIMEOUT' constant to disable the timeout. | 60000 |

Slonik sets aggressive timeouts by default. These timeouts are designed to provide safe interface to the database. These timeouts might not work for all programs. If your program has long running statements, consider adjusting timeouts just for those statements instead of changing the defaults.

pg-native is not officially supported by Slonik.

Slonik only allows to check out a connection for the duration of the promise routine supplied to thepool#connect() method.

import{createPool,}from'slonik';import{createPgDriverFactory,}from'@slonik/pg-driver';constpool=awaitcreatePool('postgres://localhost',{driverFactory:createPgDriverFactory(),});constresult=awaitpool.connect(async(connection)=>{awaitconnection.query(sql.typeAlias('id')`SELECT 1 AS id`);awaitconnection.query(sql.typeAlias('id')`SELECT 2 AS id`);return'foo';});result;// 'foo'

Connection is released back to the pool after the promise produced by the function supplied toconnect() method is either resolved or rejected.

Read:Protecting against unsafe connection handling.

TheDatabasePool extendsDatabasePoolEventEmitter and exposes the following events:

error:(error: SlonikError) => void– emitted for all errors that happen within the pool.

import{createPool,typeDatabasePoolEventEmitter,}from'slonik';import{createPgDriverFactory,}from'@slonik/pg-driver';constpool=awaitcreatePool('postgres://localhost',{driverFactory:createPgDriverFactory(),});pool.on('error',(error)=>{console.error(error);});

pg is built intentionally to provide unopinionated, minimal abstraction and encourages use of other modules to implement convenience methods.

Slonik is built on top ofpg and it provides convenience methods forbuilding queries andquerying data.

Work onpg began onTue Sep 28 22:09:21 2010. It is authored byBrian Carlson.

As the name suggests,pg-promise was originally built to enable use ofpg module with promises (at the time,pg only supported Continuation Passing Style (CPS), i.e. callbacks). Since thenpg-promise added features for connection/ transaction handling, a powerful query-formatting engine and a declarative approach to handling query results.

The primary difference between Slonik andpg-promise:

- Slonik does not allow to execute raw text queries. Slonik queries can only be constructed using

sqltagged template literals. This designprotects against unsafe value interpolation. - Slonik implementsinterceptor API (middleware). Middlewares allow to modify connection handling, override queries and modify the query results. Example Slonik interceptors includefield name transformation,query normalization andquery benchmarking.

Note: Author ofpg-promise hasobjected to the above claims. I have removed a difference that was clearly wrong. I maintain that the above two differences remain valid differences: even thoughpg-promise might have substitute functionality for variable interpolation and interceptors, it implements them in a way that does not provide the same benefits that Slonik provides, namely: guaranteed security and support for extending library functionality using multiple plugins.

Other differences are primarily in how the equivalent features are implemented, e.g.

pg-promise | Slonik |

|---|---|

| Custom type formatting. | Not available in Slonik. The current proposal is to create an interceptor that would have access to thequery fragment constructor. |

| formatting filters | Slonik tagged templatevalue expressions to construct query fragments and bind parameter values. |

| Query files. | Useslonik-sql-tag-raw. |

| Tasks. | Usepool.connect. |

| Configurable transactions. | Not available in Slonik. Trackthis issue. |

| Events. | Useinterceptors. |

When weighting which abstraction to use, it would be unfair not to consider thatpg-promise is a mature project with dozens of contributors. Meanwhile, Slonik is a young project (started in March 2017) that until recently was developed without active community input. However, if you do support the unique features that Slonik adds, the opinionated API design, and are not afraid of adopting a technology in its young days, then I warmly invite you to adopt Slonik and become a contributor to what I intend to make the standard PostgreSQL client in the Node.js community.

Work onpg-promise beganWed Mar 4 02:00:34 2015. It is authored byVitaly Tomilov.

postgres recently gained in popularity due to its performance benefits when compared topg. In terms of API, it has a pretty bare-bones API that heavily relies on using ES6 tagged templates and abstracts away many concepts of connection pool handling. Whilepostgres API might be preferred by some, projects that already usepg may have difficulty migrating.

Type parsers describe how to parse PostgreSQL types.

typeTypeParser={name:string,parse:(value:string)=>*};

Example:

{name:'int8',parse:(value)=>{returnparseInt(value,10);}}

Note: Unlikepg-types that uses OIDs to identify types, Slonik identifies types using their names.

Use this query to find type names:

SELECT typnameFROM pg_typeORDER BY typnameASC

Type parsers are configured usingtypeParsers client configuration.

Read:Default type parsers.

| Type name | Implementation | Factory function name |

|---|---|---|

date | Produces a literal date as a string (format: YYYY-MM-DD). | createDateTypeParser |

int8 | Produces an integer. | createBigintTypeParser |

interval | Produces interval in seconds (integer). | createIntervalTypeParser |

numeric | Produces a float. | createNumericTypeParser |

timestamp | Produces a unix timestamp (in milliseconds). | createTimestampTypeParser |

timestamptz | Produces a unix timestamp (in milliseconds). | createTimestampWithTimeZoneTypeParser |

Built-in type parsers can be created using the exported factory functions, e.g.

import{createTimestampTypeParser}from'slonik';createTimestampTypeParser();// {// name: 'timestamp',// parse: (value) => {// return value === null ? value : Date.parse(value + ' UTC');// }// }

Functionality can be added to Slonik client by adding interceptors (middleware).

Interceptors are configured usingclient configuration, e.g.

import{createPool}from'slonik';import{createPgDriverFactory,}from'@slonik/pg-driver';constinterceptors=[];constconnection=awaitcreatePool('postgres://',{driverFactory:createPgDriverFactory(), interceptors});

Interceptors are executed in the order they are added.

Read:Default interceptors.

Interceptor is an object that implements methods that can change the behaviour of the database client at different stages of the connection life-cycle

typeInterceptor={afterPoolConnection?:(connectionContext:ConnectionContext,connection:DatabasePoolConnection)=>MaybePromise<null>,afterQueryExecution?:(queryContext:QueryContext,query:Query,result:QueryResult<QueryResultRow>)=>MaybePromise<QueryResult<QueryResultRow>>,beforePoolConnection?:(connectionContext:ConnectionContext)=>MaybePromise<?DatabasePool>,beforePoolConnectionRelease?:(connectionContext:ConnectionContext,connection:DatabasePoolConnection)=>MaybePromise<null>,beforeQueryExecution?:(queryContext:QueryContext,query:Query)=>MaybePromise<QueryResult<QueryResultRow>>|MaybePromise<null>,beforeQueryResult?:(queryContext:QueryContext,query:Query,result:QueryResult<QueryResultRow>)=>MaybePromise<null>,beforeTransformQuery?:(queryContext:QueryContext,query:Query)=>MaybePromise<null>,dataIntegrityError?:(queryContext:QueryContext,query:Query,error:DataIntegrityError,result:QueryResult<QueryResultRow>)=>MaybePromise<null>,queryExecutionError?:(queryContext:QueryContext,query:Query,error:SlonikError)=>MaybePromise<null>,transformQuery?:(queryContext:QueryContext,query:Query)=>Query,transformRow?:(queryContext:QueryContext,query:Query,row:QueryResultRow,fields:Field[],)=>MaybePromise<QueryResultRow>};

Executed after a connection is acquired from the connection pool (or a new connection is created), e.g.

constpool=awaitcreatePool('postgres://');// Interceptor is executed here. ↓pool.connect();

Executed after query has been executed and before rows were transformed usingtransformRow.

Note: When query is executed usingstream, thenafterQuery is called with empty result set.

This function can optionally return a direct result of the query which will cause the actual query never to be executed.

Executed just before the result is returned to the client.

Use this method to capture the result that will be returned to the client.

Executed beforetransformQuery. Use this interceptor to capture the original query (e.g. for logging purposes).

Executed before connection is created.

This function can optionally return a pool to another database, causing a connection to be made to the new pool.

Executed before connection is released back to the connection pool, e.g.

constpool=awaitcreatePool('postgres://');pool.connect(async()=>{await1;// Interceptor is executed here. ↓});

Executed if query execution produces an error.

UsequeryExecutionError to log and/ or re-throw another error.

Executed when a data integrity validation fails (e.g., when a query returns an unexpected number of rows or columns).

UsedataIntegrityError to log data integrity violations, perform custom validation logic, or handle data consistency issues. This middleware is called before theDataIntegrityError is thrown, allowing you to inspect the query result and error details.

Executed beforebeforeQueryExecution.

Transforms query.

Executed for each row.

Transforms row.

UsetransformRow to modify the query result.

| Name | Description |

|---|---|

slonik-interceptor-field-name-transformation | Transforms Slonik query result field names. |

slonik-interceptor-query-benchmarking | Benchmarks Slonik queries. |

slonik-interceptor-query-cache | Caches Slonik queries. |

slonik-interceptor-query-logging | Logs Slonik queries. |

slonik-interceptor-query-normalisation | Normalises Slonik queries. |

Check outslonik-interceptor-preset for an opinionated collection of interceptors.

Usesql.unnest to create a set of rows usingunnest. Using theunnest approach requires only 1 variable per every column; values for each column are passed as an array, e.g.

awaitconnection.query(sql.unsafe` INSERT INTO foo (bar, baz, qux) SELECT * FROM${sql.unnest([[1,2,3],[4,5,6]],['int4','int4','int4'])}`);

Produces:

{sql:'INSERT INTO foo (bar, baz, qux) SELECT * FROM unnest($1::int4[], $2::int4[], $3::int4[])',values:[[1,4],[2,5],[3,6]]}

Inserting data this way ensures that the query is stable and reduces the amount of time it takes to parse the query.

A typical load balancing requirement is to route all "logical" read-only queries to a read-only instance. This requirement can be implemented in 2 ways:

- Create two instances of Slonik (read-write and read-only) and pass them around the application as needed.

- Use

beforePoolConnectionmiddleware to assign query to a connection pool based on the query itself.

First option is preferable as it is the most explicit. However, it also has the most overhead to implement.

On the other hand,beforePoolConnection makes it easy to route based on conventions, but carries a greater risk of accidentally routing queries with side-effects to a read-only instance.

The first option is self-explanatory to implement, but this recipe demonstrates my convention for usingbeforePoolConnection to route queries.

Note: How you determine which queries are safe to route to a read-only instance is outside of scope for this documentation.

Note:beforePoolConnection only works for connections initiated by a query, i.e.pool#query and notpool#connect().

Note:pool#transaction triggersbeforePoolConnection but has noquery.

Note: This particular implementation does not handleSELECT INTO.

constreadOnlyPool=awaitcreatePool('postgres://read-only');constpool=awaitcreatePool('postgres://main',{interceptors:[{beforePoolConnection:(connectionContext)=>{if(!connectionContext.query?.sql.trim().startsWith('SELECT ')){// Returning null falls back to using the DatabasePool from which the query originates.returnnull;}// This is a convention for the edge-cases where a SELECT query includes a volatile function.// Adding a@volatile comment anywhere into the query bypasses the read-only route, e.g.// sql.unsafe`// /*@volatile */// SELECT write_log()// `if(connectionContext.query?.sql.includes('@volatile')){returnnull;}// Returning an instance of DatabasePool will attempt to run the query using the other connection pool.// Note that all other interceptors of the pool that the query originated from are short-circuited.returnreadOnlyPool;}}]});// This query will use `postgres://read-only` connection.pool.query(sql.typeAlias('id')`SELECT 1 AS id`);// This query will use `postgres://main` connection.pool.query(sql.typeAlias('id')`UPDATE 1 AS id`);

Parameter symbols only work in optimizable SQL commands (SELECT, INSERT, UPDATE, DELETE, and certain commands containing one of these). In other statement types (generically called utility statements, e.g. ALTER, CREATE, DROP and SET), you must insert values textually even if they are just data values.

In the context of Slonik, if you are building utility statements you must use query building methods that interpolate values directly into queries:

sql.identifier– for identifiers.sql.literalValue– for values.

Example:

awaitconnection.query(sql.typeAlias('void')` CREATE USER${sql.identifier(['foo'])} WITH PASSWORD${sql.literalValue('bar')}`);

If you are usingpgvector and need to insert vector data, you can use the following helper function:

constvector=(embeddings:number[])=>{returnsql.fragment`${sql.array(Array.from(embeddings),sql.fragment`real[]`,)}::vector`;};

Now you can use thevector helper function to insert vector data:

awaitconnection.query(sql.typeAlias('void')` INSERT INTO embeddings (id, vector) VALUES (1,${vector(embedding.data)})`);

You can also use thepgvector NPM package to achieve the same result.

Slonik integrateszod to provide runtime query result validation and static type inference.

Validating queries requires to:

- Add aresult parser interceptor during slonik initiialization

- For every query define a Zodobject and pass it to

sql.typetagged template (see below)

Build-time type safety guarantees that your application will work as expected at the time of the build (assuming that the types are correct in the first place).

The problem is that once you deploy the application, the database schema might change independently of the codebase. This drift may result in your application behaving in unpredictable and potentially dangerous ways, e.g., imagine if tableproduct changedprice fromnumeric totext. Without runtime validation, this would cause a cascade of problems and potential database corruption. Even worse, without runtime checks, this could go unnoticed for a long time.

In contrast, by using runtime checks, you can ensure that the contract between your codebase and the database is always respected. If there is a breaking change, the application fails with a loud error that is easy to debug.

By usingzod, we get the best of both worlds: type safety and runtime checks.

Slonik works without the interceptor, but it doesn't validate the query results. To validate results, you must implement an interceptor that parses the results.

For context, when Zod parsing was first introduced to Slonik, it was enabled for all queries by default. However, I eventually realized that the baked-in implementation is not going to suit everyone's needs. For this reason, I decided to take out the built-in interceptor in favor of providing examples for common use cases. What follows is based on the original default implementation.

import{typeInterceptor,typeQueryResultRow,SchemaValidationError,}from"slonik";constcreateResultParserInterceptor=():Interceptor=>{return{// If you are not going to transform results using Zod, then you should use `afterQueryExecution` instead.// Future versions of Zod will provide a more efficient parser when parsing without transformations.// You can even combine the two – use `afterQueryExecution` to validate results, and (conditionally)// transform results as needed in `transformRow`.transformRow:async(executionContext,actualQuery,row)=>{const{ log, resultParser}=executionContext;if(!resultParser){returnrow;}// It is recommended (but not required) to parse async to avoid blocking the event loop during validationconstvalidationResult=awaitresultParser.safeParseAsync(row);if(!validationResult.success){thrownewSchemaValidationError(actualQuery,row,validationResult.error.issues);}returnvalidationResult.dataasQueryResultRow;},};};

To use it, simply add it as a middleware:

import{createPool}from"slonik";import{createPgDriverFactory,}from'@slonik/pg-driver';createPool("postgresql://",{driverFactory:createPgDriverFactory(),interceptors:[createResultParserInterceptor()],});

Let's assume that you have a PostgreSQL tableperson:

CREATETABLE "public"."person" ("id"integer GENERATED ALWAYSAS IDENTITY,"name"textNOT NULL,PRIMARY KEY ("id"));

and you want to retrieve all persons in the database, along with theirid andname:

connection.any(sql.unsafe` SELECT id, name FROM person`);

With your knowledge of the database schema, define a zod object:

constpersonObject=z.object({id:z.number(),name:z.string(),});

Update your query to usesql.type tag and passpersonObject:

constpersonQuery=sql.type(personObject)` SELECT id, name FROM person`;

Finally, query the database using typedsql tagged template:

constpersons=awaitconnection.any(personQuery);

With this information, Slonik guarantees that every member ofpersons is an object that has propertiesid andname, which are a non-nullnumber and a non-nullstring respectively.

In the context of the network overhead, validation accounts for a tiny amount of the total execution time.

Just to give an idea, in our sample of data, it takes sub 0.1ms to validate 1 row, ~3ms to validate 1,000 and ~25ms to validate 100,000 rows.

By default Zod object schemas strip unknown keys. If you want to disallow unknown keys, you can add.strict() to your schema:

z.object({foo:z.string()}).strict()

Using therecommended parser pattern, this will produce aSchemaValidationError, when a query result includes unknown keys.

Conversely you can allow unknown keys to be passed through by using.passthrough():

z.object({foo:z.string()}).passthrough();

Note: Using.passthrough() is not recommended as it reduces type safety and may lead to unexpected behaviour.

If query produces a row that does not satisfy zod object, thenSchemaValidationError error is thrown.

SchemaValidationError includes properties that describe the query and validation errors:

sql– SQL of the query that produced unexpected row.row– row data that did not satisfy the schema.issues– array of unmet expectations.

Whenever this error occurs, the same information is also included in thelogs.

In most cases, you shouldn't attempt to handle these errors at individual query level – allow to propagate to the top of the application and fix the issue when you become aware of it.

However, in cases such as dealing with unstructured data, it might be useful to handle these errors at a query level, e.g.

import{SchemaValidationError}from'slonik';try{}catch(error){if(errorinstanceofSchemaValidationError){// Handle scheme validation error}}

You can infer the TypeScript type of the query result. There are couple of ways of doing it:

// Infer using StandardSchemaV1.<typeof yourSchema>// https://github.com/colinhacks/zod#type-inferencetypePerson=StandardSchemaV1.InferOutput<typeofpersonObject>;// from sql tagged template `parser` propertytypePerson=StandardSchemaV1.InferOutput<personQuery.parser>;

Using zodtransform you can refine the result shape and its type, e.g.

constcoordinatesType=z.string().transform((subject)=>{const[x,y,]=subject.split(',');return{x:Number(x),y:Number(y),};});constzodObject=z.object({foo:coordinatesType,});constquery=sql.type(zodObject)`SELECT '1,2' as foo`;constresult=awaitpool.one(query);expectTypeOf(result).toMatchTypeOf<{foo:{x:number,y:number,},}>();t.deepEqual(result,{foo:{x:1,y:2,},});

sql tag serves two purposes:

- It is used to construct queries with bound parameter values (seeValue placeholders).

- It used to generate dynamic query fragments (seeQuery building).

sql tag can be imported from Slonik package:

import{sql}from'slonik';

Sometimes it may be desirable to construct a custom instance ofsql tag. In those cases, you can use thecreateSqlTag factory, e.g.

import{createSqlTag}from'slonik';constsql=createSqlTag();

You can create asql tag with a predefined set of Zod type aliases that can be later referenced when creating a query withruntime validation.

Slonik documentation assumes that these type aliases are defined:

constsql=createSqlTag({typeAliases:{// `foo` is a documentation specific examplefoo:z.object({foo:z.string(),}),id:z.object({id:z.number(),}),void:z.object({}).strict(),}})

These are documentation specific examples that you are not expected to blindly copy. However,id andvoid are recommended aliases as they reflect common patterns, e.g.

constpersonId=awaitpool.oneFirst(sql.typeAlias('id')` SELECT id FROM person `);awaitpool.query(sql.typeAlias('void')` INSERT INTO person_view (person_id) VALUES (${personId})`);

Slonik query methods can only be executed usingsqltagged template literal, e.g.

import{sql}from'slonik'connection.query(sql.typeAlias('id')` SELECT 1 AS id FROM foo WHERE bar =${'baz'}`);

The above is equivalent to evaluating:

SELECT1AS idFROM fooWHERE bar= $1

query with 'baz' value binding.

Manually constructing queries is not allowed.

There is an internal mechanism that checks to see if query was created usingsql tagged template literal, i.e.

constquery={sql:'SELECT 1 AS id FROM foo WHERE bar = $1',type:'SQL',values:['baz']};connection.query(query);

Will result in an error:

Query must be constructed using

sqltagged template literal.

This is a security measure designed to prevent unsafe query execution.

Furthermore, a query object constructed usingsql tagged template literal isfrozen to prevent further manipulation.

sql tagged template literals can be nested, e.g.

constquery0=sql.unsafe`SELECT${'foo'} FROM bar`;constquery1=sql.unsafe`SELECT${'baz'} FROM (${query0})`;

Produces:

{sql:'SELECT $1 FROM (SELECT $2 FROM bar)',values:['baz','foo']}

Queries are built using methods of thesql tagged template literal.

If this is your first time using Slonik, readDynamically generating SQL queries using Node.js.

(values:readonlyPrimitiveValueExpression[],memberType:SqlFragment|TypeNameIdentifier,)=>ArraySqlToken,

Creates an array value binding, e.g.

awaitconnection.query(sql.typeAlias('id')` SELECT (${sql.array([1,2,3],'int4')}) AS id`);

Produces:

{sql:'SELECT $1::"int4"[]',values:[[1,2,3]]}

IfmemberType is a string (TypeNameIdentifier), then it is treated as a type name identifier and will be quoted using double quotes, i.e.sql.array([1, 2, 3], 'int4') is equivalent to$1::"int4"[]. The implication is that keywords that are often used interchangeably with type names are not going to work, e.g.int4 is a type name identifier and will work. However,int is a keyword and will not work. You can either use type name identifiers or you can construct custom member usingsql.fragment tag, e.g.

awaitconnection.query(sql.typeAlias('id')` SELECT (${sql.array([1,2,3],sql.fragment`int[]`)}) AS id`);

Produces:

{sql:'SELECT $1::int[]',values:[[1,2,3]]}

Unlikesql.join,sql.array generates a stable query of a predictable length, i.e. regardless of the number of values in the array, the generated query remains the same:

- Having a stable query enables

pg_stat_statementsto aggregate all query execution statistics. - Keeping the query length short reduces query parsing time.

Example:

sql.typeAlias('id')` SELECT id FROM foo WHERE id IN (${sql.join([1,2,3],sql.fragment`, `)})`;sql.typeAlias('id')` SELECT id FROM foo WHERE id NOT IN (${sql.join([1,2,3],sql.fragment`, `)})`;

Is equivalent to:

sql.typeAlias('id')` SELECT id FROM foo WHERE id = ANY(${sql.array([1,2,3],'int4')})`;sql.typeAlias('id')` SELECT id FROM foo WHERE id != ALL(${sql.array([1,2,3],'int4')})`;

Furthermore, unlikesql.join,sql.array can be used with an empty array of values. In short,sql.array should be preferred oversql.join when possible.

(data:Buffer)=>BinarySqlToken;

Binds binary (bytea) data, e.g.

awaitconnection.query(sql.unsafe` SELECT${sql.binary(Buffer.from('foo'))}`);

Produces:

{sql:'SELECT $1',values:[Buffer.from('foo')]}

(date:Date)=>DateSqlToken;

Inserts a date, e.g.

awaitconnection.query(sql.unsafe` SELECT${sql.date(newDate('2022-08-19T03:27:24.951Z'))}`);

Produces:

{sql:'SELECT $1::date',values:['2022-08-19']}

(template:TemplateStringsArray, ...values:ValueExpression[])=>SqlFragment;

A SQL fragment, e.g.

sql.fragment`FOO`

Produces:

{sql:'FOO',values:[]}

SQL fragments can be used to build more complex queries, e.g.

constwhereFragment=sql.fragment` WHERE bar = 'baz';`;sql.typeAlias('id')` SELECT id FROM foo${whereFragment}`

There are two primary differences:

- Fragments are untyped and they cannot be used as inputs to query methods (use

sql.typeinstead). - Queries are expected to be valid SQL if executed (e.g.

SELECT * FROM foo); fragments are expected to be validfragments of SQL (e.g.WHERE bar = 1).

Warning

Due to the way that Slonik internally represents SQL fragments, your query must not contain$slonik_ literals.

(names:string[],)=>IdentifierSqlToken;

Delimited identifiers are created by enclosing an arbitrary sequence of characters in double-quotes ("). To create a delimited identifier, create ansql tag function placeholder value usingsql.identifier, e.g.

sql.typeAlias('id')` SELECT 1 AS id FROM${sql.identifier(['bar','baz'])}`;

Produces:

{sql:'SELECT 1 FROM "bar"."baz"',values:[]}

(interval:{years?:number,months?:number,weeks?:number,days?:number,hours?:number,minutes?:number,seconds?:number,})=>IntervalSqlToken;

Inserts aninterval, e.g.

sql.typeAlias('id')` SELECT 1 AS id FROM${sql.interval({days:3})}`;

Produces:

{sql:'SELECT make_interval("days" => $1)',values:[3]}

You can usesql.interval exactly how you would use PostgreSQLmake_interval function. However, notice that Slonik does not use abbreviations, i.e. "secs" is seconds and "mins" is minutes.

make_interval | sql.interval | Interval output |

|---|---|---|

make_interval("days" => 1, "hours" => 2) | sql.interval({days: 1, hours: 2}) | 1 day 02:00:00 |

make_interval("mins" => 1) | sql.interval({minutes: 1}) | 00:01:00 |

make_interval("secs" => 120) | sql.interval({seconds: 120}) | 00:02:00 |

make_interval("secs" => 0.001) | sql.interval({seconds: 0.001}) | 00:00:00.001 |

If you need a dynamic interval (e.g. X days), you can achieve this using multiplication, e.g.

sql.unsafe` SELECT${2} * interval '1 day'`

The above is equivalent tointerval '2 days'.

You could also usemake_interval() directly, e.g.

sql.unsafe` SELECT make_interval("days" =>${2})`

sql.interval was added mostly as a type-safe alternative.

(members:SqlSqlToken[],glue:SqlSqlToken)=>ListSqlToken;

Concatenates SQL expressions usingglue separator, e.g.

awaitconnection.query(sql.unsafe` SELECT${sql.join([1,2,3],sql.fragment`, `)}`);

Produces:

{sql:'SELECT $1, $2, $3',values:[1,2,3]}

sql.join is the primary building block for most of the SQL, e.g.

Boolean expressions:

sql.unsafe` SELECT${sql.join([1,2],sql.fragment` AND `)}`// SELECT $1 AND $2

Tuple:

sql.unsafe` SELECT (${sql.join([1,2],sql.fragment`, `)})`// SELECT ($1, $2)

Tuple list:

sql.unsafe` SELECT${sql.join([sql.fragment`(${sql.join([1,2],sql.fragment`, `)})`,sql.fragment`(${sql.join([3,4],sql.fragment`, `)})`,],sql.fragment`, `)}`// SELECT ($1, $2), ($3, $4)

(value:SerializableValue)=>JsonSqlToken;

Serializes value and binds it as a JSON string literal, e.g.

awaitconnection.query(sql.unsafe` SELECT (${sql.json([1,2,3])})`);

Produces:

{sql:'SELECT $1::json',values:['[1,2,3]']}

(value:SerializableValue)=>JsonBinarySqlToken;

Serializes value and binds it as a JSON binary, e.g.

awaitconnection.query(sql.unsafe` SELECT (${sql.jsonb([1,2,3])})`);

Produces:

{sql:'SELECT $1::jsonb',values:['[1,2,3]']}

⚠️ Do not use. This method interpolates values as literals and it must be used only forbuilding utility statements. You are most likely looking forvalue placeholders.

(value:string,)=>SqlSqlToken;

Escapes and interpolates a literal value into a query.

awaitconnection.query(sql.unsafe` CREATE USER "foo" WITH PASSWORD${sql.literalValue('bar')}`);

Produces:

{sql:'CREATE USER "foo" WITH PASSWORD \'bar\''}

(date:Date)=>TimestampSqlToken;

Inserts a timestamp, e.g.

awaitconnection.query(sql.unsafe` SELECT${sql.timestamp(newDate('2022-08-19T03:27:24.951Z'))}`);

Produces:

{sql:'SELECT to_timestamp($1)',values:['1660879644.951']}

(tuples:ReadonlyArray<readonlyany[]>,columnTypes:Array<[...string[],TypeNameIdentifier]>|Array<SqlSqlToken|TypeNameIdentifier>):UnnestSqlToken;

Creates anunnest expressions, e.g.

awaitconnection.query(sql.unsafe` SELECT bar, baz FROM${sql.unnest([[1,'foo'],[2,'bar']],['int4','text'])} AS foo(bar, baz)`);

Produces:

{sql:'SELECT bar, baz FROM unnest($1::"int4"[], $2::"text"[]) AS foo(bar, baz)',values:[[1,2],['foo','bar']]}

IfcolumnType array member type isstring, it will treat it as a type name identifier (and quote with double quotes; illustrated in the example above).

IfcolumnType array member type isSqlToken, it will inline type name without quotes, e.g.

awaitconnection.query(sql.unsafe` SELECT bar, baz FROM${sql.unnest([[1,'foo'],[2,'bar']],[sql.fragment`integer`,sql.fragment`text`])} AS foo(bar, baz)`);

Produces:

{sql:'SELECT bar, baz FROM unnest($1::integer[], $2::text[]) AS foo(bar, baz)',values:[[1,2],['foo','bar']]}

IfcolumnType array member type is[...string[], TypeNameIdentifier], it will act assql.identifier, e.g.

awaitconnection.query(sql.unsafe` SELECT bar, baz FROM${sql.unnest([[1,3],[2,4]],[['foo','int4'],['foo','int4']])} AS foo(bar, baz)`);

Produces:

{sql:'SELECT bar, baz FROM unnest($1::"foo"."int4"[], $2::"foo"."int4"[]) AS foo(bar, baz)',values:[[1,2],[3,4]]}

(template:TemplateStringsArray, ...values:ValueExpression[])=>QuerySqlToken;

Creates a query with Zodany type. The result of such a query has TypeScript typeany.

constresult=awaitconnection.one(sql.unsafe` SELECT foo FROM bar`);// `result` type is `any`

sql.unsafe is effectively a shortcut tosql.type(z.any()).

sql.unsafe is as a convenience method for development. Your production code must not usesql.unsafe. Instead,

- Use

sql.typeto type the query result - Use

sql.typeAliasto alias an existing type - Use

sql.fragmentif you are writing a fragment of a query

(uuid:string)=>TimestampSqlToken;

Inserts a UUID, e.g.

awaitconnection.query(sql.unsafe` SELECT${sql.uuid('00000000-0000-0000-0000-000000000000')}`);

Produces:

{sql:'SELECT $1::uuid',values:['00000000-0000-0000-0000-000000000000']}

Returns result rows.

Example:

constrows=awaitconnection.any(sql.typeAlias('foo')`SELECT foo`);

#any is similar to#query except that it returns rows without fields information.

Returns value of the first column of every row in the result set.

- Throws

DataIntegrityErrorif query returns multiple columns.

Example:

constfooValues=awaitconnection.anyFirst(sql.typeAlias('foo')`SELECT foo`);

Returns a boolean value indicating whether query produces results.

The query that is passed to this function is wrapped inSELECT exists() prior to it getting executed, i.e.

pool.exists(sql.typeAlias('void')` SELECT LIMIT 1`)

is equivalent to:

pool.oneFirst(sql.unsafe` SELECT exists( SELECT LIMIT 1 )`)

Returns result rows.

- Throws

NotFoundErrorif query returns no rows.

Example:

constrows=awaitconnection.many(sql.typeAlias('foo')`SELECT foo`);

Returns value of the first column of every row in the result set.

- Throws

NotFoundErrorif query returns no rows. - Throws

DataIntegrityErrorif query returns multiple columns.

Example:

constfooValues=awaitconnection.manyFirst(sql.typeAlias('foo')`SELECT foo`);

Selects the first row from the result.

- Returns

nullif row is not found. - Throws

DataIntegrityErrorif query returns multiple rows.

Example:

constrow=awaitconnection.maybeOne(sql.typeAlias('foo')`SELECT foo`);// row.foo is the result of the `foo` column value of the first row.

Returns value of the first column from the first row.

- Returns

nullif row is not found. - Throws

DataIntegrityErrorif query returns multiple rows. - Throws

DataIntegrityErrorif query returns multiple columns.

Example:

constfoo=awaitconnection.maybeOneFirst(sql.typeAlias('foo')`SELECT foo`);// foo is the result of the `foo` column value of the first row.

Selects the first row from the result.

- Throws

NotFoundErrorif query returns no rows. - Throws

DataIntegrityErrorif query returns multiple rows.

Example:

constrow=awaitconnection.one(sql.typeAlias('foo')`SELECT foo`);// row.foo is the result of the `foo` column value of the first row.

Note:

I've been asked "What makes this different fromknex.js

knex('foo').limit(1)?".knex('foo').limit(1)simply generates "SELECT * FROM foo LIMIT 1" query.knexis a query builder; it does not assert the value of the result.Slonik#oneadds assertions about the result of the query.

Returns value of the first column from the first row.

- Throws

NotFoundErrorif query returns no rows. - Throws

DataIntegrityErrorif query returns multiple rows. - Throws

DataIntegrityErrorif query returns multiple columns.

Example:

constfoo=awaitconnection.oneFirst(sql.typeAlias('foo')`SELECT foo`);// foo is the result of the `foo` column value of the first row.

API and the result shape are equivalent topg#query.

Example:

awaitconnection.query(sql.typeAlias('foo')`SELECT foo`);// {// command: 'SELECT',// fields: [],// notices: [],// rowCount: 1,// rows: [// {// foo: 'bar'// }// ]// }

Streams query results.

Example:

awaitconnection.stream(sql.typeAlias('foo')`SELECT foo`,(stream)=>{stream.on('data',(row)=>{row;// {// data: {// foo: 'bar'// },// fields: [// {// name: 'foo',// dataTypeId: 23,// }// ]// }});});

You can also use theAsyncIterable interface:

awaitconnection.stream(sql.typeAlias('foo')`SELECT foo`,async(stream)=>{forawait(constrowofstream){row;// {// data: {// foo: 'bar'// },// fields: [// {// name: 'foo',// dataTypeId: 23,// }// ]// }}});

transaction method is used wrap execution of queries inSTART TRANSACTION andCOMMIT orROLLBACK.COMMIT is called if the transaction handler returns a promise that resolves;ROLLBACK is called otherwise.

transaction method can be used together withcreatePool method. When used to create a transaction from an instance of a pool, a new connection is allocated for the duration of the transaction.

constresult=awaitconnection.transaction(async(transactionConnection)=>{awaittransactionConnection.query(sql.unsafe`INSERT INTO foo (bar) VALUES ('baz')`);awaittransactionConnection.query(sql.unsafe`INSERT INTO qux (quux) VALUES ('corge')`);return'FOO';});result==='FOO';

Slonik usesSAVEPOINT to automatically nest transactions, e.g.

awaitconnection.transaction(async(t1)=>{awaitt1.query(sql.unsafe`INSERT INTO foo (bar) VALUES ('baz')`);returnt1.transaction((t2)=>{returnt2.query(sql.unsafe`INSERT INTO qux (quux) VALUES ('corge')`);});});

is equivalent to:

START TRANSACTION;INSERT INTO foo (bar)VALUES ('baz');SAVEPOINT slonik_savepoint_1;INSERT INTO qux (quux)VALUES ('corge');COMMIT;

Slonik automatically rollsback to the last savepoint if a query belonging to a transaction results in an error, e.g.

awaitconnection.transaction(async(t1)=>{awaitt1.query(sql.unsafe`INSERT INTO foo (bar) VALUES ('baz')`);try{awaitt1.transaction(async(t2)=>{awaitt2.query(sql.unsafe`INSERT INTO qux (quux) VALUES ('corge')`);returnPromise.reject(newError('foo'));});}catch(error){}});

is equivalent to:

START TRANSACTION;INSERT INTO foo (bar)VALUES ('baz');SAVEPOINT slonik_savepoint_1;INSERT INTO qux (quux)VALUES ('corge');ROLLBACK TO SAVEPOINT slonik_savepoint_1;COMMIT;

If error is unhandled, then the entire transaction is rolledback, e.g.

awaitconnection.transaction(async(t1)=>{awaitt1.query(sql.typeAlias('void')`INSERT INTO foo (bar) VALUES ('baz')`);awaitt1.transaction(async(t2)=>{awaitt2.query(sql.typeAlias('void')`INSERT INTO qux (quux) VALUES ('corge')`);awaitt1.transaction(async(t3)=>{awaitt3.query(sql.typeAlias('void')`INSERT INTO uier (grault) VALUES ('garply')`);returnPromise.reject(newError('foo'));});});});

is equivalent to:

START TRANSACTION;INSERT INTO foo (bar)VALUES ('baz');SAVEPOINT slonik_savepoint_1;INSERT INTO qux (quux)VALUES ('corge');SAVEPOINT slonik_savepoint_2;INSERT INTO uier (grault)VALUES ('garply');ROLLBACK TO SAVEPOINT slonik_savepoint_2;ROLLBACK TO SAVEPOINT slonik_savepoint_1;ROLLBACK;

Transactions that are failing withTransaction Rollback class errors are automatically retried.

A failing transaction will be rolled back and the callback function passed to the transaction method call will be executed again. Nested transactions are also retried until the retry limit is reached. If the nested transaction keeps failing with aTransaction Rollback error, then the parent transaction will be retried until the retry limit is reached.

How many times a transaction is retried is controlled usingtransactionRetryLimit configuration (default: 5) and thetransactionRetryLimit parameter of thetransaction method (default: undefined). If atransactionRetryLimit is given to the method call then it is used otherwise thetransactionRetryLimit configuration is used.

Transaction connections provide event emitter functionality to monitor transaction lifecycle events. This allows you to listen for transaction commits, rollbacks, and savepoint operations.

awaitconnection.transaction(async(transactionConnection)=>{// Listen for commit eventstransactionConnection.on('commit',({transactionId, transactionDepth})=>{console.log(`Transaction${transactionId} committed at depth${transactionDepth}`);});// Listen for rollback eventstransactionConnection.on('rollback',({transactionId, transactionDepth, error})=>{console.log(`Transaction${transactionId} rolled back:`,error.message);});// Access transaction metadataconsole.log('Transaction ID:',transactionConnection.transactionId);console.log('Transaction Depth:',transactionConnection.transactionDepth);awaittransactionConnection.query(sql.unsafe`INSERT INTO foo (bar) VALUES ('baz')`);// Commit event will be emitted automatically when transaction completes});

Available Events:

commit- Emitted when a top-level transaction (depth = 0) commits successfully- Parameters:

(event: {transactionId: string, transactionDepth: number})

- Parameters:

rollback- Emitted when any transaction rolls back due to an error- Parameters:

(event: {transactionId: string, transactionDepth: number, error: Error})

- Parameters:

savepoint- Emitted when a nested transaction creates a savepoint (depth > 0)- Parameters:

(event: {transactionId: string, transactionDepth: number})

- Parameters:

rollbackToSavepoint- Emitted when a nested transaction rolls back to its savepoint- Parameters:

(event: {transactionId: string, transactionDepth: number, error: Error})

- Parameters:

Nested Transaction Events:

awaitconnection.transaction(async(outerTransaction)=>{outerTransaction.on('savepoint',({transactionId, transactionDepth})=>{console.log(`Savepoint created at depth${transactionDepth}`);});outerTransaction.on('commit',({transactionId, transactionDepth})=>{console.log(`Transaction committed at depth${transactionDepth}`);});awaitouterTransaction.transaction(async(innerTransaction)=>{// This will emit a 'savepoint' event// innerTransaction shares the same event emitter as outerTransactionawaitinnerTransaction.query(sql.unsafe`INSERT INTO nested (value) VALUES ('test')`);});// Only the outer transaction will emit a 'commit' event});

Transaction Metadata:

Transaction connections provide access to transaction metadata:

transactionId- Unique identifier for the transaction (consistent across nested levels)transactionDepth- Current nesting level (0 for top-level, 1+ for nested transactions)

All standard EventEmitter methods are available:on(),off(),once(),removeListener(),removeAllListeners(), etc.

A single query (not part of a transaction) failing with aTransaction Rollback class error is automatically retried.

How many times it is retried is controlled by using thequeryRetryLimit configuration (default: 5).

(dsn:string,)=>ConnectionOptions;

Parses DSN toConnectionOptions type.

Example:

import{parseDsn,}from'slonik';parseDsn('postgresql://foo@localhost/bar?application_name=baz');

(connectionOptions:ConnectionOptions,)=>string;

StringifiesConnectionOptions to a DSN.

Example:

import{stringifyDsn,}from'slonik';stringifyDsn({host:'localhost',username:'foo',databaseName:'bar',applicationName:'baz',});

All Slonik errors extend fromSlonikError, i.e. You can catch Slonik specific errors using the following logic.

import{SlonikError}from'slonik';try{awaitquery();}catch(error){if(errorinstanceofSlonikError){// This error is thrown by Slonik.}}

When error originates fromnode-postgres, the original error is available undercause property.

This property is exposed for debugging purposes only. Do not use it for conditional checks – it can change.

If you require to extract meta-data about a specific type of error (e.g. constraint violation name), raise a GitHub issue describing your use case.

BackendTerminatedError is thrown when the backend is terminated by the user, i.e.pg_terminate_backend.

BackendTerminatedError must be handled at the connection level, i.e.

awaitpool.connect(async(connection0)=>{try{awaitpool.connect(async(connection1)=>{constbackendProcessId=awaitconnection1.oneFirst(sql.typeAlias('id')`SELECT pg_backend_pid() AS id`);setTimeout(()=>{connection0.query(sql.typeAlias('void')`SELECT pg_cancel_backend(${backendProcessId})`)},2000);try{awaitconnection1.query(sql.typeAlias('void')`SELECT pg_sleep(30)`);}catch(error){// This code will not be executed.}});}catch(error){if(errorinstanceofBackendTerminatedError){// Handle backend termination.}else{throwerror;}}});

CheckIntegrityConstraintViolationError is thrown when PostgreSQL responds withcheck_violation (23514) error.

ConnectionError is thrown when connection cannot be established to the PostgreSQL server.

To handle the case where the data result does not match the expectations, catchDataIntegrityError error.

import{DataIntegrityError}from'slonik';letrow;try{row=awaitconnection.one(sql.typeAlias('foo')`SELECT foo`);}catch(error){if(errorinstanceofDataIntegrityError){console.error('There is more than one row matching the select criteria.');}else{throwerror;}}

ForeignKeyIntegrityConstraintViolationError is thrown when PostgreSQL responds withforeign_key_violation (23503) error.

Note

NotFoundError extends fromDataIntegrityError.

To handle the case where query returns less than one row, catchNotFoundError error.

import{NotFoundError}from'slonik';letrow;try{row=awaitconnection.one(sql.typeAlias('foo')`SELECT foo`);}catch(error){if(!(errorinstanceofNotFoundError)){throwerror;}}if(row){// row.foo is the result of the `foo` column value of the first row.}

NotNullIntegrityConstraintViolationError is thrown when PostgreSQL responds withnot_null_violation (23502) error.

StatementCancelledError is thrown when a query is cancelled by the user (i.e.pg_cancel_backend) or in case of a timeout.

It should be safe to use the same connection ifStatementCancelledError is handled, e.g.

awaitpool.connect(async(connection0)=>{awaitpool.connect(async(connection1)=>{constbackendProcessId=awaitconnection1.oneFirst(sql.typeAlias('id')`SELECT pg_backend_pid() AS id`);setTimeout(()=>{connection0.query(sql.typeAlias('void')`SELECT pg_cancel_backend(${backendProcessId})`)},2000);try{awaitconnection1.query(sql.typeAlias('void')`SELECT pg_sleep(30)`);}catch(error){if(errorinstanceofStatementCancelledError){// Safe to continue using the same connection.}else{throwerror;}}});});

StatementTimeoutError inherits fromStatementCancelledError and it is called only in case of a timeout.

UniqueIntegrityConstraintViolationError is thrown when PostgreSQL responds withunique_violation (23505) error.

TupleMovedToAnotherPartitionError is thrown whenaffecting tuple moved into different partition.

This library intentionally doesn't handle migrations, because a database client and migrations are conceptually distinct problems.

My personal preference is to useFlyway – it is a robust solution that many DBAs are already familiar with.

The Slonik community has also shared their successes with these Node.js frameworks:

This package is usingTypeScript types.

Refer to./packages/slonik/src/types.ts.

The public interface exports the following types:

CommonQueryMethods(most generic)DatabaseConnection(DatabasePool | DatabasePoolConnection)DatabasePoolDatabasePoolConnectionDatabaseTransactionConnection

Use these types to annotateconnection instance in your code base, e.g.

import{typeDatabaseConnection}from'slonik';exportdefaultasync(connection:DatabaseConnection,code:string):Promise<number>=>{returnawaitconnection.oneFirst(sql.typeAlias('id')` SELECT id FROM country WHERE code =${code} `);};

Slonik usesroarr to log queries.

To enable logging, defineROARR_LOG=true environment variable.

By default, Slonik logs only connection events, e.g. when connection is created, connection is acquired and notices.

Query-level logging can be added usingslonik-interceptor-query-logging interceptor.

Note: Requiresslonik-interceptor-query-logging.

EnablingcaptureStackTrace configuration will create a stack trace before invoking the query and include the stack trace in the logs, e.g.

{ "context": { "package": "slonik", "namespace": "slonik", "logLevel": 20, "executionTime": "357 ms", "queryId": "01CV2V5S4H57KCYFFBS0BJ8K7E", "rowCount": 1, "sql": "SELECT schedule_cinema_data_task();", "stackTrace": [ "/node_modules/slonik/dist:162:28", "/node_modules/slonik/dist:314:12", "/node_modules/slonik/dist:361:20", "/node_modules/slonik/dist/utilities:17:13", "/src/bin/commands/do-cinema-data-tasks.js:59:21", "/src/bin/commands/do-cinema-data-tasks.js:590:45", "internal/process/next_tick.js:68:7" ], "values": [] }, "message": "query", "sequence": 4, "time": 1540915127833, "version": "1.0.0"}{ "context": { "package": "slonik", "namespace": "slonik", "logLevel": 20, "executionTime": "66 ms", "queryId": "01CV2V5SGS0WHJX4GJN09Z3MTB", "rowCount": 1, "sql": "SELECT cinema_id \"cinemaId\", target_data \"targetData\" FROM cinema_data_task WHERE id = ?", "stackTrace": [ "/node_modules/slonik/dist:162:28", "/node_modules/slonik/dist:285:12", "/node_modules/slonik/dist/utilities:17:13", "/src/bin/commands/do-cinema-data-tasks.js:603:26", "internal/process/next_tick.js:68:7" ], "values": [ 17953947 ] }, "message": "query", "sequence": 5, "time": 1540915127902, "version": "1.0.0"}Use@roarr/cli to pretty-print the output.

UsingAtom IDE you can leverage thelanguage-babel package in combination with thelanguage-sql to enable highlighting of the SQL strings in the codebase.

To enable highlighting, you need to:

- Install

language-babelandlanguage-sqlpackages. - Configure

language-babel"JavaScript Tagged Template Literal Grammar Extensions" setting to uselanguage-sqlto highlight template literals withsqltag (configuration value:sql:source.sql). - Use

sqlhelper to construct the queries.

For more information, refer to theJavaScript Tagged Template Literal Grammar Extensions documentation oflanguage-babel package.

Thevscode-sql-lit extension provides syntax highlighting for VS Code:

Running Slonik tests requires having a local PostgreSQL instance.

The easiest way to setup a temporary instance for testing is using Docker, e.g.

docker run --name slonik-test --rm -it -e POSTGRES_PASSWORD=postgres -p 5432:5432 postgres -N 1000

About

A Node.js PostgreSQL client with runtime and build time type safety, and composable SQL.

Topics

Resources

License

Uh oh!

There was an error while loading.Please reload this page.

Stars

Watchers

Forks

Sponsor this project

Uh oh!

There was an error while loading.Please reload this page.