
Official PyTorch implementation of Superpoint Transformer introduced in [ICCV'23] "Efficient 3D Semantic Segmentation with Superpoint Transformer" and SuperCluster introduced in [3DV'24 Oral] "Scalable 3D Panoptic Segmentation As Superpoint Graph Clustering"


drprojects/superpoint_transformer


Official implementation for

Efficient 3D Semantic Segmentation with Superpoint Transformer (ICCV 2023)

Scalable 3D Panoptic Segmentation As Superpoint Graph Clustering (3DV 2024 Oral)

EZ-SP: Fast and Lightweight Superpoint-Based 3D Segmentation (arXiv)

If you ❤️ or simply use this project, don't forget to give the repository a ⭐, it means a lot to us!

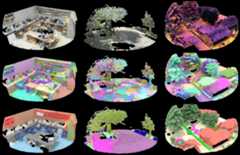

Superpoint Transformer (SPT) is a superpoint-based transformer 🤖 architecture that efficiently ⚡ performs semantic segmentation on large-scale 3D scenes. This method includes a fast algorithm that partitions 🧩 point clouds into a hierarchical superpoint structure, as well as a self-attention mechanism to exploit the relationships between superpoints at multiple scales.

| ✨ SPT in numbers ✨ |
|---|
| 📊 S3DIS 6-Fold (76.0 mIoU) |
| 📊 KITTI-360 Val (63.5 mIoU) |
| 📊 DALES (79.6 mIoU) |
| 🦋 212k parameters (PointNeXt ÷ 200, Stratified Transformer ÷ 40) |
| ⚡ S3DIS training in 3h on 1 GPU (PointNeXt ÷ 7, Stratified Transformer ÷ 70) |
| ⚡ Preprocessing 7× faster than SPG |

SuperCluster is a superpoint-based architecture for panoptic segmentation of (very) large 3D scenes 🐘 based on SPT. We formulate the panoptic segmentation task as a scalable superpoint graph clustering task. To this end, our model is trained to predict the input parameters of a graph optimization problem whose solution is a panoptic segmentation 💡. This formulation allows supervising our model with per-node and per-edge objectives only, circumventing the need for computing an actual panoptic segmentation and associated matching issues at train time. At inference time, our fast parallelized algorithm solves the small graph optimization problem, yielding object instances 👥. Due to its lightweight backbone and scalable formulation, SuperCluster can process scenes of unprecedented scale at once, on a single GPU 🚀, with fewer than 1M parameters 🦋.

| ✨ SuperCluster in numbers ✨ |
|---|
| 📊 S3DIS 6-Fold (55.9 PQ) |
| 📊 S3DIS Area 5 (50.1 PQ) |
| 📊 ScanNet Val (58.7 PQ) |
| 📊 KITTI-360 Val (48.3 PQ) |
| 📊 DALES (61.2 PQ) |
| 🦋 212k parameters (PointGroup ÷ 37) |
| ⚡ S3DIS training in 4h on 1 GPU |
| ⚡ 7.8km² tile of 18M points in 10.1s on 1 GPU |

EZ-SP brings two main improvements over SPT:

- Much faster preprocessing and inference

- Easier and learnable parametrization of the partition

EZ-SP replaces the costly, CPU-based, cut-pursuit partitioning step of SPT with a fast and learnable GPU-based partitioning. First, we train a small convolutional backbone to embed each point of the input scene into a low-dimensional space, where adjacent points from different semantic classes are pushed apart. Next, our new GPU-accelerated graph clustering algorithm groups neighboring points with similar embeddings, while encouraging simple cluster contours, thus producing semantically homogeneous superpoints. These superpoints can then be used in the SPT semantic segmentation framework.
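To give some intuition for "grouping neighboring points with similar embeddings", here is a toy sketch. It is not the EZ-SP algorithm: it swaps in a plain threshold-plus-union-find grouping, runs on the CPU, and ignores the contour-simplicity term entirely. The function name `group_points`, the `threshold` parameter, and the example data are all hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def group_points(
    embeddings: Sequence[Sequence[float]],
    edges: Sequence[Tuple[int, int]],
    threshold: float,
) -> List[int]:
    """Toy stand-in: merge adjacent points whose embeddings are close.

    `edges` lists pairs of spatially adjacent points. Two adjacent points
    end up in the same group when the Euclidean distance between their
    embeddings is at most `threshold` (a hypothetical parameter).
    """
    parent = list(range(len(embeddings)))

    def find(i: int) -> int:
        # Union-find lookup with path halving
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i, j in edges:
        dist = sum((a - b) ** 2 for a, b in zip(embeddings[i], embeddings[j])) ** 0.5
        if dist <= threshold:
            root_i, root_j = find(i), find(j)
            if root_i != root_j:
                parent[max(root_i, root_j)] = min(root_i, root_j)

    # Relabel group roots with consecutive ids, in order of first appearance
    labels: Dict[int, int] = {}
    return [labels.setdefault(find(i), len(labels)) for i in range(len(embeddings))]


# Four points on a line with 1D embeddings: two clear groups
toy_embeddings = [[0.0], [0.1], [0.9], [1.0]]
toy_edges = [(0, 1), (1, 2), (2, 3)]
print(group_points(toy_embeddings, toy_edges, threshold=0.3))  # → [0, 0, 1, 1]
```

Points 1 and 2 are adjacent but their embeddings are far apart, so the boundary falls between them. This is the role the learned embedding plays: pushing apart adjacent points from different semantic classes so that a clustering step can separate them.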

| ✨ EZ-SP in numbers ✨ |
|---|
| 📊 S3DIS 6-Fold (76.1 mIoU) |
| 📊 S3DIS Area 5 (69.6 mIoU) |
| 📊 KITTI-360 Val (62.0 mIoU) |
| 📊 DALES (79.4 mIoU) |
| 🦋 392k parameters |
| ⚡️ 72× faster than PTv3 for end-to-end semantic segmentation |
| ⚡️ 5.3× faster than SPT for end-to-end semantic segmentation |

- 27.11.2025 Major code release for our learnable, GPU-accelerated partition, implementing EZ-SP: Fast and Lightweight Superpoint-Based 3D Segmentation. This new version introduces some changes to the codebase which are not backward-compatible. We strove to document the breaking changes and provide instructions and scripts to help users of previous versions move to the new codebase. Please refer to the CHANGELOG for more details ❗

- 27.06.2024 Released our Superpoint Transformer 🧑‍🏫 tutorial slides, notebook, and video. Check these out if you are getting started with the project!

- 21.06.2024 Damien will be giving a 🧑‍🏫 tutorial on Superpoint Transformer on 📅 27.06.2024 at 1pm CEST. Make sure to come if you want to gain some hands-on experience with the project! Registration here.

- 28.02.2024 Major code release for panoptic segmentation, implementing Scalable 3D Panoptic Segmentation As Superpoint Graph Clustering. This new version also implements long-awaited features such as lightning's `predict()` behavior, voxel-resolution and full-resolution prediction. Some changes in the dependencies and repository structure are not backward-compatible. If you were already using earlier code versions, we recommend re-installing your conda environment and re-running the preprocessing of your datasets ❗

- 15.10.2023 Our paper Scalable 3D Panoptic Segmentation As Superpoint Graph Clustering was accepted for an oral presentation at 3DV 2024 🥳

- 06.10.2023 Come see our poster for Efficient 3D Semantic Segmentation with Superpoint Transformer at ICCV 2023

- 14.07.2023 Our paper Efficient 3D Semantic Segmentation with Superpoint Transformer was accepted at ICCV 2023 🥳

- 15.06.2023 Official release 🌱

This project was tested with:

- Linux OS

- 64G RAM

- NVIDIA GTX 1080 Ti 11G, NVIDIA V100 32G, NVIDIA A40 48G

- CUDA 11.8 and 12.1

- conda 23.3.1

Simply run `install.sh` to install all dependencies in a new conda environment named `spt`.

```bash
# Creates a conda env named 'spt' and installs dependencies
./install.sh
```

Optional dependency: TorchSparse is an optional dependency that enables sparse 3D convolutions, used in the EZ-SP models. To install an environment with this, use:

```bash
# Creates a conda env named 'spt' and installs all dependencies + TorchSparse
./install.sh with_torchsparse
```

Note: See the Datasets page for setting up your dataset path and file structure.

```
└── superpoint_transformer
    │
    ├── configs                   # Hydra configs
    │   ├── callbacks                 # Callbacks configs
    │   ├── data                      # Data configs
    │   ├── debug                     # Debugging configs
    │   ├── experiment                # Experiment configs
    │   ├── extras                    # Extra utilities configs
    │   ├── hparams_search            # Hyperparameter search configs
    │   ├── hydra                     # Hydra configs
    │   ├── local                     # Local configs
    │   ├── logger                    # Logger configs
    │   ├── model                     # Model configs
    │   ├── paths                     # Project paths configs
    │   ├── trainer                   # Trainer configs
    │   │
    │   ├── eval.yaml                 # Main config for evaluation
    │   └── train.yaml                # Main config for training
    │
    ├── data                      # Project data (see docs/datasets.md)
    │
    ├── docs                      # Documentation
    │
    ├── logs                      # Logs generated by hydra and lightning loggers
    │
    ├── media                     # Media illustrating the project
    │
    ├── notebooks                 # Jupyter notebooks
    │
    ├── scripts                   # Shell scripts
    │
    ├── src                       # Source code
    │   ├── data                      # Data structure for hierarchical partitions
    │   ├── datamodules               # Lightning DataModules
    │   ├── datasets                  # Datasets
    │   ├── dependencies              # Compiled dependencies
    │   ├── loader                    # DataLoader
    │   ├── loss                      # Loss
    │   ├── metrics                   # Metrics
    │   ├── models                    # Model architecture
    │   ├── nn                        # Model building blocks
    │   ├── optim                     # Optimization
    │   ├── transforms                # Functions for transforms, pre-transforms, etc
    │   ├── utils                     # Utilities
    │   ├── visualization             # Interactive visualization tool
    │   │
    │   ├── eval.py                   # Run evaluation
    │   └── train.py                  # Run training
    │
    ├── tests                     # Tests of any kind
    │
    ├── .env.example              # Example of file for storing private environment variables
    ├── .gitignore                # List of files ignored by git
    ├── .pre-commit-config.yaml   # Configuration of pre-commit hooks for code formatting
    ├── install.sh                # Installation script
    ├── LICENSE                   # Project license
    └── README.md
```

Note: See the Datasets page for further details on `data/`.

Note: See the Logs page for further details on `logs/`.

See the Datasets page to set up your datasets.

Use the following command structure for evaluating our models from a checkpoint file `checkpoint.ckpt`, where `<task>` should be `semantic` for using SPT and `panoptic` for using SuperCluster:

```bash
# Evaluate for <task> segmentation on <dataset>
python src/eval.py experiment=<task>/<dataset> ckpt_path=/path/to/your/checkpoint.ckpt
```

Some examples:

```bash
# Evaluate SPT on S3DIS Fold 5
python src/eval.py experiment=semantic/s3dis datamodule.fold=5 ckpt_path=/path/to/your/checkpoint.ckpt

# Evaluate SPT on KITTI-360 Val
python src/eval.py experiment=semantic/kitti360 ckpt_path=/path/to/your/checkpoint.ckpt

# Evaluate SPT on DALES
python src/eval.py experiment=semantic/dales ckpt_path=/path/to/your/checkpoint.ckpt

# Evaluate SuperCluster on S3DIS Fold 5
python src/eval.py experiment=panoptic/s3dis datamodule.fold=5 ckpt_path=/path/to/your/checkpoint.ckpt

# Evaluate SuperCluster on S3DIS Fold 5 with {wall, floor, ceiling} as 'stuff'
python src/eval.py experiment=panoptic/s3dis_with_stuff datamodule.fold=5 ckpt_path=/path/to/your/checkpoint.ckpt

# Evaluate SuperCluster on ScanNet Val
python src/eval.py experiment=panoptic/scannet ckpt_path=/path/to/your/checkpoint.ckpt

# Evaluate SuperCluster on KITTI-360 Val
python src/eval.py experiment=panoptic/kitti360 ckpt_path=/path/to/your/checkpoint.ckpt

# Evaluate SuperCluster on DALES
python src/eval.py experiment=panoptic/dales ckpt_path=/path/to/your/checkpoint.ckpt

# Evaluate EZ-SP on DALES
python src/eval.py experiment=semantic/dales_ezsp ckpt_path=/path/to/your/checkpoint.ckpt datamodule.pretrained_cnn_ckpt_path=/path/to/your/partition_checkpoint.ckpt
```
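If you evaluate several checkpoints in a row, the command structure above can be assembled programmatically. The sketch below is only a convenience wrapper around the documented command line: the helper name `build_eval_command` is hypothetical and not part of the project. It only builds the argument list and leaves running it to you.

```python
from typing import List, Optional


def build_eval_command(
    task: str,
    dataset: str,
    ckpt_path: str,
    fold: Optional[int] = None,
) -> List[str]:
    """Assemble `python src/eval.py ...` following the documented structure.

    Hypothetical helper: it does not check that the experiment config or
    the checkpoint actually exists.
    """
    if task not in ("semantic", "panoptic"):
        raise ValueError(f"task must be 'semantic' or 'panoptic', got {task!r}")
    command = ["python", "src/eval.py", f"experiment={task}/{dataset}"]
    if fold is not None:
        # Only some datasets use folds (S3DIS in the examples above)
        command.append(f"datamodule.fold={fold}")
    command.append(f"ckpt_path={ckpt_path}")
    return command


command = build_eval_command(
    "semantic", "s3dis", "/path/to/your/checkpoint.ckpt", fold=5
)
print(" ".join(command))
```

The resulting list can be passed to `subprocess.run(command, check=True)` from the root of the repository, inside the `spt` conda environment.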

Note:

The pretrained weights of the SPT and SPT-nano models for S3DIS 6-Fold, KITTI-360 Val, and DALES are available at:

The pretrained weights of the SuperCluster models for S3DIS 6-Fold, S3DIS 6-Fold with stuff, ScanNet Val, KITTI-360 Val, and DALES are available at:

The pretrained weights of the EZ-SP models for S3DIS 6-Fold, KITTI-360 Val, and DALES are available at:

Use the following command structure to train our models on a 32G-GPU, where `<task>` should be `semantic` for using SPT and `panoptic` for using SuperCluster:

```bash
# Train for <task> segmentation on <dataset>
python src/train.py experiment=<task>/<dataset>
```

Some examples:

```bash
# Train SPT on S3DIS Fold 5
python src/train.py experiment=semantic/s3dis datamodule.fold=5

# Train SPT on KITTI-360 Val
python src/train.py experiment=semantic/kitti360

# Train SPT on DALES
python src/train.py experiment=semantic/dales

# Train SuperCluster on S3DIS Fold 5
python src/train.py experiment=panoptic/s3dis datamodule.fold=5

# Train SuperCluster on S3DIS Fold 5 with {wall, floor, ceiling} as 'stuff'
python src/train.py experiment=panoptic/s3dis_with_stuff datamodule.fold=5

# Train SuperCluster on ScanNet Val
python src/train.py experiment=panoptic/scannet

# Train SuperCluster on KITTI-360 Val
python src/train.py experiment=panoptic/kitti360

# Train SuperCluster on DALES
python src/train.py experiment=panoptic/dales
```

Use the following to train on an 11G-GPU 💾 (training time and performance may vary):

```bash
# Train SPT on S3DIS Fold 5
python src/train.py experiment=semantic/s3dis_11g datamodule.fold=5

# Train SPT on KITTI-360 Val
python src/train.py experiment=semantic/kitti360_11g

# Train SPT on DALES
python src/train.py experiment=semantic/dales_11g

# Train SuperCluster on S3DIS Fold 5
python src/train.py experiment=panoptic/s3dis_11g datamodule.fold=5

# Train SuperCluster on S3DIS Fold 5 with {wall, floor, ceiling} as 'stuff'
python src/train.py experiment=panoptic/s3dis_with_stuff_11g datamodule.fold=5

# Train SuperCluster on ScanNet Val
python src/train.py experiment=panoptic/scannet_11g

# Train SuperCluster on KITTI-360 Val
python src/train.py experiment=panoptic/kitti360_11g

# Train SuperCluster on DALES
python src/train.py experiment=panoptic/dales_11g
```

Note: Encountering CUDA Out-Of-Memory errors 💀💾? See our dedicated troubleshooting section.

Note: Other ready-to-use configs are provided in `configs/experiment/`. You can easily design your own experiments by composing configs:

```bash
# Train Nano-3 for 50 epochs on DALES
python src/train.py datamodule=dales model=nano-3 trainer.max_epochs=50
```

See Lightning-Hydra for more information on how the config system works and all the awesome perks of the Lightning+Hydra combo.
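To make the dotted override syntax more concrete, the sketch below shows how keys such as `trainer.max_epochs=50` address nested fields. It is a simplified illustration and not Hydra's parser: the function `overrides_to_dict` is hypothetical, values are kept as strings, and config groups are not modeled. In Hydra itself, `datamodule=dales` and `model=nano-3` select config files from the corresponding groups rather than storing a plain string.

```python
from typing import Any, Dict, List


def overrides_to_dict(overrides: List[str]) -> Dict[str, Any]:
    """Map `a.b.c=value` strings to a nested dictionary (illustration only)."""
    config: Dict[str, Any] = {}
    for item in overrides:
        key, _, value = item.partition("=")
        *parents, leaf = key.split(".")
        node = config
        for name in parents:
            # Walk down the hierarchy, creating intermediate levels as needed
            node = node.setdefault(name, {})
        node[leaf] = value
    return config


print(overrides_to_dict(["datamodule=dales", "model=nano-3", "trainer.max_epochs=50"]))
# → {'datamodule': 'dales', 'model': 'nano-3', 'trainer': {'max_epochs': '50'}}
```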

Note: By default, your logs will automatically be uploaded to Weights and Biases, from where you can track and compare your experiments. Other loggers are available in `configs/logger/`. See Lightning-Hydra for more information on the logging options.

NB: The current EZ-SP implementation supports `<dataset>` among `s3dis`, `kitti360` and `dales`.

To train an EZ-SP model for semantic segmentation, you can either:

a) first train the small backbone for the partition, then train the full model for segmentation, or

b) use a checkpoint for the partition model and directly train the full model for segmentation.

For option a):

1. Train the partition model:

```bash
python src/train.py experiment=partition/<dataset>_ezsp
```

The checkpoint path will be logged (usually in `logs/train/runs/<run_dir>/checkpoints/last.ckpt`).

2. Train the semantic model with the learned partition:

```bash
python src/train.py experiment=semantic/<dataset>_ezsp datamodule.pretrained_cnn_ckpt_path=<partition_ckpt_path>
```

Replace `<partition_ckpt_path>` with the checkpoint from step 1.

For option b):

1. Set partition model checkpoints: download our released checkpoints of the partition model (named `ezsp_partition_<dataset>.ckpt`) and place them in the `ckpt/` folder at the root of the project.

2. Train the semantic model:

```bash
python src/train.py experiment=semantic/<dataset>_ezsp
```

The following provides technical details about the two stages described above:

Launching an experiment from `configs/experiment/partition` trains a small sparse CNN for EZ-SP that embeds every point into a low-dimensional space where adjacent points from different semantic classes are pushed apart. This first training stage is controlled by the `model.training_partition_stage` parameter.

Launching an experiment from `configs/experiment/semantic` that ends with `_ezsp` trains the full EZ-SP model for semantic segmentation. Note that these configurations require a checkpoint path to a partition model, specified via the `datamodule.pretrained_cnn_ckpt_path` parameter. The pretrained partition model is used to compute the hierarchical superpoint partition during preprocessing, on which the full model reasons during training.
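The two-stage workflow (train the partition model, then train the semantic model on top of it) can be scripted as sketched below. The helper `ezsp_two_stage_commands` and its `run_dir` argument are hypothetical. The checkpoint location follows the "usual" path mentioned above, so check the path actually logged by your partition run before launching the second stage.

```python
from typing import List, Tuple

# Datasets the current EZ-SP implementation is stated to support
SUPPORTED_DATASETS = ("s3dis", "kitti360", "dales")


def ezsp_two_stage_commands(dataset: str, run_dir: str) -> Tuple[List[str], List[str]]:
    """Return the (partition, semantic) training commands for one dataset.

    `run_dir` is the run directory name of the partition training, which is
    only known once stage 1 has started (hypothetical argument).
    """
    if dataset not in SUPPORTED_DATASETS:
        raise ValueError(f"dataset must be one of {SUPPORTED_DATASETS}, got {dataset!r}")

    # Stage 1: train the small partition backbone
    partition_cmd = ["python", "src/train.py", f"experiment=partition/{dataset}_ezsp"]

    # Stage 2: train the full model, pointing to the stage-1 checkpoint
    partition_ckpt = f"logs/train/runs/{run_dir}/checkpoints/last.ckpt"
    semantic_cmd = [
        "python",
        "src/train.py",
        f"experiment=semantic/{dataset}_ezsp",
        f"datamodule.pretrained_cnn_ckpt_path={partition_ckpt}",
    ]
    return partition_cmd, semantic_cmd


stage_1, stage_2 = ezsp_two_stage_commands("dales", run_dir="my_partition_run")
print(" ".join(stage_1))
print(" ".join(stage_2))
```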

Both SPT and SuperCluster inherit from `LightningModule` and implement `predict_step()`, which permits using PyTorch Lightning's `Trainer.predict()` mechanism.

```python
from torch.utils.data import DataLoader  # assumption: any torch-compatible DataLoader
from pytorch_lightning import Trainer

from src.models.semantic import SemanticSegmentationModule
from src.datamodules.s3dis import S3DISDataModule

# Predict behavior for semantic segmentation from a torch DataLoader
dataloader = DataLoader(...)
model = SemanticSegmentationModule(...)
trainer = Trainer(...)
batch, output = trainer.predict(model=model, dataloaders=dataloader)
```

This, however, still requires you to instantiate a `Trainer`, a `DataLoader`, and a model with relevant parameters.

For a little more simplicity, all our datasets inherit from `LightningDataModule` and implement `predict_dataloader()` by pointing to their corresponding test set by default. This permits directly passing a datamodule to PyTorch Lightning's `Trainer.predict()` without explicitly instantiating a `DataLoader`.

```python
from pytorch_lightning import Trainer

from src.models.semantic import SemanticSegmentationModule
from src.datamodules.s3dis import S3DISDataModule

# Predict behavior for semantic segmentation on S3DIS
datamodule = S3DISDataModule(...)
model = SemanticSegmentationModule(...)
trainer = Trainer(...)
batch, output = trainer.predict(model=model, datamodule=datamodule)
```

For more details on how to instantiate these, as well as the output format of our model, we strongly encourage you to play with our demo notebook and have a look at the `src/eval.py` script.

By design, our models only need to produce predictions for the superpoints of the

At inference time, however, we often need the predictions on the voxels of the

See our demo notebook for more details on these.

For running a pretrained model on your own point cloud, please refer to our tutorial slides, notebook, and video.

Our hierarchical superpoint partition is computed at preprocessing time. Its construction involves several steps whose parametrization must be adapted to your specific dataset and task. Please refer to our tutorial slides, notebook, and video to better understand this process and tune it to your needs.

One specificity of SuperCluster is that the model is not trained to explicitly do panoptic segmentation, but to predict the input parameters of a superpoint graph clustering problem whose solution is a panoptic segmentation.

For this reason, the hyperparameters for this graph optimization problem are selected after training, with a grid search on the training or validation set. We find that fairly similar hyperparameters yield the best performance on all our datasets (see our paper's appendix). Yet, you may want to explore these hyperparameters for your own dataset. To this end, see our demo notebook for parameterizing the panoptic segmentation.
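As a rough picture of what such a post-training grid search looks like, here is a generic sketch. It is not the project's implementation: the helper `grid_search`, the hyperparameter names `alpha` and `beta`, and the toy scoring function are all hypothetical. In practice, the score would be a panoptic metric computed on the training or validation set with the trained model's predictions held fixed.

```python
from itertools import product
from typing import Callable, Dict, Iterable, Mapping, Tuple


def grid_search(
    grid: Mapping[str, Iterable[float]],
    score: Callable[[Dict[str, float]], float],
) -> Tuple[Dict[str, float], float]:
    """Exhaustively evaluate `score` on the grid and return the best setting."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        current = score(params)
        if current > best_score:
            best_params, best_score = params, current
    if best_params is None:
        raise ValueError("the grid is empty")
    return best_params, best_score


def toy_score(params: Dict[str, float]) -> float:
    # Stand-in for a validation metric: peaks at alpha=10, beta=0.1
    return -((params["alpha"] - 10) ** 2) - (params["beta"] - 0.1) ** 2


best, value = grid_search(
    {"alpha": [1, 10, 100], "beta": [0.01, 0.1, 1.0]},  # hypothetical names and values
    toy_score,
)
print(best)  # → {'alpha': 10, 'beta': 0.1}
```

Because the network is not retrained between settings, each grid point only costs one run of the graph optimization and the metric computation.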

We provide notebooks to help you get started with manipulating our core data structures, configs loading, dataset and model instantiation, inference on each dataset, and visualization.

In particular, we created an interactive visualization tool ✨ which can be used to produce shareable HTMLs. Demos of how to use this tool are provided in the notebooks. Additionally, examples of such HTML files are provided in `media/visualizations.7z`.

| Location | Content |
|---|---|
| README | General introduction to the project |
| `docs/data_structures` | Introduction to the core data structures of this project: `Data`, `NAG`, `Cluster`, and `InstanceData` |
| `docs/datasets` | Introduction to our implemented datasets, to our `BaseDataset` class, and how to create your own dataset inheriting from it |
| `docs/logging` | Introduction to logging and the project's `logs/` structure |
| `docs/visualization` | Introduction to our interactive 3D visualization tool |

Note: We endeavoured to comment our code as much as possible to make this project usable. If you don't find the answer you are looking for in the `docs/`, make sure to have a look at the source code and past issues. Still, if you find some parts are unclear or some more documentation would be needed, feel free to let us know by creating an issue!

Here are some common issues and tips for tackling them.

Our default configurations are designed for a 32G-GPU. Yet, SPT and SuperCluster can run on an 11G-GPU 💾, with minor time and performance variations.

We provide configs in `configs/experiment/semantic` for training SPT on an 11G-GPU 💾:

```bash
# Train SPT on S3DIS Fold 5
python src/train.py experiment=semantic/s3dis_11g datamodule.fold=5

# Train SPT on KITTI-360 Val
python src/train.py experiment=semantic/kitti360_11g

# Train SPT on DALES
python src/train.py experiment=semantic/dales_11g
```

Similarly, we provide configs in `configs/experiment/panoptic` for training SuperCluster on an 11G-GPU 💾:

```bash
# Train SuperCluster on S3DIS Fold 5
python src/train.py experiment=panoptic/s3dis_11g datamodule.fold=5

# Train SuperCluster on S3DIS Fold 5 with {wall, floor, ceiling} as 'stuff'
python src/train.py experiment=panoptic/s3dis_with_stuff_11g datamodule.fold=5

# Train SuperCluster on ScanNet Val
python src/train.py experiment=panoptic/scannet_11g

# Train SuperCluster on KITTI-360 Val
python src/train.py experiment=panoptic/kitti360_11g

# Train SuperCluster on DALES
python src/train.py experiment=panoptic/dales_11g
```

Having some CUDA OOM errors 💀💾? Here are some parameters you can play with to mitigate GPU memory use, based on when the error occurs.

Parameters affecting CUDA memory.

Legend: 🟡 Preprocessing | 🔴 Training | 🟣 Inference (including validation and testing during training)

| Parameter | Description | When |
|---|---|---|
| `datamodule.xy_tiling` | Splits dataset tiles into `xy_tiling^2` smaller tiles, based on a regular XY grid. Ideal for square-shaped tiles à la DALES. Note this will affect the number of training steps. | 🟡🟣 |
| `datamodule.pc_tiling` | Splits dataset tiles into `2^pc_tiling` smaller tiles, based on their principal component. Ideal for varying tile shapes à la S3DIS and KITTI-360. Note this will affect the number of training steps. | 🟡🟣 |
| `datamodule.max_num_nodes` | Limits the number of | 🔴 |
| `datamodule.max_num_edges` | Limits the number of | 🔴 |
| `datamodule.voxel` | Increasing voxel size will reduce preprocessing, training and inference times but will reduce performance. | 🟡🔴🟣 |
| `datamodule.pcp_regularization` | Regularization for partition levels. The larger, the fewer the superpoints. | 🟡🔴🟣 |
| `datamodule.pcp_spatial_weight` | Importance of the 3D position in the partition. The smaller, the fewer the superpoints. | 🟡🔴🟣 |
| `datamodule.pcp_cutoff` | Minimum superpoint size. The larger, the fewer the superpoints. | 🟡🔴🟣 |
| `datamodule.graph_k_max` | Maximum number of adjacent nodes in the superpoint graphs. The smaller, the fewer the superedges. | 🟡🔴🟣 |
| `datamodule.graph_gap` | Maximum distance between adjacent superpoints in the superpoint graphs. The smaller, the fewer the superedges. | 🟡🔴🟣 |
| `datamodule.graph_chunk` | Reduce to avoid OOM when `RadiusHorizontalGraph` preprocesses the superpoint graph. | 🟡 |
| `datamodule.dataloader.batch_size` | Controls the number of loaded tiles. Each train batch is composed of `batch_size * datamodule.sample_graph_k` spherical samplings. Inference is performed on entire validation and test tiles, without spherical sampling. | 🔴🟣 |
| `datamodule.sample_segment_ratio` | Randomly drops a fraction of the superpoints at each partition level. | 🔴 |
| `datamodule.sample_graph_k` | Controls the number of spherical samples in the train batches. | 🔴 |
| `datamodule.sample_graph_r` | Controls the radius of spherical samples in the train batches. Set to `sample_graph_r<=0` to use the entire tile without spherical sampling. | 🔴 |
| `datamodule.sample_point_min` | Controls the minimum number of | 🔴 |
| `datamodule.sample_point_max` | Controls the maximum number of | 🔴 |
| `callbacks.gradient_accumulator.scheduling` | Gradient accumulation. Can be used to train with smaller batches, with more training steps. | 🔴 |
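The tiling and batching rules in the table are simple arithmetic, which the sketch below spells out. The function names are hypothetical and the numeric values are illustrative only; they are not the project's defaults.

```python
def tiles_after_xy_tiling(xy_tiling: int) -> int:
    """Each dataset tile is split into xy_tiling^2 tiles on a regular XY grid."""
    return xy_tiling ** 2


def tiles_after_pc_tiling(pc_tiling: int) -> int:
    """Each dataset tile is split into 2^pc_tiling tiles along principal components."""
    return 2 ** pc_tiling


def spherical_samples_per_train_batch(batch_size: int, sample_graph_k: int) -> int:
    """Each train batch holds batch_size * sample_graph_k spherical samplings."""
    return batch_size * sample_graph_k


# Illustrative values, not the project's defaults
print(tiles_after_xy_tiling(3))                 # → 9
print(tiles_after_pc_tiling(3))                 # → 8
print(spherical_samples_per_train_batch(1, 4))  # → 4
```

Increasing either tiling parameter yields more but smaller tiles, which lowers peak memory at preprocessing and inference time at the cost of more steps per epoch. Lowering `batch_size` or `sample_graph_k` shrinks each training batch.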

If your work uses all or part of the present code, please include the following citations:

```bibtex
@article{robert2023spt,
  title={Efficient 3D Semantic Segmentation with Superpoint Transformer},
  author={Robert, Damien and Raguet, Hugo and Landrieu, Loic},
  journal={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  year={2023}
}

@article{robert2024scalable,
  title={Scalable 3D Panoptic Segmentation as Superpoint Graph Clustering},
  author={Robert, Damien and Raguet, Hugo and Landrieu, Loic},
  journal={Proceedings of the IEEE International Conference on 3D Vision},
  year={2024}
}

@article{geist2025ezsp,
  title={EZ-SP: Fast and Lightweight Superpoint-Based 3D Segmentation},
  author={Geist, Louis and Landrieu, Loic and Robert, Damien},
  journal={arXiv},
  year={2025}
}
```

📄 You can find our papers on arXiv:

Also, if you ❤️ or simply use this project, don't forget to give the repository a ⭐, it means a lot to us!

- This project was built using the Lightning-Hydra template.

- The main data structures of this work rely on PyTorch Geometric.

- Some point cloud operations were inspired by the Torch-Points3D framework, although not merged with the official project at this point.

- For the KITTI-360 dataset, some code from the official KITTI-360 was used.

- Some superpoint-graph-related operations were inspired by Superpoint Graph.

- Parallel Cut-Pursuit was used in SPT and SPC to compute the hierarchical superpoint partition and graph clustering. Note that this step is replaced by our GPU-based algorithm in EZ-SP.

This project has greatly benefited from the support of Romain Janvier. This collaboration was made possible thanks to the 3DFin project. 3DFin has been developed at the Centre of Wildfire Research of Swansea University (UK) in collaboration with the Research Institute of Biodiversity (CSIC, Spain) and the Department of Mining Exploitation of the University of Oviedo (Spain). Funding provided by the UK NERC project (NE/T001194/1): Advancing 3D Fuel Mapping for Wildfire Behaviour and Risk Mitigation Modelling and by the Spanish Knowledge Generation project (PID2021-126790NB-I00): Advancing carbon emission estimations from wildfires applying artificial intelligence to 3D terrestrial point clouds.

This project has also benefited from contributions by Louis Geist, whose work was funded by the PEPR IA SHARP.
