
A flexible, adaptive classification system for dynamic text classification


🚀 Dynamic text classification with continuous learning, strategic defense, and zero-downtime adaptation

- 📚 HuggingFace Organization: pre-trained models and datasets

- 📖 Articles & Tutorials

Adaptive Classifier is a PyTorch-based machine learning library that adds continuous learning, dynamic class addition, and strategic defense against adversarial inputs to text classification. Built on HuggingFace transformers, it enables zero-downtime model updates and robust predictions in production.

- 🚀 Universal Compatibility - Works with any HuggingFace transformer model

- ⚡ Optimized Inference - Built-in ONNX Runtime for 2-4x faster CPU predictions

- 📈 Continuous Learning - Add new examples without catastrophic forgetting

- 🔄 Dynamic Classes - Add new classes at runtime without retraining

- ⏱️ Zero Downtime - Update models in production without service interruption

- 🎮 Strategic Classification - Game-theoretic defense against adversarial manipulation

- 🔒 Anti-Gaming Protection - Robust predictions under strategic behavior

- ⚖️ Multiple Prediction Modes - Regular, strategic, and robust inference options

- 💾 Prototype Memory - FAISS-powered efficient similarity search

- 🔬 Adaptive Neural Layer - Trainable classification head with EWC protection

- 🎯 Hybrid Predictions - Combines prototype similarity and neural network outputs

- 📊 HuggingFace Integration - Push/pull models directly from the Hub

Tested on adversarial examples from the AI-Secure/adv_glue dataset:

| Metric | Regular Classifier | Strategic Classifier | Improvement |
|---|---|---|---|
| Clean Data Accuracy | 80.00% | 82.22% | +2.22% |
| Adversarial Data Accuracy | 60.00% | 82.22% | +22.22% |
| Robustness (vs attack) | -20.00% drop | 0.00% drop | Perfect |

Evaluated on the RAGTruth benchmark across multiple task types:

| Task Type | Precision | Recall | F1 Score |
|---|---|---|---|
| QA | 35.50% | 45.11% | 39.74% |
| Summarization | 22.18% | 96.91% | 36.09% |
| Data-to-Text | 65.00% | 100.0% | 78.79% |
| Overall (macro average) | 40.89% | 80.68% | 51.54% |
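As a quick consistency check on the RAGTruth table above, the sketch below recomputes each task's F1 as the harmonic mean of its precision and recall, and shows that the Overall row matches an unweighted (macro) average over the three task types. Differences of about 0.01 come from the table's values already being rounded. The snippet uses only the numbers in the table.

```python
# Per-task RAGTruth results copied from the table above (percent).
results = {
    "QA": (35.50, 45.11),
    "Summarization": (22.18, 96.91),
    "Data-to-Text": (65.00, 100.0),
}
reported_f1 = {"QA": 39.74, "Summarization": 36.09, "Data-to-Text": 78.79}


def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def mean(values):
    values = list(values)
    return sum(values) / len(values)


# Recompute each task's F1 from its (rounded) precision and recall.
for task, (p, r) in results.items():
    print(f"{task}: F1 = {f1(p, r):.2f} (reported {reported_f1[task]})")

# Macro averages: unweighted mean over the three task types.
macro_p = mean(p for p, _ in results.values())
macro_r = mean(r for _, r in results.values())
macro_f1 = mean(reported_f1.values())
print(f"Macro P = {macro_p:.2f}, R = {macro_r:.2f}, F1 = {macro_f1:.2f}")

# For contrast: the F1 of the averaged precision and recall is a different number.
print(f"F1 of macro P/R = {f1(macro_p, macro_r):.2f}")
```

The F1 computed from the averaged precision and recall (about 54%) is higher than the macro-averaged F1, which is why the Overall F1 is not the harmonic mean of the Overall precision and recall.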

Tested on the arena-hard-auto-v0.1 dataset (500 queries):

| Metric | Without Adaptation | With Adaptation | Improvement |
|---|---|---|---|
| Cost Savings | 25.60% | 32.40% | +6.80% |
| Efficiency Ratio | 1.00x | 1.27x | +27% |
| Resource Utilization | Standard | Optimized | Better |

Key insight: in these benchmarks, adaptation improved robustness to adversarial inputs (with no loss of clean-data accuracy) and the cost efficiency of LLM routing.

```bash
pip install adaptive-classifier
```

Includes: ONNX Runtime for 2-4x faster CPU inference out-of-the-box

```bash
# Clone the repository
git clone https://github.com/codelion/adaptive-classifier.git
cd adaptive-classifier

# Install in development mode
pip install -e .

# Install test dependencies (optional)
pip install pytest pytest-cov pytest-randomly
```

Get started with adaptive classification in under 30 seconds:

```python
from adaptive_classifier import AdaptiveClassifier

# 🎯 Step 1: Initialize with any HuggingFace model
classifier = AdaptiveClassifier("bert-base-uncased")

# 📝 Step 2: Add training examples
texts = ["The product works great!", "Terrible experience", "Neutral about this purchase"]
labels = ["positive", "negative", "neutral"]
classifier.add_examples(texts, labels)

# 🔮 Step 3: Make predictions
predictions = classifier.predict("This is amazing!")
print(predictions)
# Output: [('positive', 0.85), ('neutral', 0.12), ('negative', 0.03)]
```

Classify texts into multiple categories simultaneously with automatic threshold adaptation:

```python
from adaptive_classifier import MultiLabelAdaptiveClassifier

# Initialize multi-label classifier
classifier = MultiLabelAdaptiveClassifier(
    "bert-base-uncased",
    min_predictions=1,  # Ensure at least 1 prediction
    max_predictions=5,  # Limit to top 5 predictions
)

# Multi-label training data (each text can have multiple labels)
texts = [
    "AI researchers study climate change using machine learning",
    "Tech startup develops healthcare solutions",
]
labels = [
    ["technology", "science", "climate", "ai"],
    ["technology", "business", "healthcare"],
]
classifier.add_examples(texts, labels)

# Make multi-label predictions
predictions = classifier.predict_multilabel("Medical AI breakthrough announced")
# Output: [('healthcare', 0.72), ('technology', 0.68), ('ai', 0.45)]
```

```python
# Save locally
classifier.save("./my_classifier")
loaded_classifier = AdaptiveClassifier.load("./my_classifier")

# 🤗 HuggingFace Hub Integration
classifier.push_to_hub("adaptive-classifier/my-model")
hub_classifier = AdaptiveClassifier.from_pretrained("adaptive-classifier/my-model")
```

```python
# Enable strategic classification for adversarial robustness
config = {'enable_strategic_mode': True}
strategic_classifier = AdaptiveClassifier("bert-base-uncased", config=config)

# Train first (texts/labels as in the quick start above)
strategic_classifier.add_examples(texts, labels)

# Robust predictions against manipulation
predictions = strategic_classifier.predict("This product has amazing quality features!")
# Returns predictions that consider potential gaming attempts
```

Adaptive Classifier includes built-in ONNX Runtime support for 2-4x faster CPU inference, with no code changes required.

ONNX Runtime is automatically used on CPU for optimal performance:

```python
# Automatically uses ONNX on CPU, PyTorch on GPU
classifier = AdaptiveClassifier("bert-base-uncased")

# That's it! Predictions are 2-4x faster on CPU
predictions = classifier.predict("Fast inference!")
```

| Configuration | Speed | Use Case |
|---|---|---|
| PyTorch (GPU) | Fastest | GPU servers |
| ONNX (CPU) | 2-4x faster than PyTorch (CPU) | Production CPU deployments |
| PyTorch (CPU) | Baseline | Development, training |

```python
# Save with ONNX export (both quantized & unquantized versions)
classifier.save("./model")

# Push to Hub with ONNX (both versions included by default)
classifier.push_to_hub("username/model")

# Load automatically uses quantized ONNX on CPU (fastest, 4x smaller)
fast_classifier = AdaptiveClassifier.load("./model")

# Choose unquantized ONNX for maximum accuracy
accurate_classifier = AdaptiveClassifier.load("./model", prefer_quantized=False)

# Force PyTorch (no ONNX)
pytorch_classifier = AdaptiveClassifier.load("./model", use_onnx=False)

# Opt out of ONNX export when saving
classifier.save("./model", include_onnx=False)
```

ONNX Model Versions:

- Quantized (default): INT8 quantized, 4x smaller, ~1.14x faster on ARM, 2-4x faster on x86

- Unquantized: Full precision, maximum accuracy, larger file size

By default, models are saved with both versions, and the quantized version is loaded automatically for best performance. Use `prefer_quantized=False` if you need maximum accuracy.
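The "4x smaller" figure for the quantized model follows from storing each weight in 1 byte (INT8) instead of 4 bytes (float32). The toy sketch below illustrates symmetric per-tensor INT8 quantization on a handful of made-up weights. It is not the library's export code, and ONNX Runtime's quantization tools support other schemes (for example per-channel or asymmetric) that are not described here.

```python
from array import array

# Toy float32 weights standing in for one layer of a model (illustrative values).
weights = array("f", [0.12, -0.98, 0.45, 0.003, -0.31, 0.77, -0.05, 0.6])


def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: q = round(x / scale)."""
    scale = max(abs(v) for v in values) / 127  # map the largest magnitude to 127
    q = array("b", (max(-127, min(127, round(v / scale))) for v in values))
    return q, scale


def dequantize(q, scale):
    """Map INT8 codes back to approximate real values."""
    return [v * scale for v in q]


q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

fp32_bytes = len(weights) * weights.itemsize  # 4 bytes per float32
int8_bytes = len(q) * q.itemsize              # 1 byte per int8
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"float32: {fp32_bytes} B, int8: {int8_bytes} B ({fp32_bytes // int8_bytes}x smaller)")
print(f"max reconstruction error: {max_error:.4f} (bounded by scale/2 = {scale / 2:.4f})")
```

The rounding error per weight is at most half a quantization step, which is the precision that `prefer_quantized=False` trades back for a larger file.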

```bash
# Compare PyTorch vs ONNX performance
python scripts/benchmark_onnx.py --model bert-base-uncased --runs 100
```

Example results:

```
Model: bert-base-uncased (CPU)
PyTorch: 8.3ms/query (baseline)
ONNX: 2.1ms/query (4.0x faster) ✓
```

Note: ONNX optimization is included by default. For GPU inference, PyTorch is used automatically for best performance.

```python
# Add a completely new class
new_texts = ["Error code 404 appeared", "System crashed after update"]
new_labels = ["technical"] * 2
classifier.add_examples(new_texts, new_labels)
```

```python
# Add more examples to existing classes
more_examples = ["Best purchase ever!", "Highly recommend this"]
more_labels = ["positive"] * 2
classifier.add_examples(more_examples, more_labels)
```

```python
from adaptive_classifier import MultiLabelAdaptiveClassifier

# Configure advanced multi-label settings
classifier = MultiLabelAdaptiveClassifier(
    "bert-base-uncased",
    default_threshold=0.5,  # Base threshold for predictions
    min_predictions=1,      # Minimum labels to return
    max_predictions=10,     # Maximum labels to return
)

# Training with diverse multi-label examples
texts = [
    "Scientists develop AI for medical diagnosis and climate research",
    "Tech company launches sustainable energy and healthcare products",
    "Olympic athletes use sports science and nutrition technology",
]
labels = [
    ["science", "ai", "healthcare", "research"],
    ["technology", "business", "environment", "healthcare"],
    ["sports", "science", "health", "technology"],
]
classifier.add_examples(texts, labels)

# Advanced prediction options
predictions = classifier.predict_multilabel(
    "New research on AI applications in environmental science",
    threshold=0.3,  # Custom threshold
    max_labels=5,   # Limit results
)

# Get detailed statistics
stats = classifier.get_label_statistics()
print(f"Adaptive threshold: {stats['adaptive_threshold']}")
print(f"Label-specific thresholds: {stats['label_thresholds']}")
```

```python
# Enable strategic mode to defend against adversarial inputs
config = {
    'enable_strategic_mode': True,
    'cost_function_type': 'linear',
    'cost_coefficients': {
        'sentiment_words': 0.5,    # Cost to change sentiment-bearing words
        'length_change': 0.1,      # Cost to modify text length
        'word_substitution': 0.3,  # Cost to substitute words
    },
    'strategic_blend_regular_weight': 0.6,    # Weight for regular predictions
    'strategic_blend_strategic_weight': 0.4,  # Weight for strategic predictions
}

classifier = AdaptiveClassifier("bert-base-uncased", config=config)
# texts/labels: single-label training data, as in the quick start
classifier.add_examples(texts, labels)

# Robust predictions that consider potential manipulation
text = "This product has amazing quality features!"

# Dual prediction (automatic blend of regular + strategic)
predictions = classifier.predict(text)

# Pure strategic prediction (simulates adversarial manipulation)
strategic_preds = classifier.predict_strategic(text)

# Robust prediction (assumes input may already be manipulated)
robust_preds = classifier.predict_robust(text)

print(f"Dual: {predictions}")
print(f"Strategic: {strategic_preds}")
print(f"Robust: {robust_preds}")
```
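The two `strategic_blend_*` weights in the config above suggest that the dual prediction mixes regular and strategic scores. The sketch below assumes the simplest form of that idea, a per-label weighted sum of two `(label, score)` lists. The library's actual combination may differ, the function name is hypothetical, and the example scores are made up.

```python
def blend_predictions(regular, strategic, regular_weight=0.6, strategic_weight=0.4):
    """Hypothetical per-label weighted blend of two lists of (label, score) pairs."""
    reg = dict(regular)
    strat = dict(strategic)
    labels = set(reg) | set(strat)
    blended = {
        label: regular_weight * reg.get(label, 0.0) + strategic_weight * strat.get(label, 0.0)
        for label in labels
    }
    # Highest score first, matching the (label, score) list format used by predict().
    return sorted(blended.items(), key=lambda item: item[1], reverse=True)


# Hypothetical scores for one input.
regular = [("positive", 0.90), ("negative", 0.10)]
strategic = [("positive", 0.40), ("negative", 0.60)]
print(blend_predictions(regular, strategic))
```

With the 0.6/0.4 weights, a confident regular score of 0.90 for `positive` is pulled down to 0.70 when the strategic view is less convinced, which is the kind of hedging a blended mode provides.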

The `MultiLabelAdaptiveClassifier` extends adaptive classification to texts that can belong to several categories at once. It adapts prediction thresholds automatically when the number of labels is large.

- 🎯 Automatic Threshold Adaptation: Dynamically adjusts thresholds based on the number of labels to prevent empty predictions

- 📊 Sigmoid Activation: Uses proper multi-label architecture with BCE loss instead of softmax

- ⚙️ Configurable Limits: Set minimum and maximum number of predictions per input

- 📈 Label-Specific Thresholds: Automatically adjusts thresholds based on label frequency

- 🔄 Incremental Learning: Add new labels and examples without retraining from scratch
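The difference between sigmoid and softmax outputs, and the role of `min_predictions` and `max_predictions`, can be sketched in a few lines. This is an illustration under stated assumptions, not the library's implementation: the logits are hypothetical, `select_labels` is a made-up helper, and the min/max limits are assumed to apply after thresholding, as the parameter comments in the examples above describe.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def select_labels(logits, threshold=0.5, min_predictions=1, max_predictions=5):
    """Pick labels from independent sigmoid scores, honoring min/max counts."""
    scores = sorted(((label, sigmoid(z)) for label, z in logits.items()),
                    key=lambda item: item[1], reverse=True)
    chosen = [(label, s) for label, s in scores if s >= threshold]
    if len(chosen) < min_predictions:
        # Fall back to the top-scoring labels so the result is never empty.
        chosen = scores[:min_predictions]
    return chosen[:max_predictions]


# Hypothetical logits from a multi-label head (one independent output per label).
logits = {"technology": 1.7, "healthcare": 1.0, "business": -0.2, "sports": -2.5}
print(select_labels(logits))                 # technology and healthcare pass 0.5
print(select_labels(logits, threshold=0.9))  # nothing passes, so min_predictions applies
```

Because each label gets its own sigmoid, the scores need not sum to 1 (here they sum to about 2.1), so several labels can pass the threshold at once.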

```python
from adaptive_classifier import MultiLabelAdaptiveClassifier

# Initialize with configuration
classifier = MultiLabelAdaptiveClassifier(
    "distilbert/distilbert-base-cased",
    default_threshold=0.5,
    min_predictions=1,
    max_predictions=5,
)

# Multi-label training data
texts = [
    "Breaking: Scientists discover AI can help predict climate change patterns",
    "Tech giant announces breakthrough in quantum computing for healthcare",
    "Olympic committee adopts new sports technology for athlete performance",
]
labels = [
    ["science", "technology", "climate", "news"],
    ["technology", "healthcare", "quantum", "business"],
    ["sports", "technology", "performance", "news"],
]

# Train the classifier
classifier.add_examples(texts, labels)

# Make predictions
predictions = classifier.predict_multilabel("Revolutionary medical AI system launched by tech startup")
# Results: [('technology', 0.85), ('healthcare', 0.72), ('business', 0.45)]
```

The classifier automatically adjusts prediction thresholds based on the number of labels:

| Number of Labels | Threshold | Benefit |
|---|---|---|
| 2-4 labels | 0.5 (default) | Standard precision |
| 5-9 labels | 0.4 (20% lower) | Balanced recall |
| 10-19 labels | 0.3 (40% lower) | Better coverage |
| 20-29 labels | 0.2 (60% lower) | Prevents empty results |
| 30+ labels | 0.1 (80% lower) | Ensures predictions |

This solves the common "No labels met the threshold criteria" issue when dealing with many-label scenarios.
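The schedule in the table above can be written as a scaling of the default threshold. The function below reproduces the table's values for the default of 0.5. That the percentages scale other defaults the same way is an assumption, and `adaptive_threshold` is a hypothetical name, not part of the library's API.

```python
def adaptive_threshold(num_labels: int, default_threshold: float = 0.5) -> float:
    """Scale the default threshold down as the label count grows (mirrors the table)."""
    if num_labels >= 30:
        factor = 0.2  # 80% lower
    elif num_labels >= 20:
        factor = 0.4  # 60% lower
    elif num_labels >= 10:
        factor = 0.6  # 40% lower
    elif num_labels >= 5:
        factor = 0.8  # 20% lower
    else:
        factor = 1.0  # 2-4 labels: keep the default
    return default_threshold * factor


for n in (3, 7, 15, 25, 40):
    print(f"{n:>2} labels -> threshold {adaptive_threshold(n):.1f}")
```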

Detect when LLMs generate information not supported by provided context (51.54% F1, 80.68% recall):

```python
detector = AdaptiveClassifier.from_pretrained("adaptive-classifier/llm-hallucination-detector")

context = "France is in Western Europe. Capital: Paris. Population: ~67 million."
response = "Paris is the capital. Population is 70 million."  # Contains hallucination

prediction = detector.predict(f"Context: {context}\nAnswer: {response}")
# Returns: [('HALLUCINATED', 0.72), ('NOT_HALLUCINATED', 0.28)]
```

Optimize costs by routing queries to appropriate model tiers (32.40% cost savings):

```python
router = AdaptiveClassifier.from_pretrained("adaptive-classifier/llm-router")

query = "Write a function to calculate Fibonacci sequence"
predictions = router.predict(query)
# Returns: [('HIGH', 0.92), ('LOW', 0.08)]
# Route to GPT-4 for complex tasks, GPT-3.5 for simple ones
```
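The router only returns label scores; mapping them to a model is left to the application. The sketch below shows one conservative policy: send a query to the cheaper tier only when the router is confident it is `LOW`. The model identifiers, the `choose_model` helper, and the 0.7 cut-off are placeholders, not library defaults.

```python
# Placeholder model identifiers, following the GPT-4 / GPT-3.5 comment above.
STRONG_MODEL = "gpt-4"
CHEAP_MODEL = "gpt-3.5-turbo"


def choose_model(predictions, low_confidence=0.7):
    """Send a query to the cheap tier only when the router is confident it is LOW.

    predictions: list of (label, score) pairs as returned by router.predict().
    low_confidence: hypothetical cut-off; tune it on your own traffic.
    """
    scores = dict(predictions)
    if scores.get("LOW", 0.0) >= low_confidence:
        return CHEAP_MODEL
    return STRONG_MODEL


print(choose_model([("HIGH", 0.92), ("LOW", 0.08)]))  # gpt-4
print(choose_model([("LOW", 0.85), ("HIGH", 0.15)]))  # gpt-3.5-turbo
```

A higher cut-off sends more traffic to the stronger model, trading some of the cost savings for quality.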

Automatically predict optimal LLM settings (temperature, top_p) for different query types:

```python
config_optimizer = AdaptiveClassifier.from_pretrained("adaptive-classifier/llm-config-optimizer")

query = "Explain quantum physics concepts"
predictions = config_optimizer.predict(query)
# Returns: [('BALANCED', 0.85), ('CREATIVE', 0.10), ...]
# Automatically suggests temperature range: 0.6-1.0 for balanced responses
```

Deploy enterprise-ready classifiers for tasks ranging from content moderation to PII detection and compliance:

```python
# Available pre-trained enterprise classifiers:
classifiers = [
    "adaptive-classifier/content-moderation",         # Content safety
    "adaptive-classifier/business-sentiment",         # Business communications
    "adaptive-classifier/pii-detection",              # Privacy protection
    "adaptive-classifier/fraud-detection",            # Financial security
    "adaptive-classifier/email-priority",             # Email routing
    "adaptive-classifier/compliance-classification",  # Regulatory compliance
]

# Easy deployment
moderator = AdaptiveClassifier.from_pretrained("adaptive-classifier/content-moderation")
result = moderator.predict("User generated content here...")
```

💡 Pro Tip: All enterprise models support continuous adaptation - add your domain-specific examples to improve performance over time.

The Adaptive Classifier combines four key components in a unified architecture:

Transformer Embeddings: Uses state-of-the-art language models for text representation

Prototype Memory: Maintains class prototypes for quick adaptation to new examples

Adaptive Neural Layer: Learns refined decision boundaries through continuous training

Strategic Classification: Defends against adversarial manipulation using game-theoretic principles. When strategic mode is enabled, the system:

- Models potential strategic behavior of users trying to game the classifier

- Uses cost functions to represent the difficulty of manipulating different features

- Combines regular predictions with strategic-aware predictions for robustness

- Provides multiple prediction modes: dual (blended), strategic (simulates manipulation), and robust (anti-manipulation)
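In strategic classification, a user is modeled as choosing the modification that maximizes their benefit minus the cost of making it, so gaming is only expected when that difference is positive. The sketch below applies that rule with the linear cost coefficients from the strategic-mode config example. The edit names, amounts, and payoffs are hypothetical, and how the library extracts such features from text is not shown.

```python
# Coefficients copied from the strategic-mode config example.
COST_COEFFICIENTS = {
    "sentiment_words": 0.5,
    "length_change": 0.1,
    "word_substitution": 0.3,
}


def linear_cost(edit):
    """Linear cost: sum over edit types of coefficient * amount of that edit."""
    return sum(COST_COEFFICIENTS[kind] * amount for kind, amount in edit.items())


def best_response(candidate_edits, benefit):
    """Return the edit with the largest (benefit - cost), or None if none is profitable.

    candidate_edits: name -> {edit_type: amount}
    benefit: name -> how much the user gains if that edit flips the prediction
    """
    best_name, best_utility = None, 0.0
    for name, edit in candidate_edits.items():
        utility = benefit[name] - linear_cost(edit)
        if utility > best_utility:
            best_name, best_utility = name, utility
    return best_name


# Hypothetical manipulations of a product review and their (made-up) payoffs.
edits = {
    "add_two_sentiment_words": {"sentiment_words": 2},  # cost 1.0
    "swap_one_word": {"word_substitution": 1},          # cost 0.3
}
print(best_response(edits, {"add_two_sentiment_words": 0.8, "swap_one_word": 0.2}))  # None
print(best_response(edits, {"add_two_sentiment_words": 1.5, "swap_one_word": 0.2}))  # add_two_sentiment_words
```

Raising a coefficient makes the corresponding manipulation less attractive, which is how the cost function encodes which features are hard to game.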

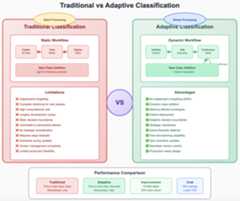

Traditional classification approaches face significant limitations when requirements evolve or the environment is adversarial.

The Adaptive Classifier overcomes these limitations through:

- Dynamic class addition without full retraining

- Strategic robustness against adversarial manipulation

- Memory-efficient prototypes with FAISS optimization

- Zero downtime updates for production systems

- Game-theoretic defense mechanisms

The system evolves through distinct phases, each building on previous knowledge without catastrophic forgetting.

The learning process includes:

- Initial Training: Bootstrap with basic classes

- Dynamic Addition: Seamlessly add new classes as they emerge

- Continuous Learning: Refine decision boundaries with EWC protection

- Strategic Enhancement: Develop robustness against manipulation

- Production Deployment: Full capability with ongoing adaptation
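EWC (Elastic Weight Consolidation, from "Overcoming catastrophic forgetting in neural networks" in the references) protects earlier learning by penalizing changes to each parameter in proportion to its estimated importance, the diagonal Fisher information. A minimal sketch of the standard penalty term follows. The regularization strength `lam` and the toy values are hypothetical, and how the library estimates the Fisher values is not shown here.

```python
def ewc_penalty(params, anchor_params, fisher, lam=1.0):
    """EWC penalty: (lam / 2) * sum_i F_i * (theta_i - theta_star_i) ** 2.

    params:        current parameters theta
    anchor_params: parameters theta_star saved after the earlier task
    fisher:        diagonal Fisher information F_i (importance of each parameter)
    lam:           regularization strength (hypothetical default)
    """
    return 0.5 * lam * sum(
        f * (p - a) ** 2 for p, a, f in zip(params, anchor_params, fisher)
    )


anchor = [1.0, -0.5, 2.0]
fisher = [10.0, 0.1, 0.0]  # first parameter matters most for the old task
moved = [1.2, 0.5, 3.0]    # small, medium and large moves respectively

penalty = ewc_penalty(moved, anchor, fisher)
print(f"EWC penalty: {penalty:.3f}")
# The total training loss on new examples would be: new_task_loss + penalty
```

Moving the first parameter by 0.2 costs more than moving the second by 1.0, and the third parameter, with zero Fisher information, is free to change.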

When using the adaptive classifier for true online learning (adding examples incrementally), be aware that the order in which examples are added can affect predictions. This is inherent to incremental neural network training.

```python
# These two scenarios may produce slightly different models:

# Scenario 1
classifier.add_examples(["fish example"], ["aquatic"])
classifier.add_examples(["bird example"], ["aerial"])

# Scenario 2
classifier.add_examples(["bird example"], ["aerial"])
classifier.add_examples(["fish example"], ["aquatic"])
```

While we've implemented sorted label ID assignment to minimize this effect, the neural network component still learns incrementally, which can lead to order-dependent behavior.

For applications requiring strict order independence, you can configure the classifier to rely solely on prototype-based predictions:

```python
# Configure to use only prototypes (order-independent)
config = {
    'prototype_weight': 1.0,  # Use only prototypes
    'neural_weight': 0.0,     # Disable neural network contribution
}
classifier = AdaptiveClassifier("bert-base-uncased", config=config)
```

With this configuration:

- Predictions are based solely on similarity to class prototypes (mean embeddings)

- Results are completely order-independent

- Trade-off: May have slightly lower accuracy than the hybrid approach
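The order independence of prototype-only mode comes from each prototype being a mean, and a mean does not depend on the order its inputs arrive in. The sketch below contrasts mean prototypes with an exponential moving average, used here only as a simple stand-in for order-dependent incremental training (it is not what the library's neural layer does). The 2-D vectors are hypothetical embeddings, and cosine similarity is an assumption: the library uses FAISS for similarity search, and its exact metric is not stated here.

```python
import math


def mean_vector(vectors):
    """Component-wise mean; math.fsum is exactly rounded, so input order cannot change it."""
    n = len(vectors)
    return [math.fsum(component) / n for component in zip(*vectors)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def build_prototypes(examples):
    """examples: list of (embedding, label). Each prototype is its class's mean embedding."""
    grouped = {}
    for embedding, label in examples:
        grouped.setdefault(label, []).append(embedding)
    return {label: mean_vector(vectors) for label, vectors in grouped.items()}


def predict(prototypes, embedding):
    """Return the label whose prototype is most cosine-similar to the embedding."""
    return max(prototypes, key=lambda label: cosine(embedding, prototypes[label]))


def ema_update(vectors, alpha=0.3):
    """Order-DEPENDENT stand-in for incremental training: an exponential moving average."""
    state = list(vectors[0])
    for v in vectors[1:]:
        state = [(1 - alpha) * s + alpha * x for s, x in zip(state, v)]
    return state


# Hypothetical 2-D "embeddings" for the fish/bird example above.
examples = [
    ([1.0, 0.1], "aquatic"),
    ([0.9, 0.3], "aquatic"),
    ([0.1, 1.0], "aerial"),
    ([0.2, 0.8], "aerial"),
]

forward = build_prototypes(examples)
backward = build_prototypes(list(reversed(examples)))
print(forward == backward)            # True: same prototypes in either order
print(predict(forward, [0.95, 0.2]))  # aquatic

aquatic = [e for e, label in examples if label == "aquatic"]
print(ema_update(aquatic) == ema_update(aquatic[::-1]))  # False: order matters
```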

- For maximum consistency: Use prototype-only configuration

- For maximum accuracy: Accept some order dependency with the default hybrid approach

- For production systems: Consider batching updates and retraining periodically if strict consistency is required

- Model selection matters: some models (e.g., `google-bert/bert-large-cased`) may produce poor embeddings for single words. For better results with short inputs, consider:
  - `bert-base-uncased`
  - `sentence-transformers/all-MiniLM-L6-v2`
  - Or any model specifically trained for semantic similarity

- OpenEvolve - Open-source evolutionary coding agent for algorithm discovery

- OptiLLM - Optimizing inference proxy with 20+ techniques for 2-10x accuracy improvements

- 🐛 Issues & Bug Reports: GitHub Issues

- 💬 Discussions: GitHub Discussions

- 📖 Documentation: API Reference

- 🛠️ Contributing: CONTRIBUTING.md

- Strategic Classification

- RouteLLM: Learning to Route LLMs with Preference Data

- Transformer^2: Self-adaptive LLMs

- Lamini Classifier Agent Toolkit

- Protoformer: Embedding Prototypes for Transformers

- Overcoming catastrophic forgetting in neural networks

- RAGTruth: A Hallucination Corpus for Developing Trustworthy Retrieval-Augmented Language Models

- LettuceDetect: A Hallucination Detection Framework for RAG Applications

If you use this library in your research, please cite:

```bibtex
@software{adaptive-classifier,
  title = {Adaptive Classifier: Dynamic Text Classification with Continuous Learning},
  author = {Asankhaya Sharma},
  year = {2025},
  publisher = {GitHub},
  url = {https://github.com/codelion/adaptive-classifier}
}
```

Made with ❤️ by the Adaptive Classifier Team
