Deep Reinforcement Learning based autonomous navigation for quadcopters using PPO algorithm.

bilalkabas/PPO-based-Autonomous-Navigation-for-Quadcopters

This repository contains an implementation of Proximal Policy Optimization (PPO) for autonomous navigation of a quadcopter in a corridor environment. Every 4 meters along the corridor there is a block with a circular opening for the drone to fly through. The agent is expected to navigate through these openings without colliding with the blocks. This project currently runs only on Windows because the Unreal environments were packaged for Windows.

The training environment has 9 sections with different textures and hole positions. The agent starts in a randomly chosen section, at a random point within a specific region of the yz-plane.
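The random start described above can be sketched roughly as follows. This is only an illustration, not the repository's code: the function name `sample_start` and the y/z bounds are hypothetical, since the README does not state the size of the start region.

```python
import random

N_SECTIONS = 9  # number of training sections, as stated in the README

# Hypothetical bounds (in meters) of the start region in the yz-plane.
# The README does not give the real values.
Y_RANGE = (-0.5, 0.5)
Z_RANGE = (-0.5, 0.5)


def sample_start(rng: random.Random) -> tuple:
    """Pick a random section index and a random (y, z) offset inside the region."""
    section = rng.randrange(N_SECTIONS)  # integer in 0..8
    y = rng.uniform(*Y_RANGE)
    z = rng.uniform(*Z_RANGE)
    return section, y, z


# Example: draw one start configuration with a seeded generator.
section, y, z = sample_start(random.Random(0))
```

Passing the generator in explicitly keeps episodes reproducible when a fixed seed is used.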

- The state is an RGB image taken by the agent's front camera.

- Image shape: 50 x 50 x 3

- There are 9 discrete actions.
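As a rough illustration of the state and action spaces listed above, the sketch below records the stated sizes and decodes an action index. The mapping from the 9 actions to a 3 x 3 grid of step directions in the yz-plane is an assumption made for this example; the README only says that there are 9 discrete actions.

```python
from itertools import product

OBS_SHAPE = (50, 50, 3)  # height, width, RGB channels (from the README)
N_ACTIONS = 9            # number of discrete actions (from the README)

# Hypothetical mapping: action index -> (dy, dz) step direction.
# The repository may define its actions differently.
ACTION_TO_DIRECTION = list(product((-1, 0, 1), repeat=2))


def decode_action(action: int) -> tuple:
    """Return the assumed (dy, dz) direction for a discrete action index."""
    if not 0 <= action < N_ACTIONS:
        raise ValueError(f"action must be in 0..{N_ACTIONS - 1}, got {action}")
    return ACTION_TO_DIRECTION[action]


def observation_size(shape: tuple = OBS_SHAPE) -> int:
    """Number of scalar values in one observation."""
    height, width, channels = shape
    return height * width * channels
```

Under this assumed mapping, index 4 corresponds to no lateral movement, and one observation holds 50 x 50 x 3 = 7500 values.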

#️⃣ 1. Clone the repository

    git clone https://github.com/bilalkabas/PPO-based-Autonomous-Navigation-for-Quadcopters

#️⃣ 2. From Anaconda command prompt, create a new conda environment

I recommend using Anaconda or Miniconda to create a virtual environment.

    conda create -n ppo_drone python==3.8

#️⃣ 3. Install required libraries

Inside the main directory of the repo:

    conda activate ppo_drone
    pip install -r requirements.txt

#️⃣ 4. (Optional) Install PyTorch for GPU

You must have a CUDA-capable NVIDIA GPU.

Details for installation

For this project, I used CUDA 11.0 and the following conda installation command to install PyTorch:

    conda install pytorch==1.7.1 torchvision==0.8.2 torchaudio==0.7.2 cudatoolkit=11.0 -c pytorch

#️⃣ 4. Edit `settings.json`

The `settings.json` file is located in the `Documents\AirSim` folder. Its content should be as below:

    {
      "SettingsVersion": 1.2,
      "LocalHostIp": "127.0.0.1",
      "SimMode": "Multirotor",
      "ClockSpeed": 20,
      "ViewMode": "SpringArmChase",
      "Vehicles": {
        "drone0": {
          "VehicleType": "SimpleFlight",
          "X": 0.0,
          "Y": 0.0,
          "Z": 0.0,
          "Yaw": 0.0
        }
      },
      "CameraDefaults": {
        "CaptureSettings": [
          {
            "ImageType": 0,
            "Width": 50,
            "Height": 50,
            "FOV_Degrees": 120
          }
        ]
      }
    }

Make sure you have followed the instructions above to set up the environment.
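If you prefer not to edit the file by hand, the settings given above could be written with a short script. This is a sketch, not part of the repository: `write_settings` is a hypothetical helper, and it assumes the `Documents` folder sits directly under your home directory, which may not hold if it is redirected.

```python
import json
from pathlib import Path

# Settings copied from the README.
SETTINGS = {
    "SettingsVersion": 1.2,
    "LocalHostIp": "127.0.0.1",
    "SimMode": "Multirotor",
    "ClockSpeed": 20,
    "ViewMode": "SpringArmChase",
    "Vehicles": {
        "drone0": {"VehicleType": "SimpleFlight", "X": 0.0, "Y": 0.0, "Z": 0.0, "Yaw": 0.0}
    },
    "CameraDefaults": {
        "CaptureSettings": [
            {"ImageType": 0, "Width": 50, "Height": 50, "FOV_Degrees": 120}
        ]
    },
}


def write_settings(folder: Path) -> Path:
    """Write settings.json into `folder`, creating the folder if needed."""
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / "settings.json"
    path.write_text(json.dumps(SETTINGS, indent=2), encoding="utf-8")
    return path


# Assumed default location; adjust if your Documents folder lives elsewhere:
# write_settings(Path.home() / "Documents" / "AirSim")
```

The call is left commented out so that running the snippet does not overwrite an existing `settings.json` by accident.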

#️⃣ 1. Download the training environment

Go to the releases and download `TrainEnv.zip`. Once the download completes, extract it.

#️⃣ 2. Open the environment's executable file and start the training

Inside the repository, run:

    python main.py

Make sure you have followed the instructions above to set up the environment. To speed up training, the simulation runs at 20x speed. You may want to change the `"ClockSpeed"` parameter in `settings.json` to 1 to run the simulation at normal speed.
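Changing the clock speed only requires updating one key in the existing file. A minimal sketch, assuming `settings.json` is plain JSON without comments; `set_clock_speed` is a hypothetical helper, not something the repository provides.

```python
import json
from pathlib import Path


def set_clock_speed(settings_path: Path, clock_speed: float) -> dict:
    """Update "ClockSpeed" in an existing settings.json and return the new settings."""
    settings = json.loads(settings_path.read_text(encoding="utf-8"))
    settings["ClockSpeed"] = clock_speed  # 20 for fast training, 1 for real time
    settings_path.write_text(json.dumps(settings, indent=2), encoding="utf-8")
    return settings


# Example (path assumed; adjust to where your settings.json actually lives):
# set_clock_speed(Path.home() / "Documents" / "AirSim" / "settings.json", 1)
```

All other keys are preserved, so the camera and vehicle settings stay as configured.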

#️⃣ 1. Download the test environment

Go to the releases and download `TestEnv.zip`. Once the download completes, extract it.

#️⃣ 2. Open the environment's executable file and run the trained model

So, inside the repository

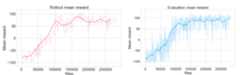

python policy_run.pyThe trained model insaved_policy folder was trained for 280k steps.

The test environment has different textures and hole positions from the training environment. Over 100 episodes, the trained model travels 17.5 m on average and passes through 4 holes on average without a collision. At best, the agent passes through 9 holes in the test environment without a collision.
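The distance and hole counts above can be related through the 4 m block spacing. The sketch below is a rough consistency check only: it assumes the first block sits one full spacing ahead of the start point, which the README does not state, and the function names are made up for this example.

```python
import math

BLOCK_SPACING_M = 4.0  # one block every 4 meters (from the README)


def openings_cleared(distance_m: float, spacing_m: float = BLOCK_SPACING_M) -> int:
    """Number of openings passed after flying `distance_m`, under the assumption above."""
    return math.floor(distance_m / spacing_m)


def min_distance_for(openings: int, spacing_m: float = BLOCK_SPACING_M) -> float:
    """Shortest distance needed to pass `openings` openings, under the same assumption."""
    return openings * spacing_m


print(openings_cleared(17.5))  # 4
print(min_distance_for(9))     # 36.0
```

Under that assumption, 17.5 m corresponds to 4 openings, in line with the reported average, and passing 9 openings requires at least 36 m of flight.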

This project is licensed under the GNU Affero General Public License.
