
JMeter JTL parsing with Logstash for Elasticsearch and InfluxDB ...


anasoid/jmeter-logstash


JMeter JTL and statistics file parsing with Logstash and Elasticsearch. The image is available on Docker Hub. The statistics.json file is generated by the JMeter HTML report.

- Where to get help:

- Parse standard JTL files (CSV format).

- Filter requests with regular expressions (include and exclude filters).

- Flag samplers generated by a TransactionController based on a regex (by default `_.+_`).

- For TransactionControllers, count the failing samplers and the total number of samplers.

- Add a relative time to compare different executions; the variable TESTSTART.MS must be logged with the property sample_variables.

- Add project name, test name, environment and execution ID to organize results and compare different executions.

- Split the label into multiple tags per request (split on '/' by default).

- Flag subresults: when there is a redirection (302, ...), each subrequest has a suffix like "-xx", where xx is the order number.

- Supports Elasticsearch and can be adapted for other tools.

- Can also index custom fields logged in the file with the property sample_variables.
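As a rough illustration of the include/exclude filtering, here is a Python sketch. The function name keep_sample is hypothetical, and its semantics are assumptions rather than the pipeline's confirmed behavior: the regexes are applied to the sampler label with a partial match (re.search), the include regex (PARSE_FILTER_INCLUDE_SAMPLER_REGEX) must match when it is set, and the exclude regex (PARSE_FILTER_EXCLUDE_SAMPLER_REGEX) must not match when it is set.

```python
import re
from typing import Optional

def keep_sample(label: str,
                include_regex: Optional[str] = None,
                exclude_regex: Optional[str] = None) -> bool:
    """Hypothetical filter: keep a sample if it matches the include regex
    (when given) and does not match the exclude regex (when given).
    re.search means a partial match anywhere in the label (an assumption)."""
    if include_regex and not re.search(include_regex, label):
        return False
    if exclude_regex and re.search(exclude_regex, label):
        return False
    return True

labels = ["_Login_", "/api/login", "/api/login-0", "/static/logo.png"]
print([l for l in labels if keep_sample(l, include_regex=r"^/api/", exclude_regex=r"-\d+$")])
# prints ['/api/login']
```

With no regex set, every sample is kept, which matches the empty defaults in the configuration table.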

- 1. jmeter-logstash

- 2. Quick reference

- 3. Getting Started

- 4. Troubleshooting & Limitation

The jmeter-logstash images come in many flavors, each designed for a specific use case. Image versions are based on the components used to build the image; the default uses the Elasticsearch output:

- Logstash version: 7.17.9 (default for 7.17).

- Create an optional Docker network (called jmeter). If you don't use it, remove "--net jmeter" from all of the following docker commands and adapt the Elasticsearch URL.

```shell
docker network create jmeter
```

- Start an Elasticsearch container, or use any Elasticsearch instance you already have installed.

```shell
docker run --name jmeter-elastic --net jmeter \
  -p 9200:9200 -p 9300:9300 \
  -e "ES_JAVA_OPTS=-Xms1024m -Xmx1024m" \
  -e "xpack.security.enabled=false" \
  -e "discovery.type=single-node" \
  docker.elastic.co/elasticsearch/elasticsearch:8.4.1
```

- Start Kibana and connect it to Elasticsearch using the environment variable ELASTICSEARCH_HOSTS.

```shell
docker run --name jmeter-kibana --net jmeter -p 5601:5601 \
  -e "ELASTICSEARCH_HOSTS=http://jmeter-elastic:9200" \
  docker.elastic.co/kibana/kibana:8.4.1
```

- In the project folder, create a folder named 'input', or use any input folder on your machine.

- If you use a different input folder, replace "${PWD}/input" in the following command with your input folder.

```shell
# Run image
docker run --rm -it --net jmeter \
  -e "ELASTICSEARCH_HOSTS=http://jmeter-elastic:9200" \
  -v ${PWD}/input:/input/ \
  anasoid/jmeter-logstash
```

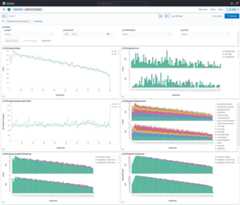

Download the dashboards from Kibana Dashboards, then import them in Kibana under Stack Management -> Saved Objects.

[Dashboard screenshots: Main Dashboard, Compare Dashboard]

- To exit after all files are parsed, use (-e "FILE_EXIT_AFTER_READ=true") together with (-e "FILE_READ_MODE=read").

- To keep the Logstash container after execution, omit --rm from the arguments.

- Logstash stores the position of the last line parsed in each file in a sincedb file. By default this file is in the path /usr/share/logstash/data/plugins/inputs/file. If you reuse the same container, this file persists even when you restart the Logstash container; to keep it after the container is removed, mount a volume folder at that path (/usr/share/logstash/data/plugins/inputs/file).

- To get a relative time for comparison, the test start time must be logged: set it in the user.properties file (sample_variables=TESTSTART.MS,...), add properties from a file with the -q argument, or pass it directly on the command line (-Jsample_variables=TESTSTART.MS,..). See Full list of command-line options.
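The two requirements above (log TESTSTART.MS and name files so that test name, environment and execution ID can be extracted) can be combined when launching JMeter. The Python sketch below only builds the argument list; the helper jmeter_command and the example file names are hypothetical, while -n (non-GUI), -t (test plan), -l (results file) and -J (define a JMeter property) are standard JMeter command-line options. It assumes none of the names contain '-', since that character separates the parts of the file name.

```python
import shlex

def jmeter_command(plan: str, test_name: str, environment: str,
                   execution_id: str, output_dir: str = "input") -> list:
    """Hypothetical helper: build a non-GUI JMeter command whose JTL name follows
    {test_name}-{environment-name}-{execution_id} and which logs TESTSTART.MS."""
    jtl = f"{output_dir}/{test_name}-{environment}-{execution_id}.jtl"
    return ["jmeter", "-n", "-t", plan, "-l", jtl,
            "-Jsample_variables=TESTSTART.MS"]

print(shlex.join(jmeter_command("checkout.jmx", "checkout", "stage", "42")))
# prints jmeter -n -t checkout.jmx -l input/checkout-stage-42.jtl -Jsample_variables=TESTSTART.MS
```

The list can be passed to subprocess.run(..., check=True). If you already log other sample variables, append them to the same comma-separated value.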

Run Logstash without removing the container after it stops.

```shell
docker run -it --net jmeter -e "ELASTICSEARCH_HOSTS=http://jmeter-elastic:9200" \
  -v ${PWD}/input:/input/ \
  anasoid/jmeter-logstash
```

Run Logstash and delete each file once it has been read.

```shell
docker run --rm -it --net jmeter -e "ELASTICSEARCH_HOSTS=http://jmeter-elastic:9200" \
  -v ${PWD}/input:/input/ \
  -e "FILE_READ_MODE=read" \
  -e "FILE_COMPLETED_ACTION=delete" \
  anasoid/jmeter-logstash
```

Run Logstash with an external sincedb folder.

```shell
docker run --rm -it --net jmeter -e "ELASTICSEARCH_HOSTS=http://jmeter-elastic:9200" \
  -v ${PWD}/input:/input/ \
  -v ${PWD}/.sincedb:/usr/share/logstash/data/plugins/inputs/file \
  anasoid/jmeter-logstash
```

| Environment variables | Description | Default |
|---|---|---|
| ELASTICSEARCH_HOSTS | Elasticsearch output configuration: hosts (ex: http://elasticsearch:9200) | |
| ELASTICSEARCH_INDEX | Elasticsearch output configuration: index | jmeter-jtl-%{+YYYY.MM.dd} |
| ELASTICSEARCH_USER | Elasticsearch output configuration: user | |
| ELASTICSEARCH_PASSWORD | Elasticsearch output configuration: password | |
| ELASTICSEARCH_SSL_VERIFICATION | Elasticsearch output configuration: ssl_certificate_verification | true |
| ELASTICSEARCH_HTTP_COMPRESSION | Elasticsearch output configuration: http_compression | false |
| ELASTICSEARCH_VERSION | Elasticsearch template version. It should match the Elasticsearch version (not the Logstash version); valid values are 7 and 8. Only Logstash 7 can work with both Elasticsearch 7 and 8; Logstash 8 works only with Elasticsearch 8. | 7 for Logstash 7.x and 8 for Logstash 8.x |
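The default index name jmeter-jtl-%{+YYYY.MM.dd} uses Logstash's sprintf date syntax, which formats the event's @timestamp in UTC, so results land in one index per day. The Python sketch below (the helper daily_index is hypothetical) computes that name for a given @timestamp, which can help when querying or cleaning up old indices. Since the field table describes @timestamp as the insertion time, this sketch assumes the index reflects the ingestion day rather than the day the test ran.

```python
from datetime import datetime, timedelta, timezone

def daily_index(ts: datetime, prefix: str = "jmeter-jtl-") -> str:
    """Hypothetical helper mirroring jmeter-jtl-%{+YYYY.MM.dd}.
    Expects a timezone-aware datetime; the date is taken in UTC, like Logstash."""
    utc = ts.astimezone(timezone.utc)
    return f"{prefix}{utc:%Y.%m.%d}"

# 01:00 at UTC+2 is still the previous day in UTC.
print(daily_index(datetime(2023, 3, 15, 1, 0, tzinfo=timezone(timedelta(hours=2)))))
# prints jmeter-jtl-2023.03.14
```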

| Environment variables | Description | Default |
|---|---|---|
| INPUT_PATH | Default input folder for JTL and statistics files. | /input |
| INPUT_PATH_JTL | Default input folder for JTL files, pattern: (`["${INPUT_PATH:/input}/**.jtl","${INPUT_PATH_JTL:/input}/**.jtl"]`) | /input |
| INPUT_PATH_STAT | Default input folder for statistics files, pattern: (`["${INPUT_PATH:/input}/**.json","${INPUT_PATH_STAT:/input}/**.json"]`) | /input |
| PROJECT_NAME | Project name | undefined |
| ENVIRONMENT_NAME | Environment name; if not provided, it is extracted from the file name ({test_name}-{environment-name}-{execution_id}) | undefined |
| TEST_NAME | Test name; if not provided, it is extracted from the file name ({test_name}-{environment-name}-{execution_id}, {test_name}-{execution_id} or {test_name}) | undefined |
| TEST_METADATA | Test metadata as key-value pairs, ex: (version=v1,type=daily,region=europe). | |
| TEST_TAGS | Test tags, added to the testtags field as a list of values, ex: (v1,daily,europe). | |
| EXECUTION_ID | Execution ID; if not provided, it is extracted from the file name ({test_name}-{environment-name}-{execution_id} or {test_name}-{execution_id}) | undefined |
| FILE_READ_MODE | File input configuration: mode | tail |
| FILE_START_POSITION | File input configuration: start_position | beginning |
| FILE_EXIT_AFTER_READ | File input configuration: exit_after_read | false |
| FILE_COMPLETED_ACTION | File input configuration: file_completed_action | log |
| MISSED_RESPONSE_CODE | Default response code when none is present in the response, e.g. on timeout | 510 |
| PARSE_LABELS_SPLIT_CHAR | Character used to split the label into labels | / |
| PARSE_TRANSACTION_REGEX | Regex to identify transaction labels | `_.+_` |
| PARSE_TRANSACTION_AUTO | Detect transaction controllers based on a null URL and the message format. | true |
| PARSE_FILTER_INCLUDE_SAMPLER_REGEX | Regex used to include samplers and transactions. | |
| PARSE_FILTER_EXCLUDE_SAMPLER_REGEX | Regex used to exclude samplers and transactions. | |
| PARSE_REMOVE_TRANSACTION | Remove transactions. | false |
| PARSE_REMOVE_SAMPLER | Remove samplers (not transactions). | false |
| PARSE_REMOVE_MESSAGE_FIELD | Remove the message field. | true |
| PARSE_CLEANUP_FIELDS | Remove the fields host and path. | true |
| PARSE_WITH_FLAG_SUBRESULT | Flag subresults, i.e. samples whose label has a suffix like "-xx", where xx is the order number | true |
| PCT1 | Percentile 1 value for the statistics report | 90 |
| PCT2 | Percentile 2 value for the statistics report | 95 |
| PCT3 | Percentile 3 value for the statistics report | 99 |
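The file-name convention used by TEST_NAME, ENVIRONMENT_NAME and EXECUTION_ID, plus the TEST_METADATA and TEST_TAGS formats, can be sketched in Python as below. All function names are hypothetical, and the splitting rule is an assumption: the real pipeline may handle names that contain extra '-' characters differently. As the table states, an explicitly provided environment variable takes precedence over the value extracted from the file name.

```python
from pathlib import PurePath
from typing import Mapping

def parse_file_name(path: str) -> dict:
    """Extract test fields from a file name such as 'checkout-stage-42.jtl'.
    Assumes the names themselves contain no '-' besides the separators."""
    parts = PurePath(path).stem.split("-")
    if len(parts) == 3:  # {test_name}-{environment-name}-{execution_id}
        return {"testname": parts[0], "environment": parts[1], "executionid": parts[2]}
    if len(parts) == 2:  # {test_name}-{execution_id}
        return {"testname": parts[0], "executionid": parts[1]}
    if len(parts) == 1:  # {test_name}
        return {"testname": parts[0]}
    return {}  # ambiguous name: leave the fields undefined

def resolve_test_fields(path: str, env: Mapping[str, str]) -> dict:
    """Explicit TEST_NAME / ENVIRONMENT_NAME / EXECUTION_ID override the file name."""
    fields = parse_file_name(path)
    for var, field in (("TEST_NAME", "testname"),
                       ("ENVIRONMENT_NAME", "environment"),
                       ("EXECUTION_ID", "executionid")):
        if env.get(var):
            fields[field] = env[var]
    return fields

def parse_metadata(raw: str) -> dict:
    """TEST_METADATA 'version=v1,type=daily' -> {'version': 'v1', 'type': 'daily'}."""
    pairs = (item.split("=", 1) for item in raw.split(",") if "=" in item)
    return {key.strip(): value.strip() for key, value in pairs}

def parse_tags(raw: str) -> list:
    """TEST_TAGS 'v1,daily,europe' -> ['v1', 'daily', 'europe']."""
    return [tag.strip() for tag in raw.split(",") if tag.strip()]

print(resolve_test_fields("/input/checkout-stage-42.jtl", {"ENVIRONMENT_NAME": "prod"}))
# prints {'testname': 'checkout', 'environment': 'prod', 'executionid': '42'}
```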

Common fields are added during parsing for both JTL and statistics files.

| Fields | Type ELK | Source | Description |
|---|---|---|---|
| origin | string | - | Origin of the message: jtl or stat |
| @timestamp | date | elk | Insertion time in Elasticsearch |
| environment | string | input/parsing | Target environment (ex: dev, stage, ...), provided by an environment variable or extracted from the file name. |
| executionid | string | input/parsing | Unique ID identifying the data of one test, provided by an environment variable or extracted from the file name. |
| filename | string | parsing | File name without extension. |
| path | string | logstash | Path of the file. |
| project | string | input | Project name. |
| testname | string | parsing | Test name, provided by an environment variable or extracted from the file name. |
| testtags | string | parsing | List of keywords obtained by splitting the environment variable TEST_TAGS. |
| timestamp | date | csv | Request time. Accepts a timestamp in ms or the format "yyyy/MM/dd HH:mm:ss.SSS". |
| label | string | csv | Sampler label |
| labels | string | parsing | List of keywords obtained by splitting the label on the character set in the environment variable PARSE_LABELS_SPLIT_CHAR (default "/") |
| globalLabel | string | parsing | Normalized label for subresults. When there is a redirection (ex: 302), JMeter logs all redirected requests as well as the parent one by default (set jmeter.save.saveservice.subresults=false to disable this). The parent keeps the normal label and each subresult gets a suffix like "-xx", where xx is the order number. This field holds the original label, without the number suffix, for all subresults (see fields subresult and redirectLevel). |
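The labels, subresult, globalLabel and redirectLevel fields can be pictured with the Python sketch below. The function names are hypothetical; dropping empty segments when splitting, and returning no redirectLevel for a parent sample, are assumptions of this sketch rather than documented behavior.

```python
import re

# A label ending in "-<number>" is treated as a subresult of the label before the suffix.
SUBRESULT_SUFFIX = re.compile(r"^(?P<base>.+)-(?P<level>\d+)$")

def split_labels(label: str, split_char: str = "/") -> list:
    """labels: split on PARSE_LABELS_SPLIT_CHAR; dropping empty segments is assumed."""
    return [part for part in label.split(split_char) if part]

def flag_subresult(label: str) -> dict:
    """subresult / globalLabel / redirectLevel for one sampler label."""
    match = SUBRESULT_SUFFIX.match(label)
    if match is None:
        return {"subresult": False, "globalLabel": label}
    return {
        "subresult": True,
        "globalLabel": match.group("base"),
        "redirectLevel": int(match.group("level")),
    }

print(split_labels("/api/v1/users"))  # prints ['api', 'v1', 'users']
print(flag_subresult("/api/login-1"))
# prints {'subresult': True, 'globalLabel': '/api/login', 'redirectLevel': 1}
```

A label such as "report-2024" would also be flagged by this rule, which is why PARSE_WITH_FLAG_SUBRESULT exists to turn the flag off.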

Fields for JTL files. For CSV fields, see the documentation on CSV Log format.

For additional fields, see the documentation on Results file configuration.

| Fields | Type ELK | Source | Description |
|---|---|---|---|
| Connect | long | csv | Time to establish the connection |
| IdleTime | long | csv | Number of milliseconds of 'idle' time (normally 0) |
| Latency | long | csv | Time to first response |
| URL | string | csv | |
| allThreads | long | csv | Total number of active threads in all groups |
| bytes | long | csv | Number of bytes in the sample |
| dataType | string | csv | e.g. text |
| domain | string | parsing | Domain name or IP extracted from the URL. |
| elapsed | long | csv | Elapsed time in milliseconds |
| failureMessage | string | csv | |
| grpThreads | long | csv | Number of active threads in this thread group |
| host | string | elk | Hostname of the Logstash node. |
| redirectLevel | long | parsing | Redirect number (see field globalLabel) |
| relativetime | float | parsing | Number of milliseconds since the test started; useful to compare tests. This field requires the test start time to be logged to the CSV (add the variable name TESTSTART.MS to the property sample_variables). |
| request | string | parsing | Request path for HTTP/HTTPS requests. |
| responseCode | string | csv | |
| responseMessage | string | csv | |
| responseStatus | long | parsing | Numeric responseCode. If responseCode is not numeric (e.g. on timeout), the value of the environment variable MISSED_RESPONSE_CODE is used (default 510). |
| sentBytes | long | csv | Number of bytes sent for the sample. |
| subresult | boolean | parsing | true if the sample is a subresult (see field globalLabel) |
| success | boolean | csv | true or false. |
| teststart | date | csv | Test start time. This field requires the test start time to be logged to the CSV (add the variable name TESTSTART.MS to the property sample_variables). |
| threadGrpId | long | parsing | Thread group number (extracted from threadName). |
| threadGrpName | string | parsing | Thread group name (extracted from threadName). |
| workerNode | string | parsing | Host and port of the worker node (extracted from threadName). |
| threadNumber | long | parsing | Thread number, unique within the thread group (extracted from threadName). |
| threadName | string | csv | Thread name, unique within the test. |
| transaction | boolean | parsing | Whether the sample is a transaction generated by a transaction controller. To be identified as a transaction, the label should start and end with "_"; the default regex is `_.+_` (PARSE_TRANSACTION_REGEX). |
| transactionFailingSampler | long | parsing | If the sample is a transaction, the number of failing samplers. |
| transactionTotalSampler | long | parsing | If the sample is a transaction, the total number of samplers. |
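Several of the parsed JTL fields above can be sketched in Python as follows. The function names are hypothetical and this is not the project's Logstash code. The thread-name layout is an assumption: JMeter names threads "&lt;thread group name&gt; &lt;group number&gt;-&lt;thread number&gt;" (e.g. "Thread Group 1-3"), and the optional "host:port-" prefix for workerNode is guessed from the field description. Matching the transaction regex against the whole label is also assumed.

```python
import re
from typing import Optional

# Assumed layout: optional "host:port-" worker prefix, then
# "<thread group name> <group number>-<thread number>", e.g. "Thread Group 1-3".
THREAD_NAME = re.compile(
    r"^(?:(?P<worker>\S+:\d+)-)?(?P<group>.+) (?P<grp_id>\d+)-(?P<thread>\d+)$"
)

def relative_time(timestamp_ms: int, test_start_ms: int) -> float:
    """relativetime: milliseconds elapsed since TESTSTART.MS."""
    return float(timestamp_ms - test_start_ms)

def response_status(response_code: str, missed_code: int = 510) -> int:
    """responseStatus: numeric responseCode, else MISSED_RESPONSE_CODE (default 510)."""
    try:
        return int(response_code)
    except ValueError:
        return missed_code

def is_transaction(label: str, regex: str = r"_.+_") -> bool:
    """transaction: label fully matches PARSE_TRANSACTION_REGEX (full match assumed)."""
    return re.fullmatch(regex, label) is not None

def parse_thread_name(thread_name: str) -> Optional[dict]:
    """Split threadName into workerNode, threadGrpName, threadGrpId, threadNumber."""
    match = THREAD_NAME.match(thread_name)
    if match is None:
        return None
    return {
        "workerNode": match.group("worker"),
        "threadGrpName": match.group("group"),
        "threadGrpId": int(match.group("grp_id")),
        "threadNumber": int(match.group("thread")),
    }

print(parse_thread_name("Thread Group 1-3"))
# prints {'workerNode': None, 'threadGrpName': 'Thread Group', 'threadGrpId': 1, 'threadNumber': 3}
print(response_status("Non HTTP response code: java.net.SocketTimeoutException"))  # prints 510
```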

Fields for Statistics

For the percentile configuration (PCT1, PCT2, PCT3), see Percentiles configuration.

| Fields | Type ELK | Source | Description |
|---|---|---|---|
| isTotal | boolean | parsing | Whether this is the total line; its label is "Total" |
| sampleCount | long | parsing | Sample count |
| errorPct | long | parsing | Error percentage |
| errorCount | long | parsing | Error count |
| receivedKBytesPerSec | long | parsing | Received kilobytes per second |
| sentKBytesPerSec | long | parsing | Sent kilobytes per second |
| throughput | long | parsing | Throughput |
| pct1ResTime | long | parsing | Percentile 1 response time (aggregate_rpt_pct1) |
| pct90 | long | parsing | Percentile 1 value; the field name changes with PCT1 (see configuration) |
| pct2ResTime | long | parsing | Percentile 2 response time (aggregate_rpt_pct2) |
| pct95 | long | parsing | Percentile 2 value; the field name changes with PCT2 (see configuration) |
| pct3ResTime | long | parsing | Percentile 3 response time (aggregate_rpt_pct3) |
| pct99 | long | parsing | Percentile 3 value; the field name changes with PCT3 (see configuration) |
| minResTime | long | parsing | Minimum response time. |
| meanResTime | long | parsing | Mean response time. |
| medianResTime | long | parsing | Median response time. |
| maxResTime | long | parsing | Maximum response time. |

- A Logstash instance can't parse CSV files with different header formats, because the first header read is used for all files. If you have files with different formats, use a new instance each time or restart the instance.

- Changing the sincedb file can't be done on Logstash with Elasticsearch without building the image.

- Labels with the suffix '-{number}' are treated as subresults, so don't end labels with '-{number}', or disable the subresult flag with PARSE_WITH_FLAG_SUBRESULT.
