
alphadl/OOP-eval


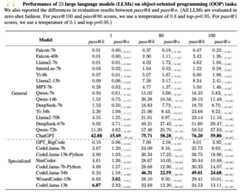

OOP is a code generation benchmark that quantifies the object-oriented programming ability of Large Language Models (LLMs). Details can be found in our paper "OOP: Object-Oriented Programming Evaluation Benchmark for Large Language Models" | [HuggingFace Link]. We collect code snippets from LeetCode, open-source repositories on GitHub, Stack Overflow, and Codewars, and all test samples have undergone carefully designed post-processing.

We show that 🔎:

- ⚠️ Despite excelling at functional programming (FP) benchmarks such as HumanEval and MBPP, code-specialized LLMs like WizardCoder lag behind proprietary models like ChatGPT on our OOP benchmark;
- 🚀 The poor performance of all advanced LLMs on our OOP benchmark highlights a critical need for improvement in this field.
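To make the FP/OOP contrast concrete, here is a hypothetical pair of tasks in Python. Neither is taken from the OOP benchmark, and the names `running_total`, `Account`, and `SavingsAccount` are illustrative only. An FP-style task in the spirit of HumanEval or MBPP asks for a single standalone function, while an OOP-style task asks the model to design classes with encapsulated state, inheritance, and method overriding that a checker then exercises through instances.

```python
from typing import List


# FP-style task (hypothetical): one standalone function, checked by calling it directly.
def running_total(values: List[int]) -> List[int]:
    """Return the prefix sums of `values`."""
    totals: List[int] = []
    acc = 0
    for v in values:
        acc += v
        totals.append(acc)
    return totals


# OOP-style task (hypothetical): define classes, keep state private,
# and override behaviour in a subclass.
class Account:
    def __init__(self, owner: str, balance: float = 0.0) -> None:
        self.owner = owner
        self.__balance = balance  # name-mangled to _Account__balance

    def deposit(self, amount: float) -> None:
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.__balance += amount

    def get_balance(self) -> float:
        return self.__balance

    def describe(self) -> str:
        return f"{self.owner}: {self.get_balance():.2f}"


class SavingsAccount(Account):
    def __init__(self, owner: str, balance: float = 0.0, rate: float = 0.02) -> None:
        super().__init__(owner, balance)
        self.rate = rate

    def add_interest(self) -> None:
        interest = self.get_balance() * self.rate
        if interest > 0:  # skip zero interest instead of tripping deposit's check
            self.deposit(interest)

    def describe(self) -> str:  # overrides Account.describe
        return "Savings " + super().describe()


# An OOP-style check exercises objects and their behaviour, not just return values.
acct = SavingsAccount("Ada", 100.0, rate=0.05)
acct.add_interest()
print(acct.describe())  # Savings Ada: 105.00
```

A checker for the second task can probe things a single-function test never touches, such as whether `acct` is an `Account`, whether the balance is hidden behind the accessor, and whether the overridden `describe` is the one that runs.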

📢 News: [May 15, 2024] OOP has been accepted by ACL 2024 Findings.

- OOP consists of 431 instances;

- OOP contains three difficulty levels: Simple-level OOP, Moderate-level OOP, and Difficult-level OOP.
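Because the benchmark is split into three difficulty levels, results are naturally reported per level. The sketch below assumes a hypothetical per-instance result record with a `level` field (one of `simple`, `moderate`, `difficult`) and a boolean `passed`. The repository's actual file format and field names may differ, so treat this only as an illustration of the per-level bookkeeping.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple

# Hypothetical labels for the Simple, Moderate, and Difficult levels.
LEVELS = ("simple", "moderate", "difficult")


@dataclass
class Result:
    instance_id: str  # hypothetical identifier
    level: str        # one of LEVELS
    passed: bool      # whether the generated code passed its tests


def pass_rate_by_level(results: Iterable[Result]) -> Dict[str, Tuple[int, int, float]]:
    """Return {level: (passed, total, rate)} for every level that has results."""
    passed: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for r in results:
        if r.level not in LEVELS:
            raise ValueError(f"unknown level: {r.level!r}")
        total[r.level] += 1
        passed[r.level] += int(r.passed)
    return {
        lvl: (passed[lvl], total[lvl], passed[lvl] / total[lvl])
        for lvl in LEVELS
        if total[lvl] > 0
    }


results = [
    Result("a", "simple", True),
    Result("b", "simple", False),
    Result("c", "difficult", False),
]
print(pass_rate_by_level(results))
# {'simple': (1, 2, 0.5), 'difficult': (0, 1, 0.0)}
```

Levels with no results are omitted rather than reported as 0% so that a missing level is not confused with a failed one.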

Please cite the paper and star this repo if you use OOP and find it helpful. Feel free to contact wangshuai123@whu.edu.cn or open an issue if you have any questions.

@inproceedings{wang2024oop,
  title={OOP: Object-Oriented Programming Evaluation Benchmark for Large Language Models},
  author={Wang, Shuai and Ding, Liang and Shen, Li and Luo, Yong and Du, Bo and Tao, Dacheng},
  booktitle={Findings of the Association for Computational Linguistics: ACL 2024},
  year={2024}
}

About

The first Object-Oriented Programming (OOP) Evaluation Benchmark for LLMs
