
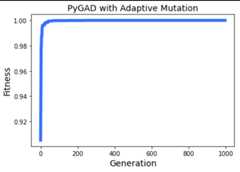

I'm coming back to PyGAD after some time away, and with a new use case I think I've run into something I noticed before: how slowly the best solution converges to a known set of values. The GA does find the result, but after a fast initial phase driven by random probing there is a long phase with almost no progress. I thought about a custom mutation function (one of PyGAD's features), but maybe this is also of more general interest to others.

In my case I defined a fixed gene space with all possible image coordinates as integer values like:

So there are 100^6 (1,000,000,000,000) possible solutions in total. I suspect that the random change applied during mutation is sometimes rounded to 0 (is this true?), or that the wrong gene is changed slightly (adaptively), so the GA has a hard time finishing the run any quicker.

My idea right now would be to write a mutation function in which new solutions are created by something like gene step shifting. In my example I have 6 genes, so I would create up to 12 new solutions, where the gene values (ultimately only one gene at a time) are moved to the next higher and to the next lower value allowed by the gene_space definition above. Would it be possible to do something like this, e.g. only when there has been no fitness improvement for 5 generations or so? Any thoughts on this are much appreciated.
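The step-shifting idea can be sketched in plain Python. This is a minimal sketch under assumptions: `neighbor_solutions` is a hypothetical helper (not part of PyGAD), the gene space is assumed to be a list with one iterable of allowed values per gene, and how the candidates would be evaluated and fed back into the GA (e.g. from a custom mutation or an `on_generation` callback) is left open.

```python
import bisect


def neighbor_solutions(solution, gene_space):
    """Return all solutions that differ from `solution` in exactly one gene,
    with that gene moved to the next lower or next higher allowed value.

    Hypothetical helper: gene_space holds one iterable of allowed values per
    gene. Genes at the edge of their space produce only one neighbor, so a
    solution with n genes has at most 2 * n neighbors.
    """
    neighbors = []
    for idx, value in enumerate(solution):
        space = sorted(set(gene_space[idx]))
        pos = bisect.bisect_left(space, value)
        exact = pos < len(space) and space[pos] == value
        lower = pos - 1                     # largest allowed value below `value`
        higher = pos + 1 if exact else pos  # smallest allowed value above `value`
        for new_pos in (lower, higher):
            if 0 <= new_pos < len(space):
                candidate = list(solution)
                candidate[idx] = space[new_pos]
                neighbors.append(candidate)
    return neighbors


# Hypothetical gene space: 6 integer coordinates, each in 0..99.
gene_space = [range(100)] * 6
best = [10, 0, 50, 99, 42, 7]
print(len(neighbor_solutions(best, gene_space)))  # 10: the genes at 0 and 99 have one neighbor each
```

With 6 genes this costs at most 12 extra fitness evaluations per call, which is cheap enough to run only when progress stalls.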


Thanks, @rengel8, for your suggestion. It is definitely interesting. So, you would like to do mutation in a circular queue (similar to how round-robin scheduling assigns tasks to the CPU). We can create:
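One possible reading of the circular-queue idea is a mutation that changes exactly one gene per offspring and visits the gene indices in a fixed circular order. The sketch below assumes that reading; `make_round_robin_mutation` is a hypothetical helper, not a PyGAD operator, and it works on plain lists of solutions.

```python
import itertools
import random


def make_round_robin_mutation(num_genes, gene_space, seed=None):
    """Build a hypothetical mutation that changes one gene per offspring,
    cycling through gene indices 0, 1, ..., num_genes - 1, 0, ..."""
    order = itertools.cycle(range(num_genes))
    rng = random.Random(seed)

    def mutate(offspring):
        mutated = []
        for solution in offspring:
            gene_idx = next(order)  # next gene in the circular queue
            candidate = list(solution)
            # Only consider values that differ from the current one, so the
            # mutation never leaves the solution unchanged (if the space allows).
            choices = [v for v in gene_space[gene_idx] if v != candidate[gene_idx]]
            if choices:
                candidate[gene_idx] = rng.choice(choices)
            mutated.append(candidate)
        return mutated

    return mutate


# Hypothetical setup: 3 integer genes, each in 0..9.
mutate = make_round_robin_mutation(num_genes=3, gene_space=[range(10)] * 3, seed=0)
for child in mutate([[1, 2, 3]] * 4):
    print(child)  # children change gene 0, 1, 2, then 0 again
```

Because the cycle is stored in the closure, successive calls (e.g. one per generation) continue where the previous call stopped instead of restarting at gene 0.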

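It will not be a problem to get them supported as independent mutation operators. It would also be a good idea to change the mutation operators in the middle of a run. For now, you can experiment with whether changing the type of mutation/crossover helps to make faster progress. Here is an `on_generation` callback that switches to scramble mutation when the best fitness has not changed over the last 5 generations, and switches to two-points crossover after generation 20:

```python
def on_generation(ga_instance):
    print("Generation " + str(ga_instance.generations_completed))
    # Fitness of the best solution in the current generation.
    best_fitness = ga_instance.best_solution(pop_fitness=ga_instance.last_generation_fitness)[1]
    # Best fitness recorded about 5 generations ago.
    last_5_generation_idx = ga_instance.generations_completed - 5
    if last_5_generation_idx >= 0:
        last_5_generation_fitness = ga_instance.best_solutions_fitness[last_5_generation_idx]
        # No improvement over the last 5 generations: switch to scramble mutation.
        if (last_5_generation_fitness - best_fitness) == 0:
            ga_instance.mutation = ga_instance.scramble_mutation
    # After 20 generations, switch to two-points crossover.
    if ga_instance.generations_completed == 20:
        ga_instance.crossover = ga_instance.two_points_crossover
```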
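A stall check like the one in that callback can also be written as a small standalone helper. This is a sketch under assumptions: `is_stalled` is hypothetical, fitness is assumed to be maximized, and the history is assumed to hold one best-fitness value per completed generation (such as the `best_solutions_fitness` list read above). Comparing the best value inside the window against the best value before it, with a tolerance, avoids relying on exact float equality.

```python
def is_stalled(best_fitness_history, window=5, tolerance=0.0):
    """Return True if the best fitness improved by no more than `tolerance`
    during the last `window` generations (fitness is maximized)."""
    if len(best_fitness_history) <= window:
        return False  # not enough generations to judge yet
    before = max(best_fitness_history[:-window])
    recent = max(best_fitness_history[-window:])
    return recent - before <= tolerance


print(is_stalled([1, 2, 3, 3, 3, 3, 3, 3]))  # True: no gain in the last 5 values
print(is_stalled([1, 2, 3, 3, 3, 3, 3, 4]))  # False: the last value improved
```

Inside the callback above, the inner comparison could then become `if is_stalled(ga_instance.best_solutions_fitness): ...`.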


Thank you, Ahmed, for your thorough reply. Maybe one issue is also that the random value (here from randint) might not produce a different gene value at all during mutation in some cases, due to rounding to an integer, and/or that mutation changes genes that were already "fine". I used adaptive mutation to reduce the randomness for higher fitness values, but so far the only remedy has been to give it more generations and patience. I will try your suggestion soon and report back. Something else I do is keep the results of already evaluated solutions, which saves a lot of time; this is a great feature when fitness evaluations are expensive.
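The rounding concern can be checked with a quick simulation. This sketch only models one hypothetical mechanism: a uniform random offset in [low, high] is added to an integer gene and the result is rounded back to an integer. It is not a description of what PyGAD does internally; `no_change_rate` is a hypothetical helper.

```python
import random


def no_change_rate(trials=100_000, low=-1.0, high=1.0, seed=0):
    """Estimate how often adding a uniform offset in [low, high] to an
    integer gene and rounding back to an integer leaves the gene unchanged."""
    rng = random.Random(seed)
    unchanged = 0
    for _ in range(trials):
        gene = rng.randint(0, 99)
        mutated = round(gene + rng.uniform(low, high))
        if mutated == gene:
            unchanged += 1
    return unchanged / trials


print(no_change_rate())                     # close to 0.5: only offsets with |d| >= 0.5 move the gene
print(no_change_rate(low=-0.4, high=0.4))   # 1.0: such a narrow range never changes the gene
```

Under this model, narrowing the mutation range (as adaptive mutation tends to do for fitter solutions) makes no-op mutations more frequent, and below a half-unit range every mutation is a no-op.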
