
Extensions for the DALEX package

ModelOriented/DALEXtra


The DALEXtra package is an extension pack for the DALEX package. It contains various tools for XAI (eXplainable Artificial Intelligence) that can help us inspect and improve our models. The functionalities of DALEXtra can be divided into two areas.

- Champion-Challenger analysis
  - Lets us compare two or more machine learning models, determine which one is better, and improve both of them.
  - Funnel plot of performance measures, an innovative approach to model comparison.
  - Automatic HTML report.

- Cross-language comparison
  - Creating explainers for models built in different languages, so they can be explained using R tools like the DrWhy.AI family.
  - Currently supported are Python scikit-learn and keras, Java h2o, and R xgboost, mlr, mlr3 and tidymodels.

# install.packages("devtools")
# It is recommended to install the latest version of DALEX from GitHub
devtools::install_github("ModelOriented/DALEX")
# Install the development version of DALEXtra from GitHub
devtools::install_github("ModelOriented/DALEXtra")

or the latest CRAN version:

install.packages("DALEX")
install.packages("DALEXtra")

Other packages that are useful for working with explanations:

devtools::install_github("ModelOriented/ingredients")
devtools::install_github("ModelOriented/iBreakDown")
devtools::install_github("ModelOriented/shapper")
devtools::install_github("ModelOriented/auditor")
devtools::install_github("ModelOriented/modelStudio")

The above packages can be used along with an explain object to create explanations (ingredients, iBreakDown, shapper), audit our model (auditor) or automate the model exploration process (modelStudio).

Without any doubt, comparing models, especially black-box ones, is a very important use case nowadays. New models are created every day, and we need tools that help us determine which one is better. For this purpose we present the Champion-Challenger analysis. It is a set of functions that create comparisons of models, which can later be gathered into a single report with generic comments. An example of a report can be found here. As you can see, any explanation that has a generic plot() function can be plotted.
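Whether an object qualifies is decided by R's S3 dispatch: `plot()` draws it with a class-specific method when one exists for one of its classes. The sketch below uses base R's `utils::getS3method()` to check this. The helper name `has_plot_method` is hypothetical (it is not part of DALEX or DALEXtra), and the check deliberately ignores `plot.default`, which accepts almost any input.

```r
# Hypothetical helper, not part of DALEX/DALEXtra: TRUE when a class-specific
# plot() method exists for at least one class of `x` (plot.default is not counted).
has_plot_method <- function(x) {
  any(vapply(class(x), function(cl) {
    !is.null(utils::getS3method("plot", cl, optional = TRUE))
  }, logical(1)))
}

fit <- lm(mpg ~ wt, data = mtcars)
has_plot_method(fit)     # TRUE: plot.lm is registered by the stats package
has_plot_method("text")  # FALSE: there is no plot.character method
```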

The core of our analysis is the funnel plot. It lets us find subsets of data where one of the models is significantly better than the others. That ability is extremely useful when we have models with similar overall performance and want to know which one to use.

library("mlr")
library("DALEXtra")

# Regression task on the apartments data (shipped with DALEX)
task <- mlr::makeRegrTask(id = "R", data = apartments, target = "m2.price")

# Champion: linear model
learner_lm <- mlr::makeLearner("regr.lm")
model_lm <- mlr::train(learner_lm, task)
explainer_lm <- explain_mlr(model_lm, apartmentsTest, apartmentsTest$m2.price,
                            label = "LM", verbose = FALSE, precalculate = FALSE)

# Challenger: random forest
learner_rf <- mlr::makeLearner("regr.randomForest")
model_rf <- mlr::train(learner_rf, task)
explainer_rf <- explain_mlr(model_rf, apartmentsTest, apartmentsTest$m2.price,
                            label = "RF", verbose = FALSE, precalculate = FALSE)

# Compare RMSE of both models within 5 bins of every partitioning variable,
# including a derived variable: surface per room
plot_data <- funnel_measure(explainer_lm, explainer_rf,
                            partition_data = cbind(apartmentsTest,
                                                   "m2.per.room" = apartmentsTest$surface / apartmentsTest$no.rooms),
                            nbins = 5,
                            measure_function = DALEX::loss_root_mean_square,
                            show_info = FALSE)

plot(plot_data)[[1]]
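To make the per-subset comparison more concrete, here is a minimal base-R sketch of the idea behind a funnel plot: split the data into bins of one partitioning variable and compare the two models' loss inside each bin. It is an illustration under stated assumptions, not the implementation of `funnel_measure()`: the helper `per_bin_loss_diff` is hypothetical, quantile-based bins are an assumed binning rule, and the built-in `mtcars` data and two `lm()` fits stand in for real champion and challenger models.

```r
# Illustrative sketch only: NOT the implementation of DALEXtra::funnel_measure().
# Compares two models' RMSE inside quantile bins of one partitioning variable.
rmse <- function(y, yhat) sqrt(mean((y - yhat)^2))

# Hypothetical helper: one row per bin with both losses and their difference
per_bin_loss_diff <- function(y, pred_champion, pred_challenger, partition, nbins = 5) {
  # Quantile-based cut points (an assumption); unique() drops duplicated breaks
  breaks <- unique(quantile(partition, probs = seq(0, 1, length.out = nbins + 1)))
  bins <- cut(partition, breaks = breaks, include.lowest = TRUE)
  groups <- split(seq_along(y), bins)  # row indices belonging to each bin
  res <- data.frame(
    bin = names(groups),
    n = vapply(groups, length, integer(1)),
    champion = vapply(groups, function(i) rmse(y[i], pred_champion[i]), numeric(1)),
    challenger = vapply(groups, function(i) rmse(y[i], pred_challenger[i]), numeric(1)),
    row.names = NULL
  )
  # Positive difference: the challenger has a higher loss (is worse) in that bin
  res$difference <- res$challenger - res$champion
  res
}

# Usage on the built-in mtcars data, binned by horsepower
fit_a <- lm(mpg ~ wt, data = mtcars)
fit_b <- lm(mpg ~ wt + hp, data = mtcars)
res <- per_bin_loss_diff(mtcars$mpg, fitted(fit_a), fitted(fit_b),
                         partition = mtcars$hp, nbins = 4)
print(res)
```

Bins where `difference` has a large magnitude are the subsets in which one model clearly beats the other, which is the kind of information the funnel plot visualises for every partitioning variable at once.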

Here we present a short use case for our package and its compatibility with Python.

To use some features of DALEXtra, Anaconda is needed. The easiest way to get it is to visit the Anaconda website and choose the proper OS, as shown in the following picture. There is no big difference between Python versions when downloading Anaconda: you can always create a virtual environment with any version of Python, no matter which version was downloaded first.


On Windows, it is crucial to add conda to the PATH environment variable. You can do it during the installation by marking this checkbox.

Alternatively, if conda is already installed, follow these instructions.

On a Unix-like OS, adding conda to PATH is not required.
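A quick, rough way to confirm from R that a `conda` executable is visible is base R's `Sys.which()`, which returns an empty string when a command cannot be found on the PATH of the current R session. The helper name `conda_on_path` is hypothetical. Note that an R session started from a GUI may see a different PATH than your terminal, so a negative result is worth double-checking.

```r
# Hypothetical helper: reports whether `conda` is on the PATH of this R session.
# Sys.which() returns "" when the command cannot be found.
conda_on_path <- function() {
  path <- Sys.which("conda")
  if (nzchar(path)) {
    message("conda found at: ", path)
  } else {
    message("conda not found on PATH")
  }
  invisible(unname(nzchar(path)))  # TRUE/FALSE without the "conda" name attribute
}

conda_on_path()
```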

First we need to provide the data; an explainer is useless without it. Python objects do not store the training data, so we always have to provide a dataset. Feel free to use the datasets attached to the DALEX package or those stored in the DALEXtra files.

titanic_test <- read.csv(system.file("extdata", "titanic_test.csv", package = "DALEXtra"))

Keep in mind that this data frame includes the target variable (the 18th column), which the scikit-learn model does not expect as an input, so it has to be left out of the data passed to the explainer.
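Dropping the target by name rather than by position (`1:17`) keeps the code correct even if the column order changes. A minimal sketch, assuming the target column is called `survived` as in the explainer call; the helper `drop_target` and the toy data frame are hypothetical stand-ins for `titanic_test`.

```r
# Hypothetical helper: keep every column except the named target.
# Selecting by name avoids depending on the target's position (18th column).
drop_target <- function(df, target) {
  stopifnot(target %in% names(df))
  df[, setdiff(names(df), target), drop = FALSE]
}

# Toy data frame standing in for titanic_test
toy <- data.frame(age = c(22, 38), fare = c(7.25, 71.28), survived = c(0, 1))
features <- drop_target(toy, "survived")
names(features)  # "age" "fare"
```

Applied to the real data, `drop_target(titanic_test, "survived")` should select the same columns as `titanic_test[, 1:17]`, assuming `survived` is the only non-feature column.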

Creating an explainer from a scikit-learn Python model is very simple thanks to DALEXtra. The only thing you need to provide is the path to the pickle file and, if necessary, something that identifies the Python environment. It may be a .yml file with the packages specification, the name of an existing conda environment or a path to a Python virtual environment. Calling explain_scikitlearn with only the .pkl file and the data will use the default Python.

library(DALEXtra)
explainer <- explain_scikitlearn(system.file("extdata", "scikitlearn.pkl", package = "DALEXtra"),
                                 yml = system.file("extdata", "testing_environment.yml", package = "DALEXtra"),
                                 data = titanic_test[, 1:17],
                                 y = titanic_test$survived,
                                 colorize = FALSE)

## Preparation of a new explainer is initiated
## -> model label : scikitlearn_model ( default )
## -> data : 524 rows 17 cols
## -> target variable : 524 values
## -> predict function : yhat.scikitlearn_model will be used ( default )
## -> predicted values : numerical, min = 0.02086126 , mean = 0.288584 , max = 0.9119996
## -> model_info : package reticulate , ver. 1.16 , task classification ( default )
## -> residual function : difference between y and yhat ( default )
## -> residuals : numerical, min = -0.8669431 , mean = 0.02248468 , max = 0.9791387
## A new explainer has been created!

Now, with the explainer ready, we can use any of the DrWhy.AI universe tools to make explanations. Here is a small demo.

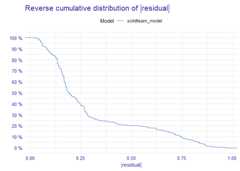

library(DALEX)
plot(model_performance(explainer))

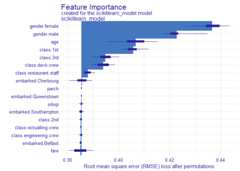

library(ingredients)
plot(feature_importance(explainer))

describe(feature_importance(explainer))
## The number of important variables for scikitlearn_model's prediction is 3 out of 17.
## Variables gender.female, gender.male, age have the highest importantance.

library(iBreakDown)
plot(break_down(explainer, titanic_test[2, 1:17]))

describe(break_down(explainer, titanic_test[2, 1:17]))

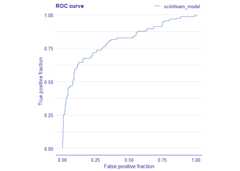

## Scikitlearn_model predicts, that the prediction for the selected instance is 0.132 which is lower than the average model prediction.
##
## The most important variable that decrease the prediction is class.3rd.
##
## Other variables are with less importance. The contribution of all other variables is -0.108.

library(auditor)
# store the evaluation under a name that does not mask base::eval()
model_eval <- model_evaluation(explainer)
plot_roc(model_eval)

# Predictions with new data
predict(explainer, titanic_test[1:10, 1:17])

## [1] 0.3565896 0.1321947 0.7638813 0.1037486 0.1265221 0.2949228 0.1421281
## [8] 0.1421281 0.4154695 0.1321947

Work on this package was financially supported by the NCN Opus grant 2016/21/B/ST6/02176.
