Official code of "HybrIK: A Hybrid Analytical-Neural Inverse Kinematics Solution for 3D Human Pose and Shape Estimation", CVPR 2021

jeffffffli/HybrIK

This repo contains the code of our papers:

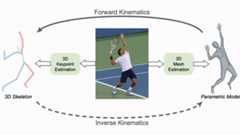

HybrIK: A Hybrid Analytical-Neural Inverse Kinematics Solution for 3D Human Pose and Shape Estimation, In CVPR 2021

HybrIK-X: Hybrid Analytical-Neural Inverse Kinematics for Whole-body Mesh Recovery, TPAMI 2025

[2024/12/31] HybrIK-X is accepted by TPAMI!

[2023/06/02] Demo code for whole-body HybrIK-X is released.

[2022/12/03] HybrIK for Blender add-on is now available for download. The output of HybrIK can be imported to Blender and saved as fbx.

[2022/08/16] Pretrained model with HRNet-W48 backbone is available.

[2022/07/31] Training code with predicted camera is released.

[2022/07/25] HybrIK is now supported in AlphaPose! Multi-person demo with pose-tracking is available.

[2022/04/26] Achieved SOTA results by adding the 3DPW dataset for training.

[2022/04/25] The demo code is released!

HybrIK and HybrIK-X are based on a hybrid inverse kinematics (IK) solution that converts accurate 3D keypoints into parametric body meshes.

```bash
# 1. Create a conda virtual environment.
conda create -n hybrik python=3.8 -y
conda activate hybrik

# 2. Install PyTorch
conda install pytorch==1.9.1 torchvision==0.10.1 -c pytorch

# 3. Install PyTorch3D (Optional, only for visualization)
conda install -c fvcore -c iopath -c conda-forge fvcore iopath
conda install -c bottler nvidiacub
pip install git+ssh://git@github.com/facebookresearch/pytorch3d.git@stable

# 4. Pull our code
git clone https://github.com/Jeff-sjtu/HybrIK.git
cd HybrIK

# 5. Install
pip install pycocotools
python setup.py develop  # or "pip install -e ."
```
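After installation, a quick check that the packages named in the steps above can be found in the active environment may save some debugging time. The sketch below uses only the standard library and does not import the packages themselves; the helper name `missing_packages` is hypothetical, and the import names (`torch`, `torchvision`, `pycocotools`, `pytorch3d`) are the usual ones for these distributions.

```python
import importlib.util

# Import names for the packages installed in the steps above.
REQUIRED = ("torch", "torchvision", "pycocotools")
OPTIONAL = ("pytorch3d",)  # only needed for visualization


def missing_packages(names):
    """Return the subset of `names` that cannot be located in this environment."""
    missing = []
    for name in names:
        try:
            found = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            found = False
        if not found:
            missing.append(name)
    return missing


if __name__ == "__main__":
    print("missing required packages:", missing_packages(REQUIRED))
    print("missing optional packages:", missing_packages(OPTIONAL))
```

Run it inside the `hybrik` conda environment; an empty list means every package in that group was found.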

Download the necessary model files from [Google Drive | Baidu (code: 2u3c)] and unzip them in the `${ROOT}` directory.

| Backbone | Training Data | PA-MPJPE (3DPW) | MPJPE (3DPW) | PA-MPJPE (Human3.6M) | MPJPE (Human3.6M) | Download | Config |
|---|---|---|---|---|---|---|---|
| ResNet-34 | w/ 3DPW | 44.5 | 72.4 | 33.8 | 55.5 | model | cfg |
| HRNet-W48 | w/o 3DPW | 48.6 | 88.0 | 29.5 | 50.4 | model | cfg |
| HRNet-W48 | w/ 3DPW | 41.8 | 71.3 | 29.8 | 47.1 | model | cfg |

| Backbone | MVE (AGORA Test) | MPJPE (AGORA Test) | Download | Config |
|---|---|---|---|---|
| HRNet-W48 | 134.1 | 127.5 | model | cfg |
| HRNet-W48 + RLE | 112.1 | 107.6 | model | cfg |
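For readers unfamiliar with the metrics in the tables, the block below restates their standard definitions (lower is better; these benchmarks conventionally report them in millimetres). The notation is an assumption made here for illustration: $\hat{\mathbf{p}}_j$ and $\mathbf{p}_j$ are the predicted and ground-truth positions of joint $j$ out of $J$, and $\hat{\mathbf{v}}_i$, $\mathbf{v}_i$ are the predicted and ground-truth positions of mesh vertex $i$ out of $V$. Exact evaluation protocols (for example, root alignment and joint sets) vary by benchmark and are not specified here.

```latex
\mathrm{MPJPE} = \frac{1}{J} \sum_{j=1}^{J}
  \left\lVert \hat{\mathbf{p}}_j - \mathbf{p}_j \right\rVert_2

% PA-MPJPE: MPJPE after a similarity (Procrustes) alignment of the prediction
(s^\ast, R^\ast, \mathbf{t}^\ast) = \arg\min_{s,\,R,\,\mathbf{t}}
  \sum_{j=1}^{J} \left\lVert s R \hat{\mathbf{p}}_j + \mathbf{t} - \mathbf{p}_j \right\rVert_2^2
\qquad
\mathrm{PA\text{-}MPJPE} = \frac{1}{J} \sum_{j=1}^{J}
  \left\lVert s^\ast R^\ast \hat{\mathbf{p}}_j + \mathbf{t}^\ast - \mathbf{p}_j \right\rVert_2

% MVE: the same average taken over mesh vertices instead of joints
\mathrm{MVE} = \frac{1}{V} \sum_{i=1}^{V}
  \left\lVert \hat{\mathbf{v}}_i - \mathbf{v}_i \right\rVert_2
```

Because PA-MPJPE removes global scale, rotation, and translation before measuring error, it is always less than or equal to MPJPE for the same prediction, which matches the pattern in the tables.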

First make sure you download the pretrained model (with predicted camera) and place it in the `${ROOT}/pretrained_models` directory, i.e., `./pretrained_models/hybrik_hrnet.pth` and `./pretrained_models/hybrikx_rle_hrnet.pth`.

- Visualize HybrIK on videos (run frame by frame) and save results:

```bash
python scripts/demo_video.py --video-name examples/dance.mp4 --out-dir res_dance --save-pk --save-img
```

The saved results in `./res_dance/res.pk` can be imported to Blender with our add-on.

- Visualize HybrIK on images:

```bash
python scripts/demo_image.py --img-dir examples --out-dir res
```

- Visualize whole-body HybrIK-X on videos and save results:

```bash
python scripts/demo_video_x.py --video-name examples/dance.mp4 --out-dir res_dance --save-pk --save-img
```
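To see what the video demo saved before importing it elsewhere, the `res.pk` file can be inspected from Python. The sketch below assumes the file is a standard pickle holding a dictionary; that format, the helper name `summarize_results`, and the summary strings are assumptions made here, not documented behavior. No key names are assumed: the code reports whatever it finds.

```python
import pickle
from pathlib import Path


def summarize_results(path):
    """Load a pickled results file and describe each top-level entry.

    Assumes the file is a pickle containing a dict. Only unpickle files you
    produced yourself, since unpickling can execute arbitrary code.
    """
    with Path(path).open("rb") as f:
        results = pickle.load(f)
    if not isinstance(results, dict):
        raise TypeError(f"expected a dict, got {type(results).__name__}")
    summary = {}
    for key, value in results.items():
        shape = getattr(value, "shape", None)
        if shape is not None:
            # Array-like objects (e.g. NumPy arrays) expose a shape.
            summary[key] = f"array{tuple(shape)}"
        elif hasattr(value, "__len__"):
            summary[key] = f"{type(value).__name__}[len={len(value)}]"
        else:
            summary[key] = type(value).__name__
    return summary


if __name__ == "__main__":
    result_file = Path("res_dance") / "res.pk"
    if result_file.exists():
        for key, description in summarize_results(result_file).items():
            print(f"{key}: {description}")
    else:
        print(f"{result_file} not found; run the video demo with --save-pk first")
```

If the file stores NumPy arrays, run the script in the same environment as the demo so the array types can be unpickled.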

Download the Human3.6M, MPI-INF-3DHP, 3DPW and MSCOCO datasets. The data must follow the directory structure shown below. Thanks to the great work done by Moon et al., we use the Human3.6M images provided in PoseNet.

```
|-- data
`-- |-- h36m
    `-- |-- annotations
        `-- images
`-- |-- pw3d
    `-- |-- json
        `-- imageFiles
`-- |-- 3dhp
    `-- |-- annotation_mpi_inf_3dhp_train.json
        |-- annotation_mpi_inf_3dhp_test.json
        |-- mpi_inf_3dhp_train_set
        `-- mpi_inf_3dhp_test_set
`-- |-- coco
    `-- |-- annotations
        |   |-- person_keypoints_train2017.json
        |   `-- person_keypoints_val2017.json
        |-- train2017
        `-- val2017
```

- Download Human3.6M parsed annotations. [Google | Baidu]

- Download 3DPW parsed annotations. [Google | Baidu]

- Download MPI-INF-3DHP parsed annotations. [Google | Baidu]
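Before starting a long training run, it can help to confirm that the `data` directory matches the layout listed above. The following sketch only checks that each listed file or folder exists; the names `EXPECTED_ENTRIES` and `missing_entries` are hypothetical, and the check says nothing about whether the contents of those folders are complete.

```python
from pathlib import Path

# Paths relative to the data root, taken from the directory tree above.
EXPECTED_ENTRIES = [
    "h36m/annotations",
    "h36m/images",
    "pw3d/json",
    "pw3d/imageFiles",
    "3dhp/annotation_mpi_inf_3dhp_train.json",
    "3dhp/annotation_mpi_inf_3dhp_test.json",
    "3dhp/mpi_inf_3dhp_train_set",
    "3dhp/mpi_inf_3dhp_test_set",
    "coco/annotations/person_keypoints_train2017.json",
    "coco/annotations/person_keypoints_val2017.json",
    "coco/train2017",
    "coco/val2017",
]


def missing_entries(data_root="data"):
    """Return the expected entries that do not exist under `data_root`."""
    root = Path(data_root)
    return [rel for rel in EXPECTED_ENTRIES if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_entries("data")
    if missing:
        print("Missing dataset entries:")
        for rel in missing:
            print(f"  data/{rel}")
    else:
        print("All expected dataset entries were found.")
```

Run it from the repository root so that the relative `data` path resolves correctly.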

To train a model, run the training script with an experiment name and a config file:

```bash
./scripts/train_smpl_cam.sh test_3dpw configs/256x192_adam_lr1e-3-res34_smpl_3d_cam_2x_mix_w_pw3d.yaml
```

Download the pretrained model (ResNet-34 or HRNet-W48), then run the validation script with the matching config file and checkpoint:

```bash
./scripts/validate_smpl_cam.sh ./configs/256x192_adam_lr1e-3-hrw48_cam_2x_w_pw3d_3dhp.yaml ./pretrained_hrnet.pth
```

If our code helps your research, please consider citing the following papers:

```bibtex
@inproceedings{li2021hybrik,
    title={Hybrik: A hybrid analytical-neural inverse kinematics solution for 3d human pose and shape estimation},
    author={Li, Jiefeng and Xu, Chao and Chen, Zhicun and Bian, Siyuan and Yang, Lixin and Lu, Cewu},
    booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
    pages={3383--3393},
    year={2021}
}

@article{li2023hybrik,
    title={HybrIK-X: Hybrid Analytical-Neural Inverse Kinematics for Whole-body Mesh Recovery},
    author={Li, Jiefeng and Bian, Siyuan and Xu, Chao and Chen, Zhicun and Yang, Lixin and Lu, Cewu},
    journal={arXiv preprint arXiv:2304.05690},
    year={2023}
}
```
