Design a Distributed Job Scheduler - System Design Interview

A distributed job scheduler is a system designed to manage, schedule, and execute tasks (referred to as "jobs") across multiple computers or nodes in a distributed network.

Distributed job schedulers are used for automating and managing large-scale tasks like batch processing, report generation, and orchestrating complex workflows across multiple nodes.

In this article, we will walk through the process of designing a scalable distributed job scheduling service that can handle millions of tasks, and ensure high availability.

1. Requirements Gathering

Before diving into the design, let’s outline the functional and non-functional requirements.

Functional Requirements:

Users can submit one-time or periodic jobs for execution.

Users can cancel the submitted jobs.

The system should distribute jobs across multiple worker nodes for execution.

The system should provide monitoring of job status (queued, running, completed, failed).

The system should prevent the same job from being executed multiple times concurrently.

Non-Functional Requirements:

Scalability: The system should be able to schedule and execute millions of jobs.

High Availability: The system should be fault-tolerant with no single point of failure. If a worker node fails, the system should reschedule the job to other available nodes.

Latency: Jobs should be scheduled and executed with minimal delay.

Consistency: Job results should be consistent, ensuring that jobs are executed once (or with minimal duplication).

Additional Requirements (Out of Scope):

Job prioritization: The system should support scheduling based on job priority.

Job dependencies: The system should handle jobs with dependencies.

2. High Level Design

At a high level, our distributed job scheduler will consist of the following components:

1. Job Submission Service

The Job Submission Service is the entry point for clients to interact with the system.

It provides an interface for users or services to submit, update, or cancel jobs via APIs.

This layer exposes a RESTful API that accepts job details such as:

Job name

Frequency (One-time, Daily)

Execution time

Job payload (task details)

It saves job metadata (e.g., execution_time, frequency, status = pending) in the Job Store (a database) and returns a unique Job ID to the client.
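The submission flow described above can be sketched as follows. This is a minimal illustration, not the article's implementation: `JobStore` is a hypothetical in-memory stand-in for the database, and `submit_job` with its parameter names is an assumption based on the fields listed above.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class JobStore:
    """Hypothetical in-memory stand-in for the Job Store database."""
    jobs: dict = field(default_factory=dict)

    def save(self, job_id: str, record: dict) -> None:
        self.jobs[job_id] = record


def submit_job(store: JobStore, name: str, frequency: str,
               execution_time: int, payload: dict) -> str:
    """Persist job metadata with status 'pending' and return a unique job ID."""
    job_id = str(uuid.uuid4())
    store.save(job_id, {
        "name": name,
        "frequency": frequency,            # e.g. "one-time" or "daily"
        "execution_time": execution_time,  # Unix timestamp in seconds
        "payload": payload,
        "status": "pending",
    })
    return job_id


store = JobStore()
job_id = submit_job(store, "daily-report", "daily", 1726110000, {"report": "sales"})
```

Generating the ID on the server side keeps clients from having to coordinate on uniqueness; a real service would also validate the request before saving it.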

2. Job Store

The Job Store is responsible for persisting job information and maintaining the current state of all jobs and workers in the system.

The Job Store contains the following database tables:

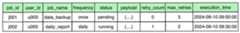

Job Table

This table stores the metadata of the job, including job id, user id, frequency, payload, execution time, retry count and status (pending, running, completed, failed).

Job Execution Table

Jobs can be executed multiple times in case of failures.

This table tracks the execution attempts for each job, storing information like execution id, start time, end time, worker id, status and error message.

If a job fails and is retried, each attempt will be logged here.

Job Schedules

The Schedules Table stores scheduling details for each job, including the next_run_time.

For one-time jobs, the next_run_time is the same as the job’s execution time, and the last_run_time remains null.

For recurring jobs, the next_run_time is updated after each execution to reflect the next scheduled run.

Worker Table

The Worker Node Table stores information about each worker node, including its IP address, status, last heartbeat, capacity and current load.
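To make the four tables concrete, here is a rough schema sketch using Python's built-in `sqlite3` module. The column names follow the descriptions above, but the exact table names, types, and keys are assumptions, and SQLite is used only so the example runs anywhere; it is not a recommendation for the production store.

```python
import sqlite3

# Assumed schema: names and types are illustrative, derived from the prose above.
SCHEMA = """
CREATE TABLE JobTable (
    job_id         TEXT PRIMARY KEY,
    user_id        TEXT NOT NULL,
    frequency      TEXT NOT NULL,
    payload        TEXT,
    execution_time INTEGER,
    retry_count    INTEGER DEFAULT 0,
    status         TEXT DEFAULT 'pending'
);
CREATE TABLE JobExecutionTable (
    execution_id   TEXT PRIMARY KEY,
    job_id         TEXT NOT NULL REFERENCES JobTable(job_id),
    worker_id      TEXT,
    start_time     INTEGER,
    end_time       INTEGER,
    status         TEXT,
    error_message  TEXT
);
CREATE TABLE JobSchedulesTable (
    job_id         TEXT PRIMARY KEY REFERENCES JobTable(job_id),
    next_run_time  INTEGER,
    last_run_time  INTEGER
);
CREATE TABLE WorkerTable (
    worker_id      TEXT PRIMARY KEY,
    ip_address     TEXT,
    status         TEXT,
    last_heartbeat INTEGER,
    capacity       INTEGER,
    current_load   INTEGER
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

# List the tables that were created.
tables = sorted(row[0] for row in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table'"))
```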

3. Scheduling Service

The Scheduling Service is responsible for selecting jobs for execution based on their next_run_time in the Job Schedules Table.

It periodically queries the table for jobs scheduled to run at the current minute:

SELECT * FROM JobSchedulesTable WHERE next_run_time = 1726110000;

Once the due jobs are retrieved, they are pushed to the Distributed Job Queue for worker nodes to execute.

Simultaneously, the status in the Job Table is updated to SCHEDULED.
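One polling pass of the Scheduling Service might look like the sketch below. It assumes the table names used in the query above, uses an in-process `queue.Queue` as a stand-in for Kafka or RabbitMQ, and introduces two hypothetical helpers, `current_minute` and `enqueue_due_jobs`.

```python
import queue
import sqlite3


def current_minute(now_seconds: float) -> int:
    """Truncate a Unix timestamp to the start of its minute."""
    return int(now_seconds) // 60 * 60


def enqueue_due_jobs(conn, job_queue, minute_ts):
    """Push every job due at minute_ts onto the queue and mark it SCHEDULED."""
    rows = conn.execute(
        "SELECT job_id FROM JobSchedulesTable WHERE next_run_time = ?",
        (minute_ts,),
    ).fetchall()
    for (job_id,) in rows:
        job_queue.put(job_id)
        conn.execute(
            "UPDATE JobTable SET status = 'SCHEDULED' WHERE job_id = ?",
            (job_id,),
        )
    conn.commit()
    return len(rows)


# Demo data: one job due at 1726110000 and one due a minute later.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE JobTable (job_id TEXT PRIMARY KEY, status TEXT)")
conn.execute("CREATE TABLE JobSchedulesTable (job_id TEXT, next_run_time INTEGER)")
conn.executemany("INSERT INTO JobTable VALUES (?, 'pending')", [("j1",), ("j2",)])
conn.executemany("INSERT INTO JobSchedulesTable VALUES (?, ?)",
                 [("j1", 1726110000), ("j2", 1726110060)])

job_queue = queue.Queue()
scheduled = enqueue_due_jobs(conn, job_queue, current_minute(1726110042.7))
```

An exact-match query only finds jobs if the scheduler never skips a minute. A more defensive variant could select rows with `next_run_time <= ?` that are still pending, so a delayed poll catches up on missed work.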

4. Distributed Job Queue

The Distributed Job Queue (e.g., Kafka, RabbitMQ) acts as a buffer between the Scheduling Service and the Execution Service, ensuring that jobs are distributed efficiently to available worker nodes.

It holds the jobs and allows the Execution Service to pull them and assign them to worker nodes.

5. Execution Service

The Execution Service is responsible for running the jobs on worker nodes and updating the results in the Job Store.

It consists of a coordinator and a pool of worker nodes.

Coordinator

A coordinator (or orchestrator) node takes responsibility for:

Assigning jobs: Distributes jobs from the queue to the available worker nodes.

Managing worker nodes: Tracks the status, health, capacity, and workload of active workers.

Handling worker node failures: Detects when a worker node fails and reassigns its jobs to other healthy nodes.

Load balancing: Ensures the workload is evenly distributed across worker nodes based on available resources and capacity.

Worker Nodes

Worker nodes are responsible for executing jobs and updating the Job Store with the results (e.g., completed, failed, output).

When a worker is assigned a job, it creates a new entry in the Job Execution Table with the job’s status set to running and begins execution.

After execution is finished, the worker updates the job’s final status (e.g., completed or failed) along with any output in both the Job Table and the Job Execution Table.

If a worker fails during execution, the coordinator re-queues the job in the distributed job queue, allowing another worker to pick it up and complete it.
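The worker lifecycle described above (create an execution record, run the job, write back the final status) can be sketched as follows. Plain dictionaries stand in for the Job Table and Job Execution Table, and `run_job` and the `task` callable are hypothetical names introduced for illustration.

```python
import uuid


def run_job(jobs, executions, job_id, worker_id, task):
    """Record a new execution attempt, run the task, and store the final status."""
    execution_id = str(uuid.uuid4())
    executions[execution_id] = {
        "job_id": job_id,
        "worker_id": worker_id,
        "status": "running",
        "error_message": None,
        "output": None,
    }
    jobs[job_id]["status"] = "running"
    try:
        output = task(jobs[job_id]["payload"])
        final_status, error = "completed", None
    except Exception as exc:  # any task error counts as a failed attempt
        output, final_status, error = None, "failed", str(exc)
    # Write the result to both the execution record and the job record.
    executions[execution_id].update(
        status=final_status, error_message=error, output=output)
    jobs[job_id]["status"] = final_status
    return execution_id


jobs = {
    "j1": {"payload": {"n": 2}, "status": "SCHEDULED"},
    "j2": {"payload": {}, "status": "SCHEDULED"},
}
executions = {}
ok_id = run_job(jobs, executions, "j1", "w001", lambda p: p["n"] * 2)
bad_id = run_job(jobs, executions, "j2", "w001", lambda p: p["n"] * 2)  # missing key, so it fails
```

Because each attempt gets its own execution record, a retried job leaves a full history of attempts while the job record always reflects the latest state.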

3. System API Design

Here are some of the important APIs we can have in our system.

1. Submit Job (POST /jobs)

2. Get Job Status (GET /jobs/{job_id})

3. Cancel Job (DELETE /jobs/{job_id})

4. List Pending Jobs (GET /jobs?status=pending&user_id=u003)

5. Get Jobs Running on a Worker (GET /job/executions?worker_id=w001)

4. Deep Dive into Key Components

4.1 SQL vs NoSQL

To choose the right database for our needs, let's consider some factors that can affect our choice:

We need to store millions of jobs every day.

Read and write volumes are roughly equal.

Data is structured with a fixed schema.

We don’t require ACID transactions or complex joins.

Both SQL and NoSQL databases could meet these needs, but given the scale and nature of the workload, a NoSQL database like DynamoDB or Cassandra could be a better fit, especially when handling millions of jobs per day and supporting high-throughput writes and reads.

4.2 Scaling Scheduling Service

The Scheduling Service checks the Job Schedules Table every minute for pending jobs and pushes them to the job queue for execution.

For example, the following query retrieves all jobs due for execution at the current minute:

SELECT * FROM JobSchedulesTable WHERE next_run_time = 1726110000;

Optimizing reads from JobSchedulesTable: Since we are querying JobSchedulesTable using the next_run_time column, it’s a good idea to partition the table on the next_run_time column to efficiently retrieve all jobs that are scheduled to run at a specific minute.

If the number of jobs in any minute is small, a single node is enough.

However, during peak periods, such as when 50,000 jobs need to be processed in a single minute, relying on one node can lead to delays in execution.

The node may become overloaded and slow down, creating performance bottlenecks.

Additionally, having only one node introduces a single point of failure.

If that node becomes unavailable due to a crash or other issue, no jobs will be scheduled or executed until the node is restored, leading to system downtime.

To address this, we need a distributed architecture where multiple worker nodes handle job scheduling tasks in parallel, all coordinated by a central node.

But how can we ensure that jobs are not processed by multiple workers at the same time?

The solution is to divide jobs into segments. Each worker processes only a specific subset of jobs from the JobSchedulesTable by focusing on assigned segments.

This is achieved by adding an extra column called segment.

The segment column logically groups jobs (e.g., segment=1, segment=2, etc.), ensuring that no two workers handle the same job simultaneously.

A coordinator node manages the distribution of workload by assigning different segments to worker nodes.

It also monitors the health of the workers using heartbeats or health checks.

In cases of worker node failure, the addition of new workers, or spikes in traffic, the coordinator dynamically rebalances the workload by adjusting segment assignments.
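A rough sketch of segment assignment and rebalancing is shown below. The article does not say how a job's segment is chosen or how segments are distributed, so hashing the job ID and round-robin assignment are assumptions here, as are the names `segment_for`, `assign_segments`, and the segment count.

```python
import zlib

NUM_SEGMENTS = 8  # assumed; chosen to be larger than the expected worker count


def segment_for(job_id: str, num_segments: int = NUM_SEGMENTS) -> int:
    """Map a job ID to a stable segment in 1..num_segments using CRC32."""
    return zlib.crc32(job_id.encode("utf-8")) % num_segments + 1


def assign_segments(workers, num_segments: int = NUM_SEGMENTS) -> dict:
    """Round-robin segments over healthy workers so each segment has one owner."""
    if not workers:
        raise ValueError("at least one healthy worker is required")
    assignment = {worker: [] for worker in workers}
    for segment in range(1, num_segments + 1):
        owner = workers[(segment - 1) % len(workers)]
        assignment[owner].append(segment)
    return assignment


before = assign_segments(["w1", "w2", "w3"])
after = assign_segments(["w1", "w3"])  # w2 failed, so the coordinator rebalances
```

Since every segment has exactly one owner at a time, two workers never query the same rows. Round-robin reassigns many segments on each change; a scheme such as consistent hashing would move fewer segments when workers join or leave.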

Each worker node queries the JobSchedulesTable using both next_run_time and its assigned segments to retrieve the jobs it is responsible for processing.

Here's an example of a query a worker node might execute:

SELECT * FROM JobSchedulesTable WHERE next_run_time = 1726110000 AND segment IN (1,2);

4.3 Handling failure of Jobs

When a job fails during execution, the worker node increments the retry_count in the JobTable.

If the retry_count is still below the max_retries threshold, the worker retries the job from the beginning.

Once the retry_count reaches the max_retries limit, the job's status is updated to failed and it will not be executed again.

Note: After a job fails, the worker node should not immediately retry the job, especially if the failure was caused by a transient issue (e.g., network failure).

Instead, the system retries the job after a delay, which increases exponentially with each subsequent retry (e.g., 1 minute, 2 minutes, 4 minutes).
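The retry policy can be sketched as below. The one-minute base, the doubling factor, and the one-hour cap are assumptions chosen for illustration, and `backoff_delay`, `handle_failure`, and the `next_retry_at` field are hypothetical names.

```python
def backoff_delay(retry_count: int, base_seconds: int = 60,
                  cap_seconds: int = 3600) -> int:
    """Delay before retry number retry_count (1-based), doubling each time."""
    return min(cap_seconds, base_seconds * 2 ** (retry_count - 1))


def handle_failure(job: dict, now: int, max_retries: int = 3) -> dict:
    """Increment retry_count, then either schedule a delayed retry or give up."""
    job["retry_count"] += 1
    if job["retry_count"] >= max_retries:
        job["status"] = "failed"
        job["next_retry_at"] = None
    else:
        job["status"] = "pending"
        job["next_retry_at"] = now + backoff_delay(job["retry_count"])
    return job


job = {"job_id": "j1", "retry_count": 0, "status": "running"}
handle_failure(job, now=1000)   # first failure: retry in 60 seconds
first_retry_at = job["next_retry_at"]
handle_failure(job, now=2000)   # second failure: retry in 120 seconds
second_retry_at = job["next_retry_at"]
handle_failure(job, now=3000)   # third failure reaches max_retries
```

In practice a small random jitter is often added to each delay so that many jobs that failed together do not all retry at the same instant.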

4.4 Handling failure of Worker nodes in Execution Service

Worker nodes are responsible for executing jobs assigned to them by the coordinator in theExecution Service.

When a worker node fails, the system must detect the failure, reassign the pending jobs to healthy nodes, and ensure that jobs are not lost or duplicated.

There are several techniques for detecting failures:

Heartbeat Mechanism: Each worker node periodically sends a heartbeat signal to the coordinator (every few seconds). The coordinator tracks these heartbeats and marks a worker as "unhealthy" if it doesn’t receive a heartbeat for a predefined period (e.g., 3 consecutive heartbeats missed).

Health Checks: In addition to heartbeats, the coordinator can perform periodic health checks on each worker node. The health checks may include CPU, memory, disk space, and network connectivity to ensure the node is not overloaded.
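Heartbeat-based detection on the coordinator could look like this minimal sketch. The five-second interval and the `HeartbeatMonitor` class are assumptions; timestamps are passed in explicitly so the behavior is easy to follow and test.

```python
class HeartbeatMonitor:
    """Tracks the last heartbeat per worker and flags workers that went silent."""

    def __init__(self, interval_seconds: float = 5.0, missed_limit: int = 3):
        # A worker is unhealthy after missing `missed_limit` consecutive heartbeats.
        self.timeout = interval_seconds * missed_limit
        self.last_seen = {}

    def record(self, worker_id: str, now: float) -> None:
        """Called whenever a heartbeat arrives from a worker."""
        self.last_seen[worker_id] = now

    def unhealthy(self, now: float) -> list:
        """Return workers whose last heartbeat is older than the timeout."""
        return sorted(
            worker for worker, seen in self.last_seen.items()
            if now - seen > self.timeout
        )


monitor = HeartbeatMonitor(interval_seconds=5.0, missed_limit=3)
monitor.record("w1", now=0.0)
monitor.record("w2", now=10.0)
silent_workers = monitor.unhealthy(now=16.0)  # w1 has been silent for 16 seconds
```

Requiring several missed heartbeats rather than one reduces false positives from brief network delays, at the cost of slower detection.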

Once a worker failure is detected, the system needs to recover and ensure that jobs assigned to the failed worker are still executed.

There are two main scenarios to handle:

Pending Jobs (Not Started)

For jobs that were assigned to a worker but not yet started, the system needs to reassign these jobs to another healthy worker.

The coordinator should re-queue them to the job queue for another worker to pick up.

In-Progress Jobs

Jobs that were being executed when the worker failed need to be handled carefully to prevent partial execution or data loss.

One technique is to use job checkpointing, where a worker periodically saves the progress of long-running jobs to a persistent store (like a database). If the worker fails, another worker can restart the job from the last checkpoint.

If a job was partially executed but not completed, the coordinator should mark the job as "failed" and re-queue it to the job queue for retry by another worker.
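Checkpointing can be illustrated with a job that processes a list of items. In this sketch, the `checkpoints` dictionary is a hypothetical stand-in for the persistent store, and `fail_at` exists only to simulate a worker crash partway through.

```python
def process_items(job_id, items, checkpoints, handle, fail_at=None):
    """Process items in order, saving progress after each one so a retry can resume."""
    start = checkpoints.get(job_id, 0)  # index of the first unprocessed item
    for index in range(start, len(items)):
        if fail_at is not None and index == fail_at:
            raise RuntimeError("simulated worker crash")
        handle(items[index])
        checkpoints[job_id] = index + 1  # persist progress after each item
    return len(items) - start  # number of items handled in this run


processed = []
checkpoints = {}
items = ["a", "b", "c", "d"]

try:
    # First worker crashes before handling the item at index 2.
    process_items("job-1", items, checkpoints, processed.append, fail_at=2)
except RuntimeError:
    pass

# A second worker picks up the re-queued job and resumes from the checkpoint.
resumed_count = process_items("job-1", items, checkpoints, processed.append)
```

A crash between handling an item and saving the checkpoint would cause that item to be handled again on resume, so the per-item work should be idempotent.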

4.5 Addressing Single Points of Failure

We are using a coordinator node in both the Scheduling and Execution services.

To prevent the coordinator from becoming a single point of failure, deploy multiple coordinator nodes with a leader-election mechanism.

This ensures that one node is the active leader, while others are on standby. If the leader fails, a new leader is elected, and the system continues to function without disruption.

Leader Election: Use a consensus algorithm like Raft or Paxos to elect a leader from the pool of coordinators. Tools like ZooKeeper or etcd are commonly used for managing distributed leader elections.

Failover: If the leader coordinator fails, the other coordinators detect the failure and elect a new leader. The new leader takes over responsibilities immediately, ensuring continuity in job scheduling, worker management, and health monitoring.

Data Synchronization: All coordinators should have access to the same shared state (e.g., job scheduling data and worker health information). This can be stored in a distributed database (e.g., Cassandra, DynamoDB). This ensures that when a new leader takes over, it has the latest data to work with.
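The failover behavior can be illustrated with a lease-based sketch. Note that this is a simplified technique swapped in for illustration, not Raft or Paxos themselves: `LeaseStore` is a hypothetical in-memory stand-in for a strongly consistent store such as etcd or ZooKeeper, which would perform the check-and-set atomically across nodes.

```python
class LeaseStore:
    """Hypothetical single-lease store; whoever holds the lease is the leader."""

    def __init__(self):
        self.holder = None
        self.expires_at = 0.0

    def try_acquire(self, node_id: str, now: float, ttl: float = 10.0) -> bool:
        """Grant or renew the lease if it is free, expired, or already ours."""
        if self.holder is None or now >= self.expires_at or self.holder == node_id:
            self.holder = node_id
            self.expires_at = now + ttl
            return True
        return False


lease = LeaseStore()
results = [
    lease.try_acquire("c1", now=0.0),   # c1 becomes leader until t=10
    lease.try_acquire("c2", now=5.0),   # c2 stays on standby
    lease.try_acquire("c1", now=8.0),   # c1 renews, lease now expires at t=18
    lease.try_acquire("c2", now=17.0),  # still held by c1
    lease.try_acquire("c2", now=18.0),  # c1 stopped renewing, c2 takes over
]
```

The leader must renew well before the lease expires, and it should stop acting as leader as soon as a renewal fails, otherwise two coordinators could briefly believe they are in charge.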

4.6 Rate Limiting

Rate Limiting at the Job Submission Level

If too many job submissions are made to the scheduling system at once, the system may become overloaded, leading to degraded performance, timeouts, or even failure of the scheduling service.

Implement rate limits at the client level to ensure no single client can overwhelm the system.

For example, restrict each client to a maximum of 1,000 job submissions per minute.
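A per-client limit like this can be sketched with a fixed-window counter. This is one simple approach among several, and the `FixedWindowLimiter` class is a hypothetical name; the demo uses a limit of 3 so the behavior is easy to see.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Allows at most `limit` submissions per client in each time window."""

    def __init__(self, limit: int = 1000, window_seconds: int = 60):
        self.limit = limit
        self.window_seconds = window_seconds
        self.counts = defaultdict(int)  # (client_id, window index) -> count

    def allow(self, client_id: str, now: float) -> bool:
        """Return True and count the request if the client is under its limit."""
        window = int(now // self.window_seconds)
        key = (client_id, window)
        if self.counts[key] >= self.limit:
            return False
        self.counts[key] += 1
        return True


limiter = FixedWindowLimiter(limit=3, window_seconds=60)
first_window = [limiter.allow("client-a", now=t) for t in (0, 1, 2, 3)]
other_client = limiter.allow("client-b", now=3)
next_window = limiter.allow("client-a", now=60)
```

This sketch never evicts old windows, so a real implementation would expire counters, for example by keeping them in a cache with a time-to-live.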

Rate Limiting at the Job Queue Level

Even if the job submission rate is controlled, the system might be overwhelmed if the job queue (e.g., Kafka, RabbitMQ) is flooded with too many jobs, which can slow down worker nodes or lead to message backlog.

Limit the rate at which jobs are pushed into the distributed job queue. This can be achieved by implementing queue-level throttling, where only a certain number of jobs are allowed to enter the queue per second or minute.
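Queue-level throttling is often implemented with a token bucket, which permits short bursts while capping the sustained rate. The sketch below is one possible approach under that assumption; the `TokenBucket` class and its parameters are hypothetical.

```python
class TokenBucket:
    """Refills at `rate_per_second` up to `capacity`; each enqueue costs one token."""

    def __init__(self, rate_per_second: float, capacity: int, now: float = 0.0):
        self.rate = rate_per_second
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.updated_at = now

    def try_take(self, now: float) -> bool:
        """Refill based on elapsed time, then take a token if one is available."""
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated_at = max(self.updated_at, now)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


bucket = TokenBucket(rate_per_second=2.0, capacity=2, now=0.0)
burst = [bucket.try_take(now=0.0) for _ in range(3)]  # third push is throttled
after_refill = bucket.try_take(now=0.5)               # half a second adds one token
still_empty = bucket.try_take(now=0.5)
```

When `try_take` returns False, the scheduler can hold the job and try again on its next pass instead of dropping it.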

Rate Limiting at the Worker Node Level

If the system allows too many jobs to be executed simultaneously by worker nodes, it can overwhelm the underlying infrastructure (e.g., CPU, memory, database), causing performance degradation or crashes.

Implement rate limiting at the worker node level to prevent any single worker from taking on too many jobs at once.

Set maximum concurrency limits on worker nodes to control how many jobs each worker can execute concurrently.
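A maximum concurrency limit on a worker can be sketched with a bounded semaphore from Python's standard library. The `WorkerSlots` class is a hypothetical wrapper introduced for illustration.

```python
import threading


class WorkerSlots:
    """Caps how many jobs a single worker may run at the same time."""

    def __init__(self, max_concurrency: int):
        self._slots = threading.BoundedSemaphore(max_concurrency)

    def try_start(self) -> bool:
        """Claim a slot without blocking; False means the worker is at capacity."""
        return self._slots.acquire(blocking=False)

    def finish(self) -> None:
        """Release the slot when a job completes or fails."""
        self._slots.release()


slots = WorkerSlots(max_concurrency=2)
claims = [slots.try_start(), slots.try_start(), slots.try_start()]  # third is refused
slots.finish()                       # one job finishes
claim_after_finish = slots.try_start()
```

A worker that gets False from `try_start` should leave the job on the queue for another worker rather than buffering it locally.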

Thank you for reading!

I hope you have a lovely day!

See you soon,

Ashish