- Notifications

You must be signed in to change notification settings - Fork1

unit-mesh/autodev-codebase

Folders and files

| Name | Name | Last commit message | Last commit date | |

|---|---|---|---|---|

Repository files navigation

A platform-agnostic code analysis library with semantic search capabilities and MCP (Model Context Protocol) server support. This library provides intelligent code indexing, vector-based semantic search, and can be integrated into various development tools and IDEs.

- Semantic Code Search: Vector-based code search using embeddings

- MCP Server Support: HTTP-based MCP server for IDE integration

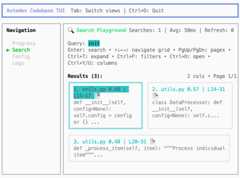

- Terminal UI: Interactive CLI with rich terminal interface

- Tree-sitter Parsing: Advanced code parsing and analysis

- Vector Storage: Qdrant vector database integration

- Flexible Embedding: Support for various embedding models via Ollama

# Install Ollama (macOS)brew install ollama# Start Ollama serviceollama serve# In a new terminal, pull the embedding modelollama pull dengcao/Qwen3-Embedding-0.6B:Q8_0

ripgrep is required for fast codebase indexing. Install it with:

# Install ripgrep (macOS)brew install ripgrep# Or on Ubuntu/Debiansudo apt-get install ripgrep# Or on Arch Linuxsudo pacman -S ripgrep

Start Qdrant using Docker:

# Start Qdrant containerdocker run -p 6333:6333 -p 6334:6334 qdrant/qdrantOr download and run Qdrant directly:

# Download and run Qdrantwget https://github.com/qdrant/qdrant/releases/latest/download/qdrant-x86_64-unknown-linux-gnu.tar.gztar -xzf qdrant-x86_64-unknown-linux-gnu.tar.gz./qdrant# Check Ollamacurl http://localhost:11434/api/tags# Check Qdrantcurl http://localhost:6333/collections

npm install -g @autodev/codebase

Alternatively, you can install it locally:

git clone https://github.com/anrgct/autodev-codebasecd autodev-codebasenpm installnpm run buildnpm linkThe CLI provides two main modes:

# Basic usage: index your current folder as the codebase.# Be cautious when running this command if you have a large number of files.codebase# With custom optionscodebase --demo# Create a local demo directory and test the indexing service, recommend for setupcodebase --path=/my/projectcodebase --path=/my/project --log-level=info

# Start long-running MCP servercd /my/projectcodebase mcp-server# With custom configurationcodebase mcp-server --port=3001 --host=localhostcodebase mcp-server --path=/workspace --port=3002

The library uses a layered configuration system, allowing you to customize settings at different levels. The priority order (highest to lowest) is:

- CLI Parameters (e.g.,

--model,--ollama-url,--qdrant-url,--config, etc.) - Project Config File (

./autodev-config.json) - Global Config File (

~/.autodev-cache/autodev-config.json) - Built-in Defaults

Settings specified at a higher level override those at lower levels. This lets you tailor the behavior for your environment or project as needed.

Config file locations:

- Global:

~/.autodev-cache/autodev-config.json - Project:

./autodev-config.json - CLI: Pass parameters directly when running commands

Create a global configuration file at~/.autodev-cache/autodev-config.json:

{"isEnabled":true,"embedder": {"provider":"ollama","model":"dengcao/Qwen3-Embedding-0.6B:Q8_0","dimension":1024,"baseUrl":"http://localhost:11434" },"qdrantUrl":"http://localhost:6333","qdrantApiKey":"your-api-key-if-needed","searchMinScore":0.4}Create a project-specific configuration file at./autodev-config.json:

{"embedder": {"provider":"openai-compatible","apiKey":"sk-xxxxx","baseUrl":"http://localhost:2302/v1","model":"openai/text-embedding-3-smallnpm","dimension":1536, },"qdrantUrl":"http://localhost:6334"}| Option | Type | Description | Default |

|---|---|---|---|

isEnabled | boolean | Enable/disable code indexing feature | true |

embedder.provider | string | Embedding provider (ollama,openai,openai-compatible) | ollama |

embedder.model | string | Embedding model name | dengcao/Qwen3-Embedding-0.6B:Q8_0 |

embedder.dimension | number | Vector dimension size | 1024 |

embedder.baseUrl | string | Provider API base URL | http://localhost:11434 |

embedder.apiKey | string | API key (for OpenAI/compatible providers) | - |

qdrantUrl | string | Qdrant vector database URL | http://localhost:6333 |

qdrantApiKey | string | Qdrant API key (if authentication enabled) | - |

searchMinScore | number | Minimum similarity score for search results | 0.4 |

Note: TheisConfigured field is automatically calculated based on the completeness of your configuration and should not be set manually. The system will determine if the configuration is valid based on the required fields for your chosen provider.

# Use global config defaultscodebase# Override model via CLI (highest priority)codebase --model="custom-model"# Use project config with CLI overridescodebase --config=./my-config.json --qdrant-url=http://remote:6333

--path=<path>- Workspace path (default: current directory)--demo- Create demo files in workspace--force- ignore cache force re-index--ollama-url=<url>- Ollama API URL (default:http://localhost:11434)--qdrant-url=<url>- Qdrant vector DB URL (default:http://localhost:6333)--model=<model>- Embedding model (default: nomic-embed-text)--config=<path>- Config file path--storage=<path>- Storage directory path--cache=<path>- Cache directory path--log-level=<level>- Log level: error|warn|info|debug (default: error)--log-level=<level>- Log level: error|warn|info|debug (default: error)--help, -h- Show help

--port=<port>- HTTP server port (default: 3001)--host=<host>- HTTP server host (default: localhost)

Configure your IDE to connect to the MCP server:

{"mcpServers": {"codebase": {"url":"http://localhost:3001/sse" } }}For clients that do not support SSE MCP, you can use the following configuration:

{"mcpServers": {"codebase": {"command":"codebase","args": ["stdio-adapter","--server-url=http://localhost:3001/sse" ] } }}- Home Page:

http://localhost:3001- Server status and configuration - Health Check:

http://localhost:3001/health- JSON status endpoint - MCP Endpoint:

http://localhost:3001/sse- SSE/HTTP MCP protocol endpoint

search_codebase- Semantic search through your codebase- Parameters:

query(string),limit(number),filters(object) - Returns: Formatted search results with file paths, scores, and code blocks

- Parameters:

# Development mode with demo filesnpm run dev# Build for productionnpm run build# Type checkingnpm run type-check# Run TUI demonpm run demo-tui# Start MCP server demonpm run mcp-server

Mainstream Embedding Models Performance

| Model | Dimension | Avg Precision@3 | Avg Precision@5 | Good Queries (≥66.7%) | Failed Queries (0%) |

|---|---|---|---|---|---|

| siliconflow/Qwen/Qwen3-Embedding-8B | 4096 | 76.7% | 66.0% | 5/10 | 0/10 |

| siliconflow/Qwen/Qwen3-Embedding-4B | 2560 | 73.3% | 54.0% | 5/10 | 1/10 |

| voyage/voyage-code-3 | 1024 | 73.3% | 52.0% | 6/10 | 1/10 |

| siliconflow/Qwen/Qwen3-Embedding-0.6B | 1024 | 63.3% | 42.0% | 4/10 | 1/10 |

| morph-embedding-v2 | 1536 | 56.7% | 44.0% | 3/10 | 1/10 |

| openai/text-embedding-ada-002 | 1536 | 53.3% | 38.0% | 2/10 | 1/10 |

| voyage/voyage-3-large | 1024 | 53.3% | 42.0% | 3/10 | 2/10 |

| openai/text-embedding-3-large | 3072 | 46.7% | 38.0% | 1/10 | 3/10 |

| voyage/voyage-3.5 | 1024 | 43.3% | 38.0% | 1/10 | 2/10 |

| voyage/voyage-3.5-lite | 1024 | 36.7% | 28.0% | 1/10 | 2/10 |

| openai/text-embedding-3-small | 1536 | 33.3% | 28.0% | 1/10 | 4/10 |

| siliconflow/BAAI/bge-large-en-v1.5 | 1024 | 30.0% | 28.0% | 0/10 | 3/10 |

| siliconflow/Pro/BAAI/bge-m3 | 1024 | 26.7% | 24.0% | 0/10 | 2/10 |

| ollama/nomic-embed-text | 768 | 16.7% | 18.0% | 0/10 | 6/10 |

| siliconflow/netease-youdao/bce-embedding-base_v1 | 1024 | 13.3% | 16.0% | 0/10 | 6/10 |

Ollama-based Embedding Models Performance

| Model | Dimension | Precision@3 | Precision@5 | Good Queries (≥66.7%) | Failed Queries (0%) |

|---|---|---|---|---|---|

| ollama/dengcao/Qwen3-Embedding-4B:Q4_K_M | 2560 | 66.7% | 48.0% | 4/10 | 1/10 |

| ollama/dengcao/Qwen3-Embedding-0.6B:f16 | 1024 | 63.3% | 44.0% | 3/10 | 0/10 |

| ollama/dengcao/Qwen3-Embedding-0.6B:Q8_0 | 1024 | 63.3% | 44.0% | 3/10 | 0/10 |

| ollama/dengcao/Qwen3-Embedding-4B:Q8_0 | 2560 | 60.0% | 48.0% | 3/10 | 1/10 |

| lmstudio/taylor-jones/bge-code-v1-Q8_0-GGUF | 1536 | 60.0% | 54.0% | 4/10 | 1/10 |

| ollama/dengcao/Qwen3-Embedding-8B:Q4_K_M | 4096 | 56.7% | 42.0% | 2/10 | 2/10 |

| ollama/hf.co/nomic-ai/nomic-embed-code-GGUF:Q4_K_M | 3584 | 53.3% | 44.0% | 2/10 | 0/10 |

| ollama/bge-m3:f16 | 1024 | 26.7% | 24.0% | 0/10 | 2/10 |

| ollama/hf.co/nomic-ai/nomic-embed-text-v2-moe-GGUF:f16 | 768 | 26.7% | 20.0% | 0/10 | 2/10 |

| ollama/granite-embedding:278m-fp16 | 768 | 23.3% | 18.0% | 0/10 | 4/10 |

| ollama/unclemusclez/jina-embeddings-v2-base-code:f16 | 768 | 23.3% | 16.0% | 0/10 | 5/10 |

| lmstudio/awhiteside/CodeRankEmbed-Q8_0-GGUF | 768 | 23.3% | 16.0% | 0/10 | 5/10 |

| lmstudio/wsxiaoys/jina-embeddings-v2-base-code-Q8_0-GGUF | 768 | 23.3% | 16.0% | 0/10 | 5/10 |

| ollama/dengcao/Dmeta-embedding-zh:F16 | 768 | 20.0% | 20.0% | 0/10 | 6/10 |

| ollama/znbang/bge:small-en-v1.5-q8_0 | 384 | 16.7% | 16.0% | 0/10 | 6/10 |

| lmstudio/nomic-ai/nomic-embed-text-v1.5-GGUF@Q4_K_M | 768 | 16.7% | 14.0% | 0/10 | 6/10 |

| ollama/nomic-embed-text:f16 | 768 | 16.7% | 18.0% | 0/10 | 6/10 |

| ollama/snowflake-arctic-embed2:568m:f16 | 1024 | 16.7% | 18.0% | 0/10 | 5/10 |

About

Run the embedding codebase as a local MCP service to index code in your folder. Supports tools like Claude Code and Gemini CLI.

Resources

Uh oh!

There was an error while loading.Please reload this page.

Stars

Watchers

Forks

Packages0

Languages

- TypeScript92.4%

- JavaScript7.6%