- Notifications

You must be signed in to change notification settings - Fork27

A compact Constrained Model Predictive Control (MPC) library with Active Set based Quadratic Programming (QP) solver for Teensy4/Arduino system (or any real time embedded system in general)

License

pronenewbits/Arduino_Constrained_MPC_Library

Folders and files

| Name | Name | Last commit message | Last commit date | |

|---|---|---|---|---|

Repository files navigation

This is a compactsub-millisecond Constrained (linear) Model Predictive Control (MPC) library for Teensy4.0/Arduino system (or real time embedded system in general).

- It's not using Eigen (small source code - more simple to understand).

- It's not using C++ Standard Library/std (for embedded consideration).

- If you set

SYSTEM_IMPLEMENTATIONtoSYSTEM_IMPLEMENTATION_EMBEDDED_NO_PRINTinkonfig.h, the code is platform agnostic (not using any library beside these C header files:stdlib.h,stdint.h,stdbool.h,string.h, andmath.h). - There's no malloc/new/free dynamic memory allocation for real time application (but using heavy stack local variables, so you need to run it through static memory analyzer if you are really concerned about implement this in mission critical hard real time application).

The unconstrained MPC version can be found inmy other repository.

This is the continuation of my previous repoArduino_Unconstrained_MPC_Library, so you should read it before continue. As with my other repo, the main goal is for the student to learn the MPC concept (I've made decision to sacrifice speed to get best code readability I could get) while still capable of tackling real-time control system implementation. For non-trivia application, the code is computed in around886 us for naive implementation,327 us for the optimized version, and127 us for the optimized version without matrix bounds checking (even as low as13-28 us for a lower quality control output)! SeeSome Benchmark section below.

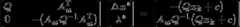

To recap, the MPC formula derivation can be described as (I'm using Jan Maciejowski'sPredictive Control with Constraints as reference, great book btw) :

As we can see here, the formulation of constrained MPC is exactly the same asthe unconstrained version. The difference is on the optimal control problem definition where the constrained version, well, has constraints.

As we can see here, the formulation of constrained MPC is exactly the same asthe unconstrained version. The difference is on the optimal control problem definition where the constrained version, well, has constraints.

We have 3 type of constraints:

constraint (i.e.

constraint (i.e. ), or the actuator slew rate constraint.

), or the actuator slew rate constraint. constraint (i.e.

constraint (i.e. ), or the actuator output constraint.

), or the actuator output constraint. constraint (i.e.

constraint (i.e. ), or the system output constraint.

), or the system output constraint.

Remark: To make the explanation simple and complete, in this implementation I implemented all constraints. But in the real implementation, you don't need to. For example, for you can implement the minimum constraint only (without the maximum constraint)

you can implement the minimum constraint only (without the maximum constraint) . Or for example if you have 3 input, you can limit only the second input

. Or for example if you have 3 input, you can limit only the second input and set free the other.Actually it is preferred (best practice) to implement hard-contraints as little as possible to ensure maximum feasible search space.

and set free the other.Actually it is preferred (best practice) to implement hard-contraints as little as possible to ensure maximum feasible search space.

The constraints formulation can be described as:

Then we can describe the full formulation of constrained MPC problem:

Note: From this point onward, sometimes I'll useMPC subscript to denote MPC variables andQP subscript to denote Quadratic Programming solver variables. But if the context is known (e.g. In the MPC subsection/Active Set subsection), I'll drop the subscript to shorten the explanation.

In the formulation above, means when we call the QP solver from MPC subroutine, we need to scale the MPC variables accordingly

means when we call the QP solver from MPC subroutine, we need to scale the MPC variables accordingly .

.

In this implementation, I use (one ofseveral) QP solver calledActive Set methods (ASM). The big idea of active set algorithm is searching the optimal value by solving the QP problem (with inequality constraints) as QP problem with equality constraints (EQP). More concretely, we search

value by solving the QP problem (with inequality constraints) as QP problem with equality constraints (EQP). More concretely, we search by iterating through EQP subproblem, and construct the EQP subproblem (more specifically the constraint matrix) from the set of QP constraints that is active at that iteration (hence the name).

by iterating through EQP subproblem, and construct the EQP subproblem (more specifically the constraint matrix) from the set of QP constraints that is active at that iteration (hence the name).

In the wild, many recent reseach are using the more 'sexy' solver likeInterior Point methods (IPM) because the IPM is faster for big QP problem. But in our case (i.e. MPC implementation for embedded system) we mainly deal with small (less than ten) state variables/actuator and small (less than twenty) prediction horizon (we're limited by the RAM size after all). In that case, the ASM will be faster than IPM. And by exploiting the MPC structure, we can reduce the computation time even further while increase the numerical stability (seeActive Set note: Solving the Linear Equation of KKT system subsection andThe MPC Implementation subsection below).

On theWikipedia page, the general structure of an active set algorithm is:

Find a feasible starting pointrepeat until "optimal enough" solve the equality problem defined by the active set (approximately) compute the Lagrange multipliers of the active set remove a subset of the constraints with negative Lagrange multipliers search for infeasible constraintsend repeatUsing above structure, the active set algorithm used in this implementation can be described as:

Remark: If the global optimum is inside the feasible region (i.e. the global optimum doesn't violate any constraints) and is not on the edge of any constraint, then it means there is no constraint active and the active set matrix disappear. The KKT system equation simply becomes:

disappear. The KKT system equation simply becomes:

As , then the optimal control calculation becomes

, then the optimal control calculation becomes which exactly is the equation forunconstrained MPC! With this we can prove that the constrained MPC using active set solver is a generalization of unconstrained MPC (math is pretty nifty huh).

which exactly is the equation forunconstrained MPC! With this we can prove that the constrained MPC using active set solver is a generalization of unconstrained MPC (math is pretty nifty huh).

The termination condition on the last line of algorithm above is calledKarush–Kuhn–Tucker conditions. As the condition is applied on the solution of the KKT system on EQP subproblem (first line on iteration), then we can move the termination check just after we solve the KKT system.

is calledKarush–Kuhn–Tucker conditions. As the condition is applied on the solution of the KKT system on EQP subproblem (first line on iteration), then we can move the termination check just after we solve the KKT system.

The simplest way to implement the active set is by using an array of boolean, where the size of the array is as big as the number of constraints. That way, the operation of adding or removing thei-th constraint into/from

is by using an array of boolean, where the size of the array is as big as the number of constraints. That way, the operation of adding or removing thei-th constraint into/from (i.e. the

(i.e. the and

and operations) simply becomes the operation of setting thei-th value of the array as

operations) simply becomes the operation of setting thei-th value of the array astrue orfalse, accordingly.

todo: calculate the starting point using linear programming. For now we set the starting point as zero (ok if the null space of x is inside the feasible search space, e.g. du_mpc,min < 0 and du_mpc,max > 0).

The most obvious way to solve the KKT system is by inverting the LHS matrix of the KKT equation (e.g. using Gauss-Jordan elimination):

Another not so obvious but very efficient solution is by usingSchur complement of the LHS matrix. Exploiting the fact that we can make the matrix as a symmetric positive definite matrix, and moreover, a constant matrix.

matrix as a symmetric positive definite matrix, and moreover, a constant matrix.

Both approach to solve the KKT system is implemented below (asnaive implementation andSchur complement implementation).

Unlike unconstrained MPC (where the solver isbasically a gain matrix), the constrained MPC solver use iteration to calculate the optimal control value (QP in worst case is NP-hard). It means in a real-time system, in worst case we need to terminate the iteration before the optimal control value can be calculated.

The nifty thing about ASM is that once we have optimal variable candidate that is inside feasible region, the next iteration of the variable

candidate that is inside feasible region, the next iteration of the variable will be both:a) Also inside the feasible region; andb) More optimal than the previous iteration.

will be both:a) Also inside the feasible region; andb) More optimal than the previous iteration.

Using that fact, we can easily add another termination condition beside KKT condition

beside KKT condition to make sure the MPC calculation still satisfy the real-time requirement. The other option is to use a real-time timer as the termination condition. We then can use the not-the-most-optimal-but-still-feasible solution as the controller output.

to make sure the MPC calculation still satisfy the real-time requirement. The other option is to use a real-time timer as the termination condition. We then can use the not-the-most-optimal-but-still-feasible solution as the controller output.

The Active Set pseudo code then can be redescribed as:

For more explanation about ASM,these slides or these pages (here andhere) are quite good at explaining the mechanism behind active set for quadratic programming problem. But for more in-depth treatment of this algorithm, the best resource is of courseNocedal & Wright's Numerical Optimization, chapter 16. Just be aware, the tutorials I linked (and many QP formulation) use inequality constraint in the form while we use

while we use (just multiply the inequality with

(just multiply the inequality with to change from one form to another).

to change from one form to another).

The implementations of the MPC control calculation consist of two main implementations, each of the implementation is self contained in each folder and calculate the same control output (theoretically).

The Naive Implementation algorithm is just a direct implementation of the MPC derivation above. The MPC algorithm can be described as (the source code can be found in "mpc_constrained_naive_engl" folder, especially see "mpc.cpp" file):

The active set subroutine is exactlythe same as explained above where the KKT solution is calculated by matrix inversion (the code is also inside "mpc.cpp" file):

There are several things we can do to lower the computation cost:

- The LHS of constraint calculation at (MPC_5a)-(MPC_5c) of naive implementation is constant. We should move them into MPC initialization step.

- (Some of calculation inside)

Gmatrix at (MPC_4) of naive implementation (specifically the portion) is actually constant. We should move them into MPC initialization step.

portion) is actually constant. We should move them into MPC initialization step. - If we limit the selection of the MPC

QandRweight matrices as diagonal and all its entry positive (in fact we've been doing it sincethe unconstrained implementation), then is also a symmetric positive definite matrix (here I denote

is also a symmetric positive definite matrix (here I denoteQandRweight matrices withMPCsubscript to differentiate them withQmatrix of QP solver).

Based on the last point, we can speed up the solution calculation of the KKT system:

First we apply block Gaussian elimination to the KKT system using as the pivot. Then the KKT system will becomes:

as the pivot. Then the KKT system will becomes:

Where the left matrix of the LHS equation is theSchur complement of , and the solution of KKT system then can be calculated as:

, and the solution of KKT system then can be calculated as:

At first glance, the calculation become more complicated than the naive inversion, but:

- The

matrix is constant, so also its inverse.

matrix is constant, so also its inverse. - As the

is positive definite, so is the matrix

is positive definite, so is the matrix . It means we can useCholesky decomposition to solve the first equation instead of calculating the inversion of it. Whenever applicable, the Cholesky decomposition is faster and more stable numerically than Gaussian elimination.

. It means we can useCholesky decomposition to solve the first equation instead of calculating the inversion of it. Whenever applicable, the Cholesky decomposition is faster and more stable numerically than Gaussian elimination. - In many cases, the active set matrix

dimension is smaller than

dimension is smaller than dimension, so the matrix

dimension, so the matrix will be smaller than

will be smaller than and obviously smaller than

and obviously smaller than , making the computation even more faster, relative to the naive inversion.

, making the computation even more faster, relative to the naive inversion. - For the MPC calculation, the KKT matrix

is the biggest matrix constructed. By avoid constructing it, we can reduce the memory used by the MPC.

is the biggest matrix constructed. By avoid constructing it, we can reduce the memory used by the MPC.

* Asstated on the unconstrained version, if we don't take care of it, the (although a constant) is often ill conditioned. So care must be taken to make sure the

(although a constant) is often ill conditioned. So care must be taken to make sure the will behave as good as possible.

will behave as good as possible.

The MPC algorithm then can be described as (the source code can be found in "mpc_constrained_schur_engl" folder, especially see "mpc.cpp" file):

Where the active set subroutine can be described as (the code is also inside "mpc.cpp" file):

The MPC code is self contained and can be accessed in the foldermpc_constrained_naive_engl ormpc_constrained_schur_engl. Inside you will find these files:

matrix.h/cpp: The backbone of all my code in this account. This files contain the class for Matrix operation.mpc.h/cpp: The source files of the MPC Class.konfig.h: The configuration file.mpc_constrained_*_engl.ino: The arduino main file (for naive/schur version).

For custom implementation, typically you only need to modifykonfig.h and*.ino files. Where basically you need to:

- Set the length of

X, U, Zvectors and sampling timedtinkonfig.h, depend on your model. - Set the MPC parameters like

Hp (Prediction Horizon)orHu (Control Horizon)inkonfig.h, depend on your application. - Enable/disable and set the MPC constraints parameters like

DU,U, orZinkonfig.h, depend on your application. - Define the (linear) matrix system

A, B, Cand MPC initialization valueweightQ, weightRin the*.inofile.

After that, you only need to initialize the MPC class, set the non-zero initialization matrix by callingMPC::vReInit(A, B, C, weightQ, weightR) function at initialization, and call the functionMPC::bUpdate(SP, x, u) at every sampling time to calculate the control valueu(k).

*For Arduino configuration (SYSTEM_IMPLEMENTATION is set toSYSTEM_IMPLEMENTATION_EMBEDDED_ARDUINO inkonfig.h):The code is tested on compiler Arduino IDE 1.8.10 and hardware Teensy 4.0 Platform.

*For PC configuration (SYSTEM_IMPLEMENTATION is set toSYSTEM_IMPLEMENTATION_PC inkonfig.h):The code is tested on compiler Qt Creator 4.8.2 and typical PC Platform.

Important note: For Teensy 4.0, I encounter RAM limitation where theMATRIX_MAXIMUM_SIZE can't be more than 28 (if you are using double precision) or 40 (if using single precision). If you already set more than that, your Teensy might be unable to be programmed (stack overflow make the bootloader program goes awry?). The solution is simply to change theMATRIX_MAXIMUM_SIZE to be less than that, compile & upload the code from the compiler. The IDE then will protest that it cannot find the Teensy board. DON'T PANIC. Click the program button on the Teensy board to force the bootloader to restart and download the firmware from the computer.

(The maximum matrix size 28 very much limit theHP, Hu, and constraints implementation, I guess500 kB of Teensy stack is not enough for constrained MPC huh...)next step: implementmemory pool for Matrix library!

To demonstrate the code, I've made the MPC control a state-space model (HIL style) for Jet Transport Aircraft (ref:https://www.mathworks.com/help/control/ug/mimo-state-space-models.html#buv3tp8-1), where the configuration is (4 state, 2 input, 2 output LTI system) + Hp=4 & Hu=3. The compiler is Arduino IDE 1.8.10 with default setting (compiler optimization setting: faster) and the hardware is Teensy 4.0. Note that I'm somewhat 'cheating' by using the state variablex directly from the HIL system into the MPC calculation (in the real world it should be inferred using some kind of observer. But for benchmark purpose, the result should be the same).

These are the results of simulating the model with several constraint configurations (the calculation benchmark below):

- The result for no-constraint. The MPC behave likethe unconstrained version.

- The result with slew rate constraints added

: Maximum 4 active-set iterations. Take attention that the

: Maximum 4 active-set iterations. Take attention that the signals become slower to change.

signals become slower to change.

- The result when slew rate constraintsand output constrains added

: Maximum 5 active-set iterations. Take attention that beside the

: Maximum 5 active-set iterations. Take attention that beside the signals become slower to change, the output

signals become slower to change, the output at ~300th sampling time don't overshoot anymore.

at ~300th sampling time don't overshoot anymore.

All result are plotted usingScilab.

The most interesting result came from analyzing the computation needed by the MPC, the data are collected using this code snippet:

u64compuTime = micros();MPC_HIL.bUpdate(SP, x, u);u64compuTime = (micros() - u64compuTime); _maxu64compuTime < u64compuTime? _maxu64compuTime = u64compuTime: 0;The benchmark data variable_maxu64compuTime, in microseconds (lower is better):

(Nice)

As can be seen here, the optimized version using Schur complement implementation gives computational boost up to 2 to 3 times compared the naive version. Even if we optimize the naive version by doingthe first two optimization of the second implementation (moving the LHS constraint and some ofG matrix calculation into initialization step), we can only cut the computation time around 40 us (try it!). It means the bulk of the speed up came from the choice of the KKT system solver. Note that using Schur complement method (some publication called it 'Range-space methods') is not the only way to solve the KKT system of EQP, there are Null-Space methods (where the matrix doesn't need to be positive definite), Conjugate gradient method, etc. Refer to mathematical optimization textbooks to read more on this topic.

matrix doesn't need to be positive definite), Conjugate gradient method, etc. Refer to mathematical optimization textbooks to read more on this topic.

The interesting thing happens when we disabled the matrix bounds checking inkonfig.h (if you customize/change the plant system, you need to harden the system before disable it), we can get further increase throughput 2 to 4 times compared with bounds checking enabled, where the best result is127 us for a fully functioning constrained MPC. That means this MPC library is capable to tackle a reasonably complex very fast system at several hundred (or even thousand) Hz sampling rate (and in a $20 hardware form!).

Using static analyzer, the biggest matrix constructed is matrix where the worst-case size is

matrix where the worst-case size is(2xHuxM) + (2xHpxZ) when we enable constraints. The matrix maximum size will be

constraints. The matrix maximum size will be(2x4x2)+(2x3x2) = 28. This is theMATRIX_MAXIMUM_SIZE value we used in this benchmark.

Note that when we use the naive version, the worst case matrix size is actually the dimension of which is

which is(HpxZ) + (2xHuxM) + (2xHpxZ) or 36 (we don't use it here because of stack overflow).

Sometimes I just want to see how far I can push this system to the extreme. Using the same model (Jet Transport Aircraft), the lowestHp &Hu values that still capable to tracking the set point areHp=3 andHu=1 (with degraded transient response). The biggest matrix is with

with constraints at the size

constraints at the size(2*Hu*M)+(2*Hp*Z) x (Hu*M) = (2*1*2)+(2*3*2)x(1*2) = 16x2 matrix.

The benchmark data variable_maxu64compuTime, in microseconds are (lower is better):

| Constraints Configuration | Maximum Active Set iteration | Implementation using Schur Complement | Schur Complement without Bounds Checking |

|---|---|---|---|

| No-constraint | 1 | 11 us | 4 us |

| 2 | 26 us | 13 us |

| 2 | 52 us | 28 us |

(Noice) With this we're entering the tens to (almost) hundred kHz application (*⌄*)

This is the result:And when zoomed for and

and constraints (the MPC still able to control the system while still comply with the constraints):

constraints (the MPC still able to control the system while still comply with the constraints):

Note that this benchmark still usingzero vector as the ASM starting value. Expect additional 20-30% of computation time when we use linear programming to search for the starting point (will be implemented soon!).

I hope you can test & validate my result or inform me if there are some bugs / mathematical error you encounter along the way! (or if you notice some grammar error in the documentation).

I published the code under CC0 license, effectively placed the code on public domain. But it will be great if you can tell me if you use the code, for what/why. That means a lot to me and give me motivation to expand the work (⌒▽⌒)

About

A compact Constrained Model Predictive Control (MPC) library with Active Set based Quadratic Programming (QP) solver for Teensy4/Arduino system (or any real time embedded system in general)

Topics

Resources

License

Uh oh!

There was an error while loading.Please reload this page.