The fastest JavaScript BPE Tokenizer Encoder Decoder for OpenAI's GPT models (gpt-5, gpt-o*, gpt-4o, etc.). Port of OpenAI's tiktoken with additional features.

niieani/gpt-tokenizer

gpt-tokenizer is a Token Byte Pair Encoder/Decoder supporting all of OpenAI's models (including GPT-5, GPT-4o, o1, o3, o4, GPT-4.1 and older models like GPT-3.5, GPT-4). It's the fastest, smallest and lowest footprint GPT tokenizer available for all JavaScript environments and is written in TypeScript.

Try it out in the playground!

This library has been trusted by:

- CodeRabbit (sponsor 🩷)

- Microsoft (Teams, GenAIScript)

- Elastic (Kibana)

- Effect TS

- Rivet by Ironclad

Please consider 🩷 sponsoring the project if you find it useful.

It is the most feature-complete, open-source GPT tokenizer on NPM. This package is a port of OpenAI's tiktoken, with some additional, unique features sprinkled on top:

- Support for easily tokenizing chats thanks to the `encodeChat` function

- Support for all current OpenAI models (available encodings: `r50k_base`, `p50k_base`, `p50k_edit`, `cl100k_base` and `o200k_base`)

- Can be loaded and work synchronously! (i.e. in non async/await contexts)

- Generator function versions of both the decoder and encoder functions

- Provides the ability to decode an asynchronous stream of data (using `decodeAsyncGenerator` and `decodeGenerator` with any iterable input)

- No global cache (no accidental memory leaks, as with the original GPT-3-Encoder implementation)

- Includes a highly performant `isWithinTokenLimit` function to assess token limit without encoding the entire text/chat

- Built-in cost estimation with the `estimateCost` function for calculating API usage costs

- Full library of OpenAI models with comprehensive pricing information (see `src/models.ts` and `src/models.gen.ts`)

- Improves overall performance by eliminating transitive arrays

- Type-safe (written in TypeScript)

- Works in the browser out-of-the-box

Install the package from NPM:

```shell
npm install gpt-tokenizer
```

Or load it in the browser via a script tag:

```html
<script src="https://unpkg.com/gpt-tokenizer"></script>

<script>
  // the package is now available as a global:
  const { encode, decode } = GPTTokenizer_cl100k_base
</script>
```

If you wish to use a custom encoding, fetch the relevant script.

- https://unpkg.com/gpt-tokenizer/dist/o200k_base.js (for all modern models, such as `gpt-5`, `gpt-4o`, `gpt-4.1`, `o1` and others)

- https://unpkg.com/gpt-tokenizer/dist/cl100k_base.js (for `gpt-4` and `gpt-3.5`)

- https://unpkg.com/gpt-tokenizer/dist/p50k_base.js

- https://unpkg.com/gpt-tokenizer/dist/p50k_edit.js

- https://unpkg.com/gpt-tokenizer/dist/r50k_base.js

The global name is a concatenation: `GPTTokenizer_${encoding}`.
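As a small illustration of that naming rule, the sketch below builds the global name and the unpkg script URL for a given encoding. The helper `umdBundleFor` and the type `BundleEncoding` are hypothetical names used only for this example and are not part of the library; the URL pattern is copied from the list above.

```typescript
// Hypothetical helper, not a gpt-tokenizer export.
type BundleEncoding =
  | 'o200k_base'
  | 'cl100k_base'
  | 'p50k_base'
  | 'p50k_edit'
  | 'r50k_base'

function umdBundleFor(encoding: BundleEncoding): {
  globalName: string
  scriptUrl: string
} {
  return {
    // Global name follows the documented pattern GPTTokenizer_${encoding}
    globalName: `GPTTokenizer_${encoding}`,
    // Script URL follows the pattern of the per-encoding bundles listed above
    scriptUrl: `https://unpkg.com/gpt-tokenizer/dist/${encoding}.js`,
  }
}

const bundle = umdBundleFor('o200k_base')
console.log(bundle.globalName, bundle.scriptUrl)
```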

Refer to the supported models and their encodings section for more information.

The playground is published under a memorable URL: https://gpt-tokenizer.dev/

The library provides various functions to transform text into (and from) a sequence of integers (tokens) that can be fed into an LLM. The transformation is done using the Byte Pair Encoding (BPE) algorithm used by OpenAI.
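To give a feel for what a BPE merge loop does, here is a toy sketch. It is not the library's implementation and does not use OpenAI's real merge tables: `toyBpe` and `toyMerges` are made-up names, the merge ranks are invented, and it works on characters, whereas real encoders work on bytes and map each final piece to an integer token id.

```typescript
// Toy byte pair encoding over characters.
// `merges` maps a pair written as "left right" to its rank; lower ranks merge first.
function toyBpe(word: string, merges: Map<string, number>): string[] {
  let parts = word.split('')
  while (parts.length > 1) {
    let bestIndex = -1
    let bestRank = Infinity
    // Find the adjacent pair with the lowest (highest priority) rank.
    for (let i = 0; i < parts.length - 1; i++) {
      const rank = merges.get(parts[i] + ' ' + parts[i + 1])
      if (rank !== undefined && rank < bestRank) {
        bestRank = rank
        bestIndex = i
      }
    }
    if (bestIndex === -1) break // no known pair left to merge
    // Replace the chosen pair with its concatenation.
    parts = [
      ...parts.slice(0, bestIndex),
      parts[bestIndex] + parts[bestIndex + 1],
      ...parts.slice(bestIndex + 2),
    ]
  }
  return parts
}

// Invented merge table for illustration only.
const toyMerges = new Map<string, number>([
  ['l l', 0],
  ['h e', 1],
  ['ll o', 2],
  ['he llo', 3],
])

console.log(toyBpe('hello', toyMerges)) // [ 'hello' ]
console.log(toyBpe('help', toyMerges)) // [ 'he', 'l', 'p' ]
```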

```typescript
import {
  encode,
  encodeChat,
  decode,
  isWithinTokenLimit,
  encodeGenerator,
  decodeGenerator,
  decodeAsyncGenerator,
  ALL_SPECIAL_TOKENS,
} from 'gpt-tokenizer'
// note: depending on the model, import from the respective file, e.g.:
// import {...} from 'gpt-tokenizer/model/gpt-4o'

const text = 'Hello, world!'
const tokenLimit = 10

// Encode text into tokens
const tokens = encode(text)

// Decode tokens back into text
const decodedText = decode(tokens)

// Check if text is within the token limit
// returns false if the limit is exceeded, otherwise returns the actual number of tokens (truthy value)
const withinTokenLimit = isWithinTokenLimit(text, tokenLimit)

// Allow special tokens when needed
const withinTokenLimitWithSpecial = isWithinTokenLimit(text, tokenLimit, {
  allowedSpecial: ALL_SPECIAL_TOKENS,
})

// Example chat:
const chat = [
  { role: 'system', content: 'You are a helpful assistant.' },
  { role: 'assistant', content: 'gpt-tokenizer is awesome.' },
] as const

// Encode chat into tokens
const chatTokens = encodeChat(chat)

// Check if chat is within the token limit
const chatWithinTokenLimit = isWithinTokenLimit(chat, tokenLimit)
const chatWithinTokenLimitWithSpecial = isWithinTokenLimit(chat, tokenLimit, {
  allowedSpecial: ALL_SPECIAL_TOKENS,
})

// Encode text using generator
for (const tokenChunk of encodeGenerator(text)) {
  console.log(tokenChunk)
}

// Decode tokens using generator
for (const textChunk of decodeGenerator(tokens)) {
  console.log(textChunk)
}

// Decode tokens using async generator
// (assuming `asyncTokens` is an AsyncIterableIterator<number>)
for await (const textChunk of decodeAsyncGenerator(asyncTokens)) {
  console.log(textChunk)
}
```

By default, importing from `gpt-tokenizer` uses the `o200k_base` encoding, used by all modern OpenAI models, including `gpt-4o`, `gpt-4.1`, `o1`, etc.

To get a tokenizer for a different model, import it directly, for example:

```typescript
import {
  encode,
  decode,
  isWithinTokenLimit,
  // etc...
} from 'gpt-tokenizer/model/gpt-3.5-turbo'
```

If you're dealing with a resolver that doesn't support `package.json` `exports` resolution, you might need to import from the respective `cjs` or `esm` directory, e.g.:

```typescript
import {
  encode,
  decode,
  isWithinTokenLimit,
  // etc...
} from 'gpt-tokenizer/cjs/model/gpt-3.5-turbo'
```

If you don't mind loading the tokenizer asynchronously, you can use a dynamic import inside your function, like so:

```typescript
const {
  encode,
  decode,
  isWithinTokenLimit,
  // etc...
} = await import('gpt-tokenizer/model/gpt-3.5-turbo')
```

If your model isn't supported by the package, but you know which BPE encoding it uses, you can load the encoding directly, e.g.:

```typescript
import {
  encode,
  decode,
  isWithinTokenLimit,
  // etc...
} from 'gpt-tokenizer/encoding/cl100k_base'
```

We support all OpenAI models, including the latest ones, with the following encodings:

- o-series models, like `o1-*`, `o3-*` and `o4-*` (`o200k_base`)

- `gpt-4o` (`o200k_base`)

- `gpt-4-*` (`cl100k_base`)

- `gpt-3.5-*` (`cl100k_base`)

- `text-davinci-003` (`p50k_base`)

- `text-davinci-002` (`p50k_base`)

- `text-davinci-001` (`r50k_base`)

- ...and many other models, see models.ts for an up-to-date list of supported models and their encodings.
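The mapping above can be pictured as a prefix lookup. The sketch below is only an illustration of that idea: `guessEncoding`, `EncodingName` and `prefixRules` are hypothetical names, the rules are transcribed from this document rather than from the library's model registry, and the fallback to `o200k_base` reflects the default encoding mentioned earlier. For real use, import the model-specific entry point instead.

```typescript
type EncodingName = 'o200k_base' | 'cl100k_base' | 'p50k_base' | 'r50k_base'

// Prefix rules transcribed from the list above. Order matters:
// more specific prefixes (gpt-4o, gpt-4.1) must come before gpt-4.
const prefixRules: Array<[string, EncodingName]> = [
  ['o1', 'o200k_base'],
  ['o3', 'o200k_base'],
  ['o4', 'o200k_base'],
  ['gpt-5', 'o200k_base'],
  ['gpt-4o', 'o200k_base'],
  ['gpt-4.1', 'o200k_base'],
  ['gpt-4', 'cl100k_base'],
  ['gpt-3.5', 'cl100k_base'],
  ['text-davinci-003', 'p50k_base'],
  ['text-davinci-002', 'p50k_base'],
  ['text-davinci-001', 'r50k_base'],
]

function guessEncoding(model: string): EncodingName {
  for (const [prefix, encoding] of prefixRules) {
    if (model.startsWith(prefix)) return encoding
  }
  // Assumption: unknown models fall back to the package default.
  return 'o200k_base'
}

console.log(guessEncoding('gpt-4o-mini')) // o200k_base
console.log(guessEncoding('gpt-4-turbo')) // cl100k_base
```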

If you don't see the model you're looking for, the default encoding is probably the one you want.

`encode` encodes the given text into a sequence of tokens. Use this method when you need to transform a piece of text into the token format that the GPT models can process.

The optional `encodeOptions` parameter allows you to specify special token handling (see special tokens).

Example:

```typescript
import { encode } from 'gpt-tokenizer'

const text = 'Hello, world!'
const tokens = encode(text)
```

`decode` decodes a sequence of tokens back into text. Use this method when you want to convert the output tokens from GPT models back into human-readable text.

Example:

```typescript
import { decode } from 'gpt-tokenizer'

const tokens = [18435, 198, 23132, 328]
const text = decode(tokens)
```

`isWithinTokenLimit(text: string | Iterable<ChatMessage>, tokenLimit: number, encodeOptions?: EncodeOptions): false | number`

Checks if the input is within the token limit. Returns `false` if the limit is exceeded, otherwise returns the number of tokens. Use this method to quickly check if a given text or chat is within the token limit imposed by GPT models, without encoding the entire input. The optional `encodeOptions` parameter lets you configure special token handling.

Example:

```typescript
import { isWithinTokenLimit, ALL_SPECIAL_TOKENS } from 'gpt-tokenizer'

const text = 'Hello, world!'
const tokenLimit = 10
const withinTokenLimit = isWithinTokenLimit(text, tokenLimit)
const withinTokenLimitWithSpecial = isWithinTokenLimit(text, tokenLimit, {
  allowedSpecial: ALL_SPECIAL_TOKENS,
})
```
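The idea of checking a limit without encoding the whole input can be sketched with a lazy generator that stops as soon as the limit is crossed. This is not the library's code: `toyTokens` is a hypothetical stand-in tokenizer that yields one "token" per whitespace-separated word, and `toyIsWithinLimit` only mirrors the documented `false | number` return shape.

```typescript
// Hypothetical stand-in for a real tokenizer: lazily yields one "token" per word.
function* toyTokens(text: string): Generator<string> {
  const wordPattern = /\S+/g
  let match: RegExpExecArray | null
  while ((match = wordPattern.exec(text)) !== null) {
    yield match[0]
  }
}

// Returns false once the limit is exceeded, otherwise the token count.
function toyIsWithinLimit(text: string, tokenLimit: number): false | number {
  let count = 0
  for (const _token of toyTokens(text)) {
    count++
    // Stop early: the remainder of the text is never tokenized.
    if (count > tokenLimit) return false
  }
  return count
}

console.log(toyIsWithinLimit('one two three', 3)) // 3
console.log(toyIsWithinLimit('one two three four', 3)) // false
```

In this sketch an empty input returns `0`, which is falsy, so comparing the result against `false` explicitly is a safer check than relying on truthiness.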

`countTokens` counts the number of tokens in the input text or chat. Use this method when you need to determine the number of tokens without checking against a limit. The optional `encodeOptions` parameter allows you to specify custom sets of allowed or disallowed special tokens.

Example:

```typescript
import { countTokens } from 'gpt-tokenizer'

const text = 'Hello, world!'
const tokenCount = countTokens(text)
```

`encodeChat` encodes the given chat into a sequence of tokens. The optional `encodeOptions` parameter lets you configure special token handling.

If you didn't import the model version directly, or if `model` wasn't provided during initialization, it must be provided here to correctly tokenize the chat for a given model. Use this method when you need to transform a chat into the token format that the GPT models can process.

Example:

```typescript
import { encodeChat } from 'gpt-tokenizer'

const chat = [
  { role: 'system', content: 'You are a helpful assistant.' },
  { role: 'assistant', content: 'gpt-tokenizer is awesome.' },
]
const tokens = encodeChat(chat)
```

Note that if you encode an empty chat, it will still contain the minimum number of special tokens.

`encodeGenerator` encodes the given text using a generator, yielding chunks of tokens. Use this method when you want to encode text in chunks, which can be useful for processing large texts or streaming data.

Example:

```typescript
import { encodeGenerator } from 'gpt-tokenizer'

const text = 'Hello, world!'
const tokens = []
for (const tokenChunk of encodeGenerator(text)) {
  tokens.push(...tokenChunk)
}
```

`encodeChatGenerator(chat: Iterator<ChatMessage>, model?: ModelName): Generator<number[], void, undefined>`

Same as `encodeChat`, but uses a generator as output, and may use any iterator as the input `chat`.

`decodeGenerator` decodes a sequence of tokens using a generator, yielding chunks of decoded text. Use this method when you want to decode tokens in chunks, which can be useful for processing large outputs or streaming data.

Example:

```typescript
import { decodeGenerator } from 'gpt-tokenizer'

const tokens = [18435, 198, 23132, 328]
let decodedText = ''
for (const textChunk of decodeGenerator(tokens)) {
  decodedText += textChunk
}
```

`decodeAsyncGenerator` decodes a sequence of tokens asynchronously using a generator, yielding chunks of decoded text. Use this method when you want to decode tokens in chunks asynchronously, which can be useful for processing large outputs or streaming data in an asynchronous context.

Example:

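```typescript
import { decodeAsyncGenerator } from 'gpt-tokenizer'

async function processTokens(asyncTokensIterator) {
  let decodedText = ''
  for await (const textChunk of decodeAsyncGenerator(asyncTokensIterator)) {
    decodedText += textChunk
  }
  // Return the accumulated text so the caller can use it
  return decodedText
}
```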
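One reason chunked decoding needs care is that byte-level tokens do not always align with character boundaries, so a multi-byte UTF-8 character can be split across two chunks. The sketch below illustrates that general problem with the standard `TextDecoder` in streaming mode. It is an assumption-laden illustration, not a description of how the library decodes internally; `decodeChunks` and `byteChunks` are made-up names and the byte chunks are hand-written rather than real token bytes.

```typescript
// Hypothetical byte chunks: "é" (0xC3 0xA9) is split across the two chunks.
const byteChunks: Uint8Array[] = [
  new Uint8Array([0x63, 0x61, 0x66, 0xc3]), // "caf" + first byte of "é"
  new Uint8Array([0xa9, 0x21]), // second byte of "é" + "!"
]

function* decodeChunks(chunks: Iterable<Uint8Array>): Generator<string> {
  const decoder = new TextDecoder('utf-8')
  for (const chunk of chunks) {
    // stream: true buffers an incomplete multi-byte sequence until the next chunk.
    const text = decoder.decode(chunk, { stream: true })
    if (text.length > 0) yield text
  }
  // Flush anything still buffered at the end of the input.
  const rest = decoder.decode()
  if (rest.length > 0) yield rest
}

const streamed = Array.from(decodeChunks(byteChunks))
console.log(streamed) // [ 'caf', 'é!' ]
```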

`estimateCost` estimates the cost of processing a given number of tokens using the model's pricing data. This function calculates costs for different API usage types (main API, batch API) and cached tokens when available.

The function returns a `PriceData` object with the following structure:

- `main`: Main API pricing with `input`, `output`, `cached_input`, and `cached_output` costs

- `batch`: Batch API pricing with the same cost categories

All costs are calculated in USD based on the token count provided.

Example:

```typescript
import { estimateCost } from 'gpt-tokenizer/model/gpt-4o'

const tokenCount = 1000
const costEstimate = estimateCost(tokenCount)

console.log('Main API input cost:', costEstimate.main?.input)
console.log('Main API output cost:', costEstimate.main?.output)
console.log('Batch API input cost:', costEstimate.batch?.input)
```

Note: The model spec must be available either through the model-specific import or by passing it as the second parameter. Cost information may not be available for all models.
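The arithmetic behind such an estimate is simple when prices are quoted per million tokens. The following sketch shows that calculation only; `toyEstimate` and `ToyPrices` are hypothetical names, the prices are invented placeholders rather than real OpenAI pricing, and the library's internal price units may differ.

```typescript
// Hypothetical price table: USD per one million tokens (placeholder values).
interface ToyPrices {
  input: number
  output: number
}

// Scales each per-million price by the number of tokens.
function toyEstimate(tokenCount: number, pricesPerMillion: ToyPrices): ToyPrices {
  const millions = tokenCount / 1_000_000
  return {
    input: millions * pricesPerMillion.input,
    output: millions * pricesPerMillion.output,
  }
}

const placeholderPrices: ToyPrices = { input: 2, output: 8 }
const halfMillionCost = toyEstimate(500_000, placeholderPrices)
console.log(halfMillionCost) // { input: 1, output: 4 }
```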

There are a few special tokens that are used by the GPT models. Note that not all models support all of these tokens.

By default, all special tokens are disallowed.

The `encode`, `encodeGenerator`, `encodeChat`, `encodeChatGenerator`, `countTokens`, and `isWithinTokenLimit` functions accept an `EncodeOptions` parameter to customize special token handling.

gpt-tokenizer allows you to specify custom sets of allowed special tokens when encoding text. To do this, pass a `Set` containing the allowed special tokens as a parameter to the `encode` function:

```typescript
import {
  EndOfPrompt,
  EndOfText,
  FimMiddle,
  FimPrefix,
  FimSuffix,
  ImStart,
  ImEnd,
  ImSep,
  encode,
} from 'gpt-tokenizer'

const inputText = `Some Text ${EndOfPrompt}`
const allowedSpecialTokens = new Set([EndOfPrompt])
// The option key is `allowedSpecial`, as in the other examples
const encoded = encode(inputText, { allowedSpecial: allowedSpecialTokens })
const expectedEncoded = [8538, 2991, 220, 100276]

// `expect` comes from a test framework such as Jest or Vitest;
// arrays need a deep comparison, hence toEqual rather than toBe
expect(encoded).toEqual(expectedEncoded)
```

You may also use a special shorthand for either disallowing or allowing all special tokens, by passing in the string `'all'`, e.g. `{ allowedSpecial: 'all' }`.

Similarly, you can specify custom sets of disallowed special tokens when encoding text. Pass a `Set` containing the disallowed special tokens as a parameter to the `encode` function:

```typescript
import { encode, EndOfText } from 'gpt-tokenizer'

const inputText = `Some Text${EndOfText}`
const disallowedSpecial = new Set([EndOfText])

// throws an error:
const encoded = encode(inputText, { disallowedSpecial })
```

In this example, an Error is thrown, because the input text contains a disallowed special token.

If both `allowedSpecial` and `disallowedSpecial` are provided, `disallowedSpecial` takes precedence.

The tokenizer uses an LRU (Least Recently Used) cache to improve encoding performance for similar strings. By default, it stores up to 100,000 merged token pairs. You can adjust this value to optimize for your specific use case:

- Increasing the cache size will make encoding similar strings faster but consume more memory

- Setting it to 0 will disable caching completely

- For applications processing many unique strings, a smaller cache might be more efficient

You can modify the cache size using the `setMergeCacheSize` function:

```typescript
import { setMergeCacheSize } from 'gpt-tokenizer'

// Set to 5000 entries
setMergeCacheSize(5000)

// Disable caching completely
setMergeCacheSize(0)
```

The cache is persisted between encoding calls. To explicitly clear the cache (e.g. to free up memory), use the `clearMergeCache` function:

```typescript
import { clearMergeCache } from 'gpt-tokenizer'

clearMergeCache()
```
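For readers unfamiliar with LRU eviction, the sketch below shows the general technique using a `Map`, whose iteration order is insertion order. It is a generic illustration under that assumption, not the library's actual cache; `ToyLruCache` is a hypothetical name, and the string keys and numeric values are placeholders.

```typescript
// Minimal LRU cache: the first key in the Map is always the least recently used.
class ToyLruCache<K, V> {
  private readonly entries = new Map<K, V>()
  private readonly maxSize: number

  constructor(maxSize: number) {
    this.maxSize = maxSize
  }

  get(key: K): V | undefined {
    const value = this.entries.get(key)
    if (value === undefined) return undefined
    // Re-insert to mark the key as most recently used.
    this.entries.delete(key)
    this.entries.set(key, value)
    return value
  }

  set(key: K, value: V): void {
    if (this.maxSize <= 0) return // a size of 0 disables caching
    this.entries.delete(key)
    this.entries.set(key, value)
    if (this.entries.size > this.maxSize) {
      // Evict the least recently used entry.
      const oldest = this.entries.keys().next()
      if (!oldest.done) this.entries.delete(oldest.value)
    }
  }

  clear(): void {
    this.entries.clear()
  }

  get size(): number {
    return this.entries.size
  }
}

const mergeCache = new ToyLruCache<string, number>(2)
mergeCache.set('th', 1)
mergeCache.set('he', 2)
mergeCache.get('th') // 'th' becomes the most recently used entry
mergeCache.set('in', 3) // evicts 'he', the least recently used entry
```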

gpt-tokenizer includes a set of test cases in the `TestPlans.txt` file to ensure its compatibility with OpenAI's Python `tiktoken` library. These test cases validate the functionality and behavior of `gpt-tokenizer`, providing a reliable reference for developers.

Running the unit tests and verifying the test cases helps maintain consistency between the library and the original Python implementation.

gpt-tokenizer provides comprehensive data about all OpenAI models through the `models` export from `gpt-tokenizer/models`. This includes detailed information about context windows, costs, training data cutoffs, and deprecation status.

The data is regularly maintained to match OpenAI's official documentation. Contributions to keep this data up-to-date are welcome - if you notice any discrepancies or have updates, please feel free to open a PR.

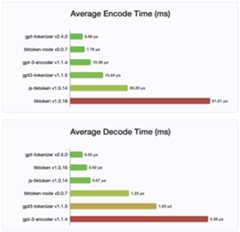

Since version 2.4.0, `gpt-tokenizer` is the fastest tokenizer implementation available on NPM. It's even faster than the available WASM/node binding implementations. It has the fastest encoding and decoding times and a tiny memory footprint. It also initializes faster than all other implementations.

The encodings themselves are also the smallest in size, due to the compact format they are stored in.

License: MIT

Contributions are welcome! Please open a pull request or an issue to discuss your bug reports, or use the discussions feature for ideas or any other inquiries.

Thanks to @dmitry-brazhenko's SharpToken, whose code served as a reference for the port.

Hope you find `gpt-tokenizer` useful in your projects!
