Deep Learning sample programs using PyTorch in C++

koba-jon/pytorch_cpp

Requirements: LibTorch, OpenCV, OpenMP, Boost, Gnuplot, libpng/png++/zlib

    $ git clone https://github.com/koba-jon/pytorch_cpp.git
    $ cd pytorch_cpp
    $ sudo apt install g++-8

(1) Change Directory (Model: AE1d)

    $ cd Dimensionality_Reduction/AE1d

(2) Build

    $ mkdir build
    $ cd build
    $ cmake ..
    $ make -j4
    $ cd ..

(3) Dataset Setting (Dataset: Normal Distribution Dataset)

    $ cd datasets
    $ git clone https://huggingface.co/datasets/koba-jon/normal_distribution_dataset
    $ ln -s normal_distribution_dataset/NormalDistribution ./NormalDistribution
    $ cd ..

(4) Training

    $ sh scripts/train.sh

(5) Test

    $ sh scripts/test.sh

12/06/2025: Release of v2.9.1.4

12/01/2025: Release of v2.9.1.3

12/01/2025: Implementation of PatchCore

11/29/2025: Release of v2.9.1.2

11/29/2025: Implementation of PaDiM

11/27/2025: Implementation of WideResNet

11/27/2025: Release of v2.9.1.1

11/24/2025: Implementation of ESRGAN

11/21/2025: Implementation of SRGAN

11/19/2025: Implementation of DiT

11/14/2025: Release of v2.9.1

11/01/2025: Implementation of NeRF and 3DGS

10/16/2025: Release of v2.9.0

10/16/2025: Implementation of PixelSNAIL-Gray and PixelSNAIL-RGB

10/14/2025: Implementation of YOLOv8

10/13/2025: Implementation of YOLOv5

10/09/2025: Implementation of RF2d

10/08/2025: Implementation of FM2d

10/08/2025: Implementation of LDM

10/04/2025: Implementation of Glow

10/01/2025: Implementation of Real-NVP2d

09/28/2025: Implementation of Planar-Flow2d and Radial-Flow2d

09/25/2025: Release of v2.8.0.2

09/22/2025: Implementation of PixelCNN-Gray and PixelCNN-RGB

09/18/2025: Implementation of VQ-VAE-2

09/16/2025: Implementation of VQ-VAE

09/14/2025: Implementation of PNDM2d

09/14/2025: Release of v2.8.0.1

09/12/2025: Implementation of SimCLR

09/11/2025: Implementation of MAE

09/10/2025: Implementation of EMA for DDPM2d and DDIM2d

09/08/2025: Implementation of EfficientNet

09/07/2025: Implementation of CycleGAN

09/05/2025: Implementation of ViT

09/04/2025: Release of v2.8.0

09/04/2025: Implementation of DDIM2d

09/04/2025: Implementation of DDPM2d

06/27/2023: Release of v2.0.1

06/27/2023: Create the heatmap for Anomaly Detection

05/07/2023: Release of v2.0.0

03/01/2023: Release of v1.13.1

09/12/2022: Release of v1.12.1

08/04/2022: Release of v1.12.0

03/18/2022: Release of v1.11.0

02/10/2022: Release of v1.10.2

02/09/2022: Implementation of YOLOv3

01/09/2022: Release of v1.10.1

01/09/2022: Fixed execution error in test on CPU package

11/12/2021: Release of v1.10.0

09/27/2021: Release of v1.9.1

09/27/2021: Support for using different devices between training and test

09/06/2021: Improved accuracy of time measurement using GPU

06/19/2021: Release of v1.9.0

03/29/2021: Release of v1.8.1

03/18/2021: Implementation of Discriminator from DCGAN

03/17/2021: Implementation of AE1d

03/16/2021: Release of v1.8.0

03/15/2021: Implementation of YOLOv2

02/11/2021: Implementation of YOLOv1

01/21/2021: Release of v1.7.1

10/30/2020: Release of v1.7.0

10/04/2020: Implementation of Skip-GANomaly2d

10/03/2020: Implementation of GANomaly2d

09/29/2020: Implementation of EGBAD2d

09/28/2020: Implementation of AnoGAN2d

09/27/2020: Implementation of SegNet

09/26/2020: Implementation of DAE2d

09/13/2020: Implementation of ResNet

09/07/2020: Implementation of VGGNet

09/05/2020: Implementation of AlexNet

09/02/2020: Implementation of WAE2d GAN and WAE2d MMD

08/30/2020: Release of v1.6.0

06/26/2020: Implementation of DAGMM2d

06/26/2020: Release of v1.5.1

06/26/2020: Implementation of VAE2d and DCGAN

06/01/2020: Implementation of Pix2Pix

05/29/2020: Implementation of U-Net Classification

05/26/2020: Implementation of U-Net Regression

04/24/2020: Release of v1.5.0

03/23/2020: Implementation of AE2d

| Category | Model | Paper | Conference/Journal | Code |
|---|---|---|---|---|
| CNNs | AlexNet | A. Krizhevsky et al. | NeurIPS 2012 | AlexNet |
| | VGGNet | K. Simonyan et al. | ICLR 2015 | VGGNet |
| | ResNet | K. He et al. | CVPR 2016 | ResNet |
| | WideResNet | S. Zagoruyko et al. | arXiv 2016 | WideResNet |
| | Discriminator | A. Radford et al. | ICLR 2016 | Discriminator |
| | EfficientNet | M. Tan et al. | ICML 2019 | EfficientNet |
| Transformers | Vision Transformer | A. Dosovitskiy et al. | ICLR 2021 | ViT |

| Model | Paper | Conference/Journal | Code |
|---|---|---|---|
| Autoencoder | G. E. Hinton et al. | Science 2006 | AE1d |
| | | | AE2d |
| Denoising Autoencoder | P. Vincent et al. | ICML 2008 | DAE2d |

| Category | Model | Paper | Conference/Journal | Code |
|---|---|---|---|---|
| VAEs | Variational Autoencoder | D. P. Kingma et al. | ICLR 2014 | VAE2d |
| | Wasserstein Autoencoder | I. Tolstikhin et al. | ICLR 2018 | WAE2d GAN |
| | | | | WAE2d MMD |
| | VQ-VAE | A. v. d. Oord et al. | NeurIPS 2017 | VQ-VAE |
| | VQ-VAE-2 | A. Razavi et al. | NeurIPS 2019 | VQ-VAE-2 |
| GANs | DCGAN | A. Radford et al. | ICLR 2016 | DCGAN |
| Flows | Planar Flow | D. Rezende et al. | ICML 2015 | Planar-Flow2d |
| | Radial Flow | D. Rezende et al. | ICML 2015 | Radial-Flow2d |
| | Real NVP | L. Dinh et al. | ICLR 2017 | Real-NVP2d |
| | Glow | D. P. Kingma et al. | NeurIPS 2018 | Glow |
| Diffusion Models | DDPM | J. Ho et al. | NeurIPS 2020 | DDPM2d |
| | DDIM | J. Song et al. | ICLR 2021 | DDIM2d |
| | PNDM | L. Liu et al. | ICLR 2022 | PNDM2d |
| | LDM | R. Rombach et al. | CVPR 2022 | LDM |
| | Diffusion Transformer | W. Peebles et al. | ICCV 2023 | DiT |
| Flow Matching | Flow Matching | Y. Lipman et al. | ICLR 2023 | FM2d |
| | Rectified Flow | X. Liu et al. | ICLR 2023 | RF2d |
| Autoregressive Models | PixelCNN | A. v. d. Oord et al. | ICML 2016 | PixelCNN-Gray |
| | | | | PixelCNN-RGB |
| | PixelSNAIL | X. Chen et al. | ICML 2018 | PixelSNAIL-Gray |
| | | | | PixelSNAIL-RGB |

| Model | Paper | Conference/Journal | Code |
|---|---|---|---|
| U-Net | O. Ronneberger et al. | MICCAI 2015 | U-Net Regression |
| Pix2Pix | P. Isola et al. | CVPR 2017 | Pix2Pix |
| CycleGAN | J.-Y. Zhu et al. | ICCV 2017 | CycleGAN |

| Model | Paper | Conference/Journal | Code |
|---|---|---|---|
| SRGAN | C. Ledig et al. | CVPR 2017 | SRGAN |
| ESRGAN | X. Wang et al. | ECCV 2018 | ESRGAN |

| Model | Paper | Conference/Journal | Code |
|---|---|---|---|
| SegNet | V. Badrinarayanan et al. | CVPR 2015 | SegNet |
| U-Net | O. Ronneberger et al. | MICCAI 2015 | U-Net Classification |

| Model | Paper | Conference/Journal | Code |
|---|---|---|---|
| YOLOv1 | J. Redmon et al. | CVPR 2016 | YOLOv1 |
| YOLOv2 | J. Redmon et al. | CVPR 2017 | YOLOv2 |
| YOLOv3 | J. Redmon et al. | arXiv 2018 | YOLOv3 |
| YOLOv5 | Ultralytics | - | YOLOv5 |
| YOLOv8 | Ultralytics | - | YOLOv8 |

| Model | Paper | Conference/Journal | Code |
|---|---|---|---|
| SimCLR | T. Chen et al. | ICML 2020 | SimCLR |
| Masked Autoencoder | K. He et al. | CVPR 2022 | MAE |

| Model | Paper | Conference/Journal | Code |
|---|---|---|---|
| Neural Radiance Field | B. Mildenhall et al. | ECCV 2020 | NeRF |
| 3D Gaussian Splatting | B. Kerbl et al. | SIGGRAPH 2023 | 3DGS |

| Model | Paper | Conference/Journal | Code |
|---|---|---|---|
| AnoGAN | T. Schlegl et al. | IPMI 2017 | AnoGAN2d |
| DAGMM | B. Zong et al. | ICLR 2018 | DAGMM2d |
| EGBAD | H. Zenati et al. | ICLR Workshop 2018 | EGBAD2d |
| GANomaly | S. Akçay et al. | ACCV 2018 | GANomaly2d |
| Skip-GANomaly | S. Akçay et al. | IJCNN 2019 | Skip-GANomaly2d |
| PaDiM | T. Defard et al. | ICPR Workshops 2020 | PaDiM |
| PatchCore | K. Roth et al. | CVPR 2022 | PatchCore |

LibTorch

Please select the following options on the official PyTorch site to get the LibTorch download link.

PyTorch official : https://pytorch.org/

PyTorch Build : Stable (2.9.1)

Your OS : Linux

Package : LibTorch

Language : C++ / Java

Run this Command : Download here (cxx11 ABI)

CUDA 12.6 : https://download.pytorch.org/libtorch/cu126/libtorch-shared-with-deps-2.9.1%2Bcu126.zip

CUDA 12.8 : https://download.pytorch.org/libtorch/cu128/libtorch-shared-with-deps-2.9.1%2Bcu128.zip

CUDA 13.0 : https://download.pytorch.org/libtorch/cu130/libtorch-shared-with-deps-2.9.1%2Bcu130.zip

CPU : https://download.pytorch.org/libtorch/cpu/libtorch-shared-with-deps-2.9.1%2Bcpu.zip

OpenCV

version : 3.0.0 or later

This is used for pre-processing and post-processing.

Please refer to other sites for detailed installation instructions.

OpenMP

This is used to load data in parallel.

(It may already be installed on a standard Linux OS.)
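As a rough illustration of loading data in parallel with OpenMP, the sketch below fills a mini-batch with one loop iteration per sample. The names `load_sample` and `load_batch` are hypothetical and are not taken from this repository; `load_sample` only stands in for reading and decoding a file.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for reading and decoding one sample from disk.
std::string load_sample(const std::string &path) {
    return "data:" + path;
}

// Loads all samples of one mini-batch.
// The iterations are independent and each one writes only to its own slot,
// so OpenMP can distribute them across threads without extra locking.
std::vector<std::string> load_batch(const std::vector<std::string> &paths) {
    std::vector<std::string> batch(paths.size());
    const std::ptrdiff_t n = static_cast<std::ptrdiff_t>(paths.size());
    #pragma omp parallel for
    for (std::ptrdiff_t i = 0; i < n; i++) {
        batch[i] = load_sample(paths[i]);
    }
    return batch;
}
```

With g++, the pragma takes effect only when the code is compiled with `-fopenmp`; without that flag the loop simply runs sequentially and produces the same result.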

Boost

This is used for command line arguments, etc.

    $ sudo apt install libboost-dev libboost-all-dev

Gnuplot

This is used to display the loss graph.

    $ sudo apt install gnuplot

libpng/png++/zlib

These are used to load and save index-color images in semantic segmentation.

    $ sudo apt install libpng-dev libpng++-dev zlib1g-dev

    $ git clone https://github.com/koba-jon/pytorch_cpp.git
    $ cd pytorch_cpp
    $ vi utils/CMakeLists.txt

Please change the 4th line of "CMakeLists.txt" according to the path of the directory "libtorch".

The following is an example where the directory "libtorch" is located directly under the directory "HOME".

    3: # LibTorch
    4: set(LIBTORCH_DIR $ENV{HOME}/libtorch)
    5: list(APPEND CMAKE_PREFIX_PATH ${LIBTORCH_DIR})

If you don't have g++ version 8 or above, install it.

    $ sudo apt install g++-8

Please move to the directory of each model and refer to "README.md".

Please create a link for the original dataset.

The following is an example of "AE2d" using the "celebA" dataset.

    $ cd Dimensionality_Reduction/AE2d/datasets
    $ ln -s <dataset_path> ./celebA_org

Substitute the path of the dataset for "<dataset_path>".

Please make sure you have training or test data directly under "<dataset_path>".

    $ vi ../../../scripts/hold_out.sh

Please edit the file for the original dataset.

    #!/bin/bash
    SCRIPT_DIR=$(cd $(dirname $0); pwd)
    python3 ${SCRIPT_DIR}/hold_out.py \
        --input_dir "celebA_org" \
        --output_dir "celebA" \
        --train_rate 9 \
        --valid_rate 1

By running this file, you can split the dataset into training and validation data.
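To make the meaning of the two rates concrete, here is a minimal C++ sketch of a hold-out split. It is not the implementation of "hold_out.py", whose internals are not shown here. It assumes that the rates are relative weights (9 and 1 give a 90%/10% split), that files are sorted lexicographically, and that the leading part goes to training. The function name `hold_out` is hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Splits `files` into (train, valid) according to relative rates.
// Assumption: plain lexicographic sort, leading part becomes training data.
// If both rates are zero, every file ends up in the validation part.
std::pair<std::vector<std::string>, std::vector<std::string>>
hold_out(std::vector<std::string> files, std::size_t train_rate, std::size_t valid_rate) {
    std::sort(files.begin(), files.end());
    const std::size_t total_rate = train_rate + valid_rate;
    const std::size_t n_train =
        (total_rate == 0) ? 0 : files.size() * train_rate / total_rate;
    const auto middle = files.begin() + static_cast<std::ptrdiff_t>(n_train);
    std::vector<std::string> train(files.begin(), middle);
    std::vector<std::string> valid(middle, files.end());
    return {train, valid};
}
```

For example, 10 files with rates 9 and 1 would yield 9 training files and 1 validation file under these assumptions.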

    $ sudo apt install python3 python3-pip
    $ pip3 install natsort
    $ sh ../../../scripts/hold_out.sh
    $ cd ../../..

This repository provides transforms, datasets, and a dataloader for data input.

They correspond to the following source files in the directory, and new functions can be added to them.

- transforms.cpp

- transforms.hpp

- datasets.cpp

- datasets.hpp

- dataloader.cpp

- dataloader.hpp
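The general idea of a dataloader can be sketched as follows. This is a hypothetical, simplified class and not the interface of "dataloader.hpp": it only hands out mini-batches of dataset indices, optionally shuffled, and signals the end of each epoch. A real loader would use the indices to fetch samples from a dataset and apply transforms.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <random>
#include <vector>

// Hypothetical minimal loader that yields mini-batches of dataset indices.
class IndexLoader {
public:
    IndexLoader(std::size_t dataset_size, std::size_t batch_size, bool shuffle, unsigned seed = 0)
        : indices_(dataset_size),
          batch_size_(batch_size == 0 ? 1 : batch_size),  // guard against empty batches
          shuffle_(shuffle), engine_(seed), pos_(0) {
        std::iota(indices_.begin(), indices_.end(), std::size_t{0});
        if (shuffle_) std::shuffle(indices_.begin(), indices_.end(), engine_);
    }

    // Fills `batch` with the next indices and returns true.
    // At the end of an epoch it returns false, rewinds, and reshuffles if enabled.
    bool next(std::vector<std::size_t> &batch) {
        if (pos_ >= indices_.size()) {
            pos_ = 0;
            if (shuffle_) std::shuffle(indices_.begin(), indices_.end(), engine_);
            return false;
        }
        const std::size_t end = std::min(pos_ + batch_size_, indices_.size());
        batch.assign(indices_.begin() + static_cast<std::ptrdiff_t>(pos_),
                     indices_.begin() + static_cast<std::ptrdiff_t>(end));
        pos_ = end;
        return true;
    }

private:
    std::vector<std::size_t> indices_;
    std::size_t batch_size_;
    bool shuffle_;
    std::mt19937 engine_;
    std::size_t pos_;
};
```

A training loop could then call `while (loader.next(batch)) { ... }` once per epoch. The last batch of an epoch may be smaller than `batch_size`.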

This repository includes a feature for checking training progress.

It shows the epoch number, loss, elapsed time, and speed during training.

It corresponds to the following source files in the directory.

- progress.cpp

- progress.hpp

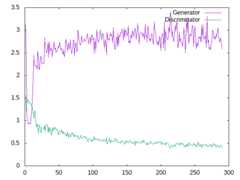
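A progress display of this kind can be sketched as a function that formats one status line. The function name `progress_line`, its parameters, and the output layout are assumptions for illustration and do not reproduce "progress.cpp".

```cpp
#include <cstddef>
#include <iomanip>
#include <sstream>
#include <string>

// Builds one progress line from hypothetical training statistics.
std::string progress_line(std::size_t epoch, std::size_t total_epoch,
                          std::size_t iter, std::size_t total_iter,
                          double loss, double elapsed_sec) {
    // Speed in iterations per second; avoid dividing by zero at the very start.
    const double speed =
        (elapsed_sec > 0.0) ? static_cast<double>(iter) / elapsed_sec : 0.0;
    std::ostringstream ss;
    ss << "epoch:" << epoch << "/" << total_epoch
       << " iter:" << iter << "/" << total_iter
       << std::fixed << std::setprecision(4) << " loss:" << loss
       << std::setprecision(1) << " elapsed:" << elapsed_sec << "s"
       << " speed:" << speed << "it/s";
    return ss.str();
}
```

Printing the returned string followed by a carriage return instead of a newline would keep the display on a single terminal line while training runs.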

This repository includes a monitoring system for training.

It lets you view output images and the loss graph.

Output images are written to "samples" in the directory "checkpoints" created during training.

The loss graph is written to "graph" in the directory "checkpoints" created during training.

It corresponds to the following source files in the directory.

- visualizer.cpp

- visualizer.hpp
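One way to draw a loss graph with Gnuplot is to write the losses to a data file and generate a short plotting script. The sketch below only builds the two text contents. The function names and file paths are hypothetical, and how "visualizer.cpp" actually drives Gnuplot is not shown here.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Data file content: one "epoch loss" pair per line, epochs counted from 1.
std::string loss_data(const std::vector<double> &losses) {
    std::ostringstream ss;
    for (std::size_t i = 0; i < losses.size(); i++) {
        ss << (i + 1) << " " << losses[i] << "\n";
    }
    return ss.str();
}

// Gnuplot script that renders the data file as a PNG line plot.
std::string gnuplot_script(const std::string &data_path, const std::string &png_path) {
    std::ostringstream ss;
    ss << "set terminal png\n"
       << "set output '" << png_path << "'\n"
       << "set xlabel 'epoch'\n"
       << "set ylabel 'loss'\n"
       << "plot '" << data_path << "' using 1:2 with lines title 'loss'\n";
    return ss.str();
}
```

After saving both strings to files, running `gnuplot` on the script file would produce the image.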

Feel free to use all source code in this repository.

(Click here for details.)

However, be careful when you use the external libraries in ways such as redistribution.

At a minimum, the license notices at the following URLs are required.

In addition, third party copyrights belong to their respective owners.

PyTorch

Official : https://pytorch.org/

License : https://github.com/pytorch/pytorch/blob/master/LICENSE

OpenCV

Official : https://opencv.org/

License : https://opencv.org/license/

OpenMP

Official : https://www.openmp.org/

License : https://gcc.gnu.org/onlinedocs/

Boost

Official : https://www.boost.org/

License : https://www.boost.org/users/license.html

Gnuplot

Official : http://www.gnuplot.info/

License : https://sourceforge.net/p/gnuplot/gnuplot-main/ci/master/tree/Copyright

libpng/png++/zlib

Official (libpng) : http://www.libpng.org/pub/png/libpng.html

License (libpng) : http://www.libpng.org/pub/png/src/libpng-LICENSE.txt

Official (png++) : https://www.nongnu.org/pngpp/

License (png++) : https://www.nongnu.org/pngpp/license.html

Official (zlib) : https://zlib.net/

License (zlib) : https://zlib.net/zlib_license.html

PyTorch is a well-known deep learning framework.

Python source code for it is abundant on the Web, so it is easy to write deep learning code in Python.

However, there is very little source code written in C++, a compiled language.

I hope this repository will help many programmers by providing PyTorch sample programs written in C++.

If you have any problems with the source code of this repository, please feel free to open an issue.

Let's have a good development and research life!
