Background Removal based on U-Net


eti-p-doray/unet-gan-matting


This is the final project for the INF8225 machine learning course at Polytechnique Montréal.

Contributors:

- Amine El Hattami

- Étienne Pierre-Doray

- Youri Barsalou

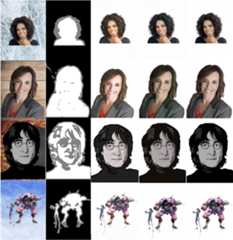

In this project we tackle the problem of background removal through image matting, which consists of predicting the foreground of an image or a video frame. Unlike basic background/foreground segmentation, matting takes the transparency of an object into account: objects seen in images are not always fully opaque. Think, for instance, of a tinted glass box. Ideal image segmentation would give a mask telling which pixels belong to the box and which belong to the rest of the image. Ideal image matting, however, would return a transparency mask for the box, such that applying this mask to the original image of the box and then placing the result onto a completely different background would let us see the new background through the box. The following are some of the results of our model.

From left to right: the input image, the associated input trimap, the resulting extracted foreground without the GAN, the resulting extracted foreground, and the ground truth.

The project was written using Python 3.6 with the following packages:

- Pillow

- tensorflow or tensorflow-gpu

- numpy

- google_images_download

- opencv-python

We also provide requirements files to install all needed packages.

For an environment without a GPU:

pip3 install -r requirements.txt

For an environment with a GPU:

pip3 install -r requirements-gpu.txt

The download script uses chromedriver, which is available by installing the Chrome web browser. It can also be installed standalone. Check out the following link for more info: chromedriver
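Before running the download script, it can help to confirm that a chromedriver executable is reachable. The snippet below is a minimal sketch using only the Python standard library. It assumes chromedriver is expected on the `PATH`, which we have not verified for `scripts/download.py`; the helper name `find_chromedriver` is hypothetical.

```python
import shutil


def find_chromedriver(name="chromedriver"):
    """Return the full path of the named executable on PATH, or None if absent."""
    return shutil.which(name)


path = find_chromedriver()
if path is None:
    # Assumption: the download script needs chromedriver to be discoverable.
    print("chromedriver not found on PATH; install Chrome or chromedriver first")
else:
    print("using chromedriver at", path)
```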

This project uses a custom dataset generated by a script. The script crawls the web to retrieve foreground and background images with specific filters. Note that the output of the script was manually filtered. Refer to the Experiment section in the project article for more details about the dataset generation.

The dataset generation is done in two steps:

- Download the foreground and background images

python3 scripts/download.py

- Combine the foreground and background images:

python3 scripts/combine.py

This will create a new folder named data in the scripts directory, in which the dataset is stored.
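To make the idea of combining a foreground with a background concrete, here is a minimal sketch of standard alpha compositing, `I = alpha * F + (1 - alpha) * B`, which is the relation that matting tries to invert. It is not taken from `scripts/combine.py`, whose exact blending we have not checked; the function name `composite` and the toy arrays are illustrative assumptions.

```python
import numpy as np


def composite(foreground, background, alpha):
    """Blend foreground over background using a per-pixel alpha matte.

    foreground, background: float arrays of shape (H, W, 3) with values in [0, 1].
    alpha: float array of shape (H, W) in [0, 1]; 1 means fully opaque foreground.
    """
    alpha = alpha[..., np.newaxis]  # add a channel axis so alpha broadcasts over RGB
    return alpha * foreground + (1.0 - alpha) * background


# Toy 1x2 image: a half-transparent red pixel and a fully transparent red pixel,
# both placed over a blue background.
fg = np.array([[[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]])
bg = np.array([[[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]]])
a = np.array([[0.5, 0.0]])
out = composite(fg, bg, a)
```

With these inputs, the first pixel is an even mix of red and blue, and the second pixel shows only the background, which is the "seeing the new background through the box" effect described earlier.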

Once the dataset is generated, the model can be trained using the following:

python3 train.py scripts/data

The training script saves a checkpoint in the log directory every 100 batches. It also saves a checkpoint when an exception is thrown and the script terminates.
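The checkpointing behaviour described above can be sketched as a generic loop. This is an illustration of the pattern only, not the code in `train.py`: `train`, `train_step`, and `save_checkpoint` are hypothetical names, and treating Ctrl+C like any other exception is an assumption.

```python
def train(batches, train_step, save_checkpoint, every=100):
    """Run train_step on each batch, saving periodically and on failure.

    train_step and save_checkpoint are hypothetical callables supplied by the caller.
    Returns the number of batches processed.
    """
    step = 0
    try:
        for step, batch in enumerate(batches, start=1):
            train_step(batch)
            if step % every == 0:
                save_checkpoint(step)
    except (Exception, KeyboardInterrupt):
        # Save under the index of the batch that was in progress, then re-raise
        # so the script still terminates with the original error.
        save_checkpoint(step)
        raise
    return step


# Usage: simulate a failure on batch 250 and record which steps were saved.
saved = []


def flaky_step(batch):
    if batch == 250:
        raise RuntimeError("simulated failure")


try:
    train(range(1, 1001), flaky_step, saved.append)
except RuntimeError:
    pass
# saved is now [100, 200, 250]
```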

To try our model, we included a snapshot of our trained model (in the log directory). It can be used as follows:

python3 eval.py <input_img_path> <trimap_img_path> <output_img_path> --checkpoint -1
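The evaluation command expects a trimap image alongside the input. As a rough illustration of what a trimap encodes, the sketch below derives one from a known alpha matte. The grey levels (0 for background, 128 for unknown, 255 for foreground), the thresholds, and the name `trimap_from_alpha` are assumptions for illustration; the encoding that `eval.py` expects should be checked against the project's own data.

```python
import numpy as np


def trimap_from_alpha(alpha, low=0.05, high=0.95):
    """Map an alpha matte in [0, 1] to a three-level uint8 trimap.

    0 = certain background, 128 = unknown region, 255 = certain foreground.
    The thresholds and grey levels are illustrative assumptions.
    """
    trimap = np.full(alpha.shape, 128, dtype=np.uint8)  # start as "unknown"
    trimap[alpha <= low] = 0
    trimap[alpha >= high] = 255
    return trimap


alpha = np.array([[0.0, 0.3, 1.0]])
tm = trimap_from_alpha(alpha)
```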
