
Open-source implementation of AlphaEvolve


algorithmicsuperintelligence/openevolve


🧬 The most advanced open-source evolutionary coding agent

Turn your LLMs into autonomous code optimizers that discover breakthrough algorithms


From random search to state-of-the-art: Watch your code evolve in real-time

- LLMs don't just optimize; they discover entirely new algorithms, with no human guidance needed.
- 2-3x speedups on real hardware, state-of-the-art circle packing, and breakthrough optimizations.
- Full reproducibility, extensive evaluation pipelines, and scientific rigor built in.

OpenEvolve vs Manual Optimization:

| Aspect | Manual Optimization | OpenEvolve |
|---|---|---|
| Time to Solution | Days to weeks | Hours |
| Exploration Breadth | Limited by human creativity | Unlimited LLM creativity |
| Reproducibility | Hard to replicate | Fully deterministic |
| Multi-objective | Complex tradeoffs | Automatic Pareto optimization |
| Scaling | Doesn't scale | Parallel evolution across islands |

| Domain | Achievement | Example |

|---|---|---|

| GPU Optimization | Hardware-optimized kernel discovery | MLX Metal Kernels |

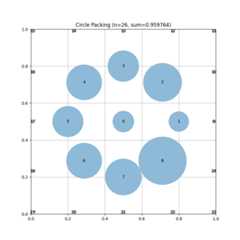

| Mathematical | State-of-the-art circle packing (n=26) | Circle Packing |

| Algorithm Design | Adaptive sorting algorithms | Rust Adaptive Sort |

| Scientific Computing | Automated filter design | Signal Processing |

| Multi-Language | Python, Rust, R, Metal shaders | All Examples |

Get from zero to evolving code in 30 seconds:

```bash
# Install OpenEvolve
pip install openevolve

# The example uses Google Gemini by default (free tier available)
# Get your API key from: https://aistudio.google.com/apikey
export OPENAI_API_KEY="your-gemini-api-key"  # Yes, use OPENAI_API_KEY env var

# Run your first evolution! (from the repository root, where examples/ lives)
python openevolve-run.py examples/function_minimization/initial_program.py \
  examples/function_minimization/evaluator.py \
  --config examples/function_minimization/config.yaml \
  --iterations 50
```

Note: The example config uses Gemini by default, but you can use any OpenAI-compatible provider by modifying the `config.yaml`. See the configs for full configuration options.

OpenEvolve can be used as a library without any external files:

```python
from openevolve import run_evolution, evolve_function

# Evolution with inline code (no files needed!)
# benchmark_fib is your own scoring function; it is not provided by OpenEvolve
result = run_evolution(
    initial_program='''
def fibonacci(n):
    if n <= 1:
        return n
    return fibonacci(n-1) + fibonacci(n-2)
''',
    evaluator=lambda path: {"score": benchmark_fib(path)},
    iterations=100
)

# Evolve Python functions directly
def bubble_sort(arr):
    for i in range(len(arr)):
        for j in range(len(arr) - 1):
            if arr[j] > arr[j + 1]:
                arr[j], arr[j + 1] = arr[j + 1], arr[j]
    return arr

result = evolve_function(
    bubble_sort,
    test_cases=[([3, 1, 2], [1, 2, 3]), ([5, 2, 8], [2, 5, 8])],
    iterations=50
)
print(f"Evolved sorting algorithm: {result.best_code}")
```

Prefer Docker? See the Installation & Setup section for Docker options.

Circle Packing: From Random to State-of-the-Art

Watch OpenEvolve discover optimal circle packing in real-time:

| Generation 1 | Generation 190 | Generation 460 (Final) |
|---|---|---|
| Random placement | Learning structure | State-of-the-art result |

Result: Matches published benchmarks for the n=26 circle packing problem.
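To make the objective concrete: circle packing is commonly posed (as in the AlphaEvolve work) as placing n non-overlapping circles inside the unit square so that the sum of their radii is as large as possible. The sketch below assumes that formulation; `packing_score` and its tolerance are hypothetical helpers for illustration, not the example's actual evaluator.

```python
import math


def packing_score(circles, tol=1e-9):
    """Return the sum of radii if the packing is valid, else 0.0.

    circles: list of (x, y, r) tuples. Assumed objective: maximize the sum of
    radii of non-overlapping circles lying inside the unit square [0, 1]^2.
    """
    for x, y, r in circles:
        # Each circle needs a positive radius and must stay inside the square
        if r <= 0 or x - r < -tol or x + r > 1 + tol or y - r < -tol or y + r > 1 + tol:
            return 0.0
    for i in range(len(circles)):
        for j in range(i + 1, len(circles)):
            xi, yi, ri = circles[i]
            xj, yj, rj = circles[j]
            # Centers must be at least ri + rj apart (touching is allowed)
            if math.hypot(xi - xj, yi - yj) < ri + rj - tol:
                return 0.0
    return sum(r for _, _, r in circles)


# Four equal circles on a 2x2 grid: each radius is 0.25, so the total is 1.0
grid = [(0.25, 0.25, 0.25), (0.75, 0.25, 0.25), (0.25, 0.75, 0.25), (0.75, 0.75, 0.25)]
print(packing_score(grid))  # 1.0
```

Scoring invalid packings as 0.0 gives the search a hard constraint; a real evaluator might instead return a graded penalty so that nearly valid programs still carry signal.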

GPU Kernel Evolution

Before (Baseline):

```metal
// Standard attention implementation
kernel void attention_baseline(/* ... */) {
    // Generic matrix multiplication
    float sum = 0.0;
    for (int i = 0; i < seq_len; i++) {
        sum += query[tid] * key[i];
    }
}
```

After Evolution (2.8x faster):

```metal
// OpenEvolve discovered optimization
kernel void attention_evolved(/* ... */) {
    // Hardware-aware tiling + unified memory optimization
    threadgroup float shared_mem[256];
    // ... evolved algorithm exploiting Apple Silicon architecture
}
```

Performance Impact: 2.8x speedup on Apple M1 Pro, maintaining numerical accuracy.
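A figure like "2.8x faster" is a ratio of baseline runtime to evolved runtime, measured only after checking that both versions agree. The sketch below computes such a ratio from median wall-clock timings using Python's standard `timeit`; `speedup`, `slow_sum`, and `fast_sum` are hypothetical stand-ins, and the MLX example's own kernel benchmark harness may measure differently.

```python
import statistics
import timeit


def speedup(baseline, candidate, repeat=5, number=10):
    """Return median(baseline time) / median(candidate time).

    Both arguments are zero-argument callables. Taking the median of several
    repeats reduces the influence of one-off scheduling noise.
    """
    base = statistics.median(timeit.repeat(baseline, repeat=repeat, number=number))
    cand = statistics.median(timeit.repeat(candidate, repeat=repeat, number=number))
    return base / cand


def slow_sum():
    # Baseline: explicit Python loop
    total = 0
    for i in range(20_000):
        total += i
    return total


def fast_sum():
    # "Evolved" version: closed-form arithmetic series
    n = 20_000
    return n * (n - 1) // 2


assert slow_sum() == fast_sum()  # check correctness before timing
print(f"{speedup(slow_sum, fast_sum):.1f}x")
```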

OpenEvolve implements a sophisticated evolutionary coding pipeline that goes far beyond simple optimization:

- Quality-Diversity Evolution: Maintains diverse populations across feature dimensions

- Island-Based Architecture: Multiple populations prevent premature convergence

- LLM Ensemble: Multiple models with intelligent fallback strategies

- Artifact Side-Channel: Error feedback improves subsequent generations
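The quality-diversity idea in the first bullet follows MAP-Elites: discretize each feature dimension into bins and keep only the best program seen in each cell, so good but different solutions survive side by side. The sketch below is a generic MAP-Elites archive with made-up program names and feature values, not OpenEvolve's internal database.

```python
from dataclasses import dataclass, field


@dataclass
class Elite:
    code: str
    score: float


@dataclass
class MapElitesArchive:
    """Minimal MAP-Elites archive: one elite per feature cell."""
    bins_per_dim: int = 10
    cells: dict = field(default_factory=dict)

    def cell_of(self, features):
        # Features are assumed pre-normalized to [0, 1]; 1.0 is clamped into the last bin
        return tuple(min(int(f * self.bins_per_dim), self.bins_per_dim - 1) for f in features)

    def add(self, code, score, features):
        """Insert the program if its cell is empty or it beats the current elite."""
        key = self.cell_of(features)
        current = self.cells.get(key)
        if current is None or score > current.score:
            self.cells[key] = Elite(code, score)
            return True
        return False


archive = MapElitesArchive(bins_per_dim=4)
archive.add("prog_a", 0.70, (0.10, 0.90))  # new cell (0, 3)
archive.add("prog_b", 0.95, (0.12, 0.88))  # same cell, better score: replaces prog_a
archive.add("prog_c", 0.40, (0.80, 0.20))  # different cell (3, 0): kept despite lower score
print({k: e.code for k, e in archive.cells.items()})
```

Keeping `prog_c` even though it scores lower than `prog_b` is the point of the design: it occupies a different region of feature space and can seed later improvements there.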

Scientific Reproducibility

- Comprehensive Seeding: Every component (LLM, database, evaluation) is seeded

- Default Seed=42: Immediate reproducible results out of the box

- Deterministic Evolution: Exact reproduction of runs across machines

- Component Isolation: Hash-based isolation prevents cross-contamination
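One common way to seed every component from a single base seed while keeping their random streams independent is to hash the base seed together with a component name. The sketch below illustrates that idea with `hashlib`; `component_rng` and the component names are hypothetical and are not taken from OpenEvolve's code.

```python
import hashlib
import random


def component_rng(base_seed: int, component: str) -> random.Random:
    """Derive an independent, reproducible RNG for one component.

    Hashing (base_seed, component) gives each component its own seed, so
    drawing extra numbers in one component never shifts another's stream.
    Python's built-in hash() is salted per process for strings, so a stable
    hash (SHA-256) is used instead.
    """
    digest = hashlib.sha256(f"{base_seed}:{component}".encode()).digest()
    return random.Random(int.from_bytes(digest[:8], "big"))


# The same base seed and name always yield the same stream...
a1 = component_rng(42, "database").random()
a2 = component_rng(42, "database").random()
# ...while a different component gets a different stream
b = component_rng(42, "evaluator").random()
print(a1 == a2, a1 == b)
```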

Advanced LLM Integration

- Universal API: Works with OpenAI, Google, local models, and proxies

- Intelligent Ensembles: Weighted combinations with sophisticated fallback

- Test-Time Compute: Enhanced reasoning through proxy systems (see OptiLLM setup)

- Plugin Ecosystem: Support for advanced reasoning plugins
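A weighted ensemble with fallback can be sketched as: sample a model with probability proportional to its weight and, if that call fails, try the remaining models from highest to lowest weight. The model names and weights are illustrative, and `generate_with_fallback`, `fake_call`, and the fallback order are assumptions, not OpenEvolve's actual ensemble logic.

```python
import random


def generate_with_fallback(models, call_model, rng):
    """Sample a model by weight, then fall back to the others on failure.

    models: list of (name, weight) pairs.
    call_model: function(name) -> str that may raise an exception.
    """
    names = [name for name, _ in models]
    weights = [weight for _, weight in models]
    first = rng.choices(names, weights=weights, k=1)[0]
    # Try the sampled model first, then the rest from highest to lowest weight
    rest = [name for name, _ in sorted(models, key=lambda m: -m[1]) if name != first]
    errors = {}
    for name in [first] + rest:
        try:
            return name, call_model(name)
        except Exception as exc:  # e.g. rate limit or timeout
            errors[name] = exc
    raise RuntimeError(f"all models failed: {errors}")


def fake_call(name):
    # Hypothetical stand-in for an LLM request: the "pro" model is rate limited
    if name == "gemini-2.5-pro":
        raise TimeoutError("rate limited")
    return f"code from {name}"


models = [("gemini-2.5-pro", 0.6), ("gemini-2.5-flash", 0.4)]
print(generate_with_fallback(models, fake_call, random.Random(42)))
```

Passing in a seeded `random.Random` keeps model selection reproducible, in line with the seeding bullets above.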

Evolution Algorithm Innovations

- Double Selection: Different programs for performance vs inspiration

- Adaptive Feature Dimensions: Custom quality-diversity metrics

- Migration Patterns: Ring topology with controlled gene flow

- Multi-Strategy Sampling: Elite, diverse, and exploratory selection
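Ring-topology migration can be sketched as follows: at each migration step (for example every `migration_interval` generations), each island sends copies of its top programs to the next island in the ring, so good genes spread gradually instead of flooding every population at once. `migrate_ring`, the island contents, and the "replace the worst" rule are illustrative assumptions, not OpenEvolve's exact procedure.

```python
def migrate_ring(islands, k=1):
    """Send each island's top-k programs to its ring neighbor.

    islands: list of lists of (score, program) tuples; assumes k < island size.
    Island i sends to island (i + 1) % n; migrants replace the receiver's
    worst programs so population sizes stay constant.
    """
    n = len(islands)
    # Select all migrants first so this round's arrivals are not re-sent
    migrants = [sorted(isl, reverse=True)[:k] for isl in islands]
    new_islands = []
    for i, island in enumerate(islands):
        incoming = migrants[(i - 1) % n]  # received from the previous island
        survivors = sorted(island, reverse=True)[: len(island) - len(incoming)]
        new_islands.append(survivors + incoming)
    return new_islands


islands = [
    [(0.9, "a1"), (0.2, "a2")],
    [(0.5, "b1"), (0.1, "b2")],
    [(0.7, "c1"), (0.3, "c2")],
]
for island in migrate_ring(islands):
    print(island)
```

Because each island only hears from one neighbor per round, a strong program needs several rounds to reach every island, which is the "controlled gene flow" the bullet refers to.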

| Use Case | Why OpenEvolve Excels |
|---|---|
| Performance Optimization | Discovers hardware-specific optimizations humans miss |
| Algorithm Discovery | Finds novel approaches to classic problems |
| Scientific Computing | Automates tedious manual tuning processes |
| Competitive Programming | Generates multiple solution strategies |
| Multi-Objective Problems | Pareto-optimal solutions across dimensions |

- Python: 3.10+

- LLM Access: Any OpenAI-compatible API

- Optional: Docker for containerized runs

📦 PyPI (Recommended)

```bash
pip install openevolve
```

🔧 Development Install

```bash
git clone https://github.com/codelion/openevolve.git
cd openevolve
pip install -e ".[dev]"
```

🐳 Docker

```bash
# Pull the image
docker pull ghcr.io/codelion/openevolve:latest

# Run an example
docker run --rm -v $(pwd):/app ghcr.io/codelion/openevolve:latest \
  examples/function_minimization/initial_program.py \
  examples/function_minimization/evaluator.py --iterations 100
```

Cost depends on your LLM provider and the number of iterations:

- o3: ~$0.15-0.60 per iteration (depending on code size)

- o3-mini: ~$0.03-0.12 per iteration (more cost-effective)

- Gemini-2.5-Pro: ~$0.08-0.30 per iteration

- Gemini-2.5-Flash: ~$0.01-0.05 per iteration (fastest and cheapest)

- Local models: Nearly free after setup

- OptiLLM: Use cheaper models with test-time compute for better results

Cost-saving tips:

- Start with fewer iterations (100-200)

- Use o3-mini, Gemini-2.5-Flash or local models for exploration

- Use cascade evaluation to filter bad programs early

- Configure smaller population sizes initially
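Cascade evaluation saves cost by running cheap checks first and spending expensive evaluation only on programs that pass. The sketch below assumes each stage is a function returning a score in [0, 1] paired with a pass threshold; `cascade_evaluate`, the stage functions, and the thresholds are hypothetical, not OpenEvolve's evaluator interface.

```python
def cascade_evaluate(program, stages):
    """Run (name, stage_fn, threshold) stages in order, stopping early.

    Returns (scores, passed). A program scoring below a stage's threshold is
    rejected without running the later, costlier stages.
    """
    scores = {}
    for name, stage_fn, threshold in stages:
        scores[name] = stage_fn(program)
        if scores[name] < threshold:
            return scores, False
    return scores, True


calls = []  # records which stages actually ran


def smoke_test(prog):
    # Cheap stage: a crude stand-in for "does it run on a tiny input?"
    calls.append("smoke")
    return 1.0 if "return" in prog else 0.0


def full_benchmark(prog):
    # Expensive stage: stands in for a long benchmark run
    calls.append("full")
    return 0.8


stages = [("smoke", smoke_test, 0.5), ("full", full_benchmark, 0.0)]
print(cascade_evaluate("def f(): pass", stages))  # ({'smoke': 0.0}, False)
print(calls)  # ['smoke']: the expensive stage never ran
```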

OpenEvolve works with any OpenAI-compatible API:

🔥 OpenAI (Direct)

```bash
export OPENAI_API_KEY="sk-..."  # Uses OpenAI endpoints by default
```

🤖 Google Gemini

```yaml
# config.yaml
llm:
  api_base: "https://generativelanguage.googleapis.com/v1beta/openai/"
  model: "gemini-2.5-pro"
```

```bash
export OPENAI_API_KEY="your-gemini-api-key"
```

🏠 Local Models (Ollama/vLLM)

```yaml
# config.yaml
llm:
  api_base: "http://localhost:11434/v1"  # Ollama
  model: "codellama:7b"
```

⚡ OptiLLM (Advanced)

For maximum flexibility with rate limiting, model routing, and test-time compute:

```bash
# Install OptiLLM
pip install optillm

# Start OptiLLM proxy
optillm --port 8000

# Point OpenEvolve to OptiLLM
export OPENAI_API_KEY="your-actual-key"
```

```yaml
llm:
  api_base: "http://localhost:8000/v1"
  model: "moa&readurls-o3"  # Test-time compute + web access
```

| Project | Domain | Achievement | Demo |
|---|---|---|---|
| Function Minimization | Optimization | Random → Simulated Annealing | View Results |
| MLX GPU Kernels | Hardware | Apple Silicon optimization | Benchmarks |
| Rust Adaptive Sort | Algorithms | Data-aware sorting | Code Evolution |
| Symbolic Regression | Science | Automated equation discovery | LLM-SRBench |
| Web Scraper + OptiLLM | AI Integration | Test-time compute optimization | Smart Scraping |

Watch OpenEvolve evolve from random search to sophisticated optimization:

```python
# Initial Program (Random Search)
def minimize_function(func, bounds, max_evals=1000):
    best_x, best_val = None, float('inf')
    for _ in range(max_evals):
        x = random_point_in_bounds(bounds)
        val = func(x)
        if val < best_val:
            best_x, best_val = x, val
    return best_x, best_val
```

Evolution Process

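```python
# Evolved Program (Simulated Annealing + Adaptive Cooling)
import random
from math import exp

# random_point_in_bounds, adaptive_initial_temperature, generate_neighbor and
# adaptive_cooling_rate are helpers defined elsewhere in the evolved program
def minimize_function(func, bounds, max_evals=1000):
    x = random_point_in_bounds(bounds)
    temp = adaptive_initial_temperature(func, bounds)
    for i in range(max_evals):
        neighbor = generate_neighbor(x, temp, bounds)
        delta = func(neighbor) - func(x)
        # Always accept improvements; accept worse moves with Boltzmann probability
        if delta < 0 or random.random() < exp(-delta / temp):
            x = neighbor
        temp *= adaptive_cooling_rate(i, max_evals)  # Dynamic cooling
    return x, func(x)
```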

Performance: 100x improvement in convergence speed!
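The evolved program above depends on helpers that are not shown. The self-contained sketch below fills them with simple stand-ins (a Gaussian neighbor step scaled by the temperature and clipped to the bounds, plus geometric cooling); these helper choices are assumptions for illustration, not the code OpenEvolve actually produced in the example.

```python
import math
import random


def simulated_annealing(func, bounds, max_evals=5000, temp=1.0, cooling=0.999, seed=0):
    """Minimize func over a box with simulated annealing.

    bounds: list of (low, high) pairs, one per dimension.
    Neighbors are Gaussian steps whose size shrinks with the temperature.
    """
    rng = random.Random(seed)

    def clip(v, lo, hi):
        return max(lo, min(hi, v))

    x = [rng.uniform(lo, hi) for lo, hi in bounds]
    fx = func(x)  # cache f(x) so the current point is evaluated only once
    best_x, best_f = x, fx
    for _ in range(max_evals):
        neighbor = [clip(xi + rng.gauss(0, temp), lo, hi) for xi, (lo, hi) in zip(x, bounds)]
        f_new = func(neighbor)
        delta = f_new - fx
        # Always accept improvements; accept worse moves with probability exp(-delta / temp)
        if delta < 0 or rng.random() < math.exp(-delta / temp):
            x, fx = neighbor, f_new
            if fx < best_f:
                best_x, best_f = x, fx
        temp = max(temp * cooling, 1e-8)  # geometric cooling, floored above zero
    return best_x, best_f


def bowl(p):
    # Shifted quadratic with its global minimum 0 at (1, -2)
    return (p[0] - 1) ** 2 + (p[1] + 2) ** 2


best_x, best_f = simulated_annealing(bowl, bounds=[(-5, 5), (-5, 5)])
print([round(v, 2) for v in best_x], round(best_f, 4))
```

Caching `fx` means each iteration costs one function evaluation; the evolved snippet above calls `func(x)` again on every iteration, which matters when `max_evals` is meant as an evaluation budget.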

Prompt Evolution

Evolve prompts instead of code for better LLM performance. See the LLM Prompt Optimization example for a complete case study on HotpotQA, which achieved a +23% accuracy improvement.

🏁 Competitive Programming

Automatic solution generation for programming contests:

```python
# Problem: Find maximum subarray sum
# OpenEvolve discovers multiple approaches:
# Evolution Path 1: Brute Force → Kadane's Algorithm
# Evolution Path 2: Divide & Conquer → Optimized Kadane's
# Evolution Path 3: Dynamic Programming → Space-Optimized DP
```
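For reference, Kadane's algorithm, the end point of the first evolution path, finds the maximum subarray sum in a single O(n) pass by tracking the best sum of a subarray that ends at the current position. This is a standard textbook version written for this README, not output from an OpenEvolve run.

```python
def max_subarray_sum(nums):
    """Kadane's algorithm: maximum sum over all non-empty contiguous subarrays."""
    if not nums:
        raise ValueError("nums must be non-empty")
    best = current = nums[0]
    for x in nums[1:]:
        # Either extend the subarray ending at the previous element or start fresh at x
        current = max(x, current + x)
        best = max(best, current)
    return best


print(max_subarray_sum([-2, 1, -3, 4, -1, 2, 1, -5, 4]))  # 6 (subarray [4, -1, 2, 1])
print(max_subarray_sum([-3, -1, -2]))  # -1 (all negative: best single element)
```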

OpenEvolve offers extensive configuration for advanced users:

```yaml
# Advanced Configuration Example
max_iterations: 1000
random_seed: 42  # Full reproducibility

llm:
  # Ensemble configuration
  models:
    - name: "gemini-2.5-pro"
      weight: 0.6
    - name: "gemini-2.5-flash"
      weight: 0.4
  temperature: 0.7

database:
  # MAP-Elites quality-diversity
  population_size: 500
  num_islands: 5  # Parallel evolution
  migration_interval: 20
  feature_dimensions: ["complexity", "diversity", "performance"]

evaluator:
  enable_artifacts: true  # Error feedback to LLM
  cascade_evaluation: true  # Multi-stage testing
  use_llm_feedback: true  # AI code quality assessment

prompt:
  # Sophisticated inspiration system
  num_top_programs: 3  # Best performers
  num_diverse_programs: 2  # Creative exploration
  include_artifacts: true  # Execution feedback
  # Custom templates
  template_dir: "custom_prompts/"
  use_template_stochasticity: true  # Randomized prompts
```

🎯 Feature Engineering

Control how programs are organized in the quality-diversity grid:

```yaml
database:
  feature_dimensions:
    - "complexity"    # Built-in: code length
    - "diversity"     # Built-in: structural diversity
    - "performance"   # Custom: from your evaluator
    - "memory_usage"  # Custom: from your evaluator
  feature_bins:
    complexity: 10    # 10 complexity levels
    performance: 20   # 20 performance buckets
    memory_usage: 15  # 15 memory usage categories
```

Important: Return raw values from your evaluator; OpenEvolve handles binning automatically.
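To show what "handles binning" means, the sketch below maps a raw feature value such as `memory_usage` into one of `feature_bins` buckets. It assumes linear binning between the smallest and largest values observed so far, with out-of-range values clamped; `bin_index` and that rule are illustrative assumptions, not OpenEvolve's exact implementation.

```python
def bin_index(value, seen_min, seen_max, num_bins):
    """Map a raw feature value to a bin in [0, num_bins - 1].

    Assumes linear scaling between the smallest and largest values observed
    so far; values outside that range are clamped to the edge bins.
    """
    if seen_max <= seen_min:
        return 0  # no spread yet: everything shares one bin
    frac = (value - seen_min) / (seen_max - seen_min)
    return max(0, min(num_bins - 1, int(frac * num_bins)))


# Raw memory_usage values (MB), as an evaluator might report them
observed = [120.0, 340.0, 95.0, 410.0, 250.0]
lo, hi = min(observed), max(observed)
print([bin_index(v, lo, hi, 15) for v in observed])  # [1, 11, 0, 14, 7]
```

Because the bin edges depend on the range seen so far, pre-binned values from the evaluator would fight this rescaling, which is why raw values are the safer thing to return.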

🎨 Custom Prompt Templates

Advanced prompt engineering with custom templates:

```yaml
prompt:
  template_dir: "custom_templates/"
  use_template_stochasticity: true
  template_variations:
    greeting:
      - "Let's enhance this code:"
      - "Time to optimize:"
      - "Improving the algorithm:"
    improvement_suggestion:
      - "Here's how we could improve this code:"
      - "I suggest the following improvements:"
      - "We can enhance this code by:"
```

How it works: Place `{greeting}` or `{improvement_suggestion}` placeholders in your templates, and OpenEvolve will randomly choose from the variations for each generation, adding diversity to prompts.
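The substitution step can be sketched with `random.choice` and `str.format`. The variation lists mirror the configuration above, but `render_template` and the `{code}` field are hypothetical, not OpenEvolve's template engine.

```python
import random

template_variations = {
    "greeting": [
        "Let's enhance this code:",
        "Time to optimize:",
        "Improving the algorithm:",
    ],
    "improvement_suggestion": [
        "Here's how we could improve this code:",
        "I suggest the following improvements:",
        "We can enhance this code by:",
    ],
}


def render_template(template, variations, rng, **fields):
    """Fill {placeholders}: variation keys get a random choice, the rest come from fields."""
    choices = {key: rng.choice(options) for key, options in variations.items()}
    return template.format(**choices, **fields)


template = "{greeting}\n{code}\n{improvement_suggestion}"
rng = random.Random(0)
for _ in range(2):
    print(render_template(template, template_variations, rng, code="def f(x): return x * x"))
    print("---")
```

Substituted values are not re-parsed by `str.format`, so code containing braces is safe to pass as a field; only the template text itself needs doubled `{{ }}` for literal braces.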

See the prompt examples for complete template customization.

System messages are the secret to successful evolution. They guide the LLM's understanding of your domain, constraints, and optimization goals. A well-crafted system message can be the difference between random mutations and targeted improvements.

The system message in your config.yaml is arguably the most important component for evolution success:

- Domain Expertise: Provides the LLM with specific knowledge about your problem space

- Constraint Awareness: Defines what can and cannot be changed during evolution

- Optimization Focus: Guides the LLM toward meaningful improvements

- Error Prevention: Helps avoid common pitfalls and compilation errors

Based on successful OpenEvolve implementations, system messages are best created through iteration:

🔄 Step-by-Step Process

Phase 1: Initial Draft

- Start with a basic system message describing your goal

- Run 20-50 evolution iterations to observe behavior

- Note where the system gets "stuck" or makes poor choices

Phase 2: Refinement

- Add specific guidance based on observed issues

- Include domain-specific terminology and concepts

- Define clear constraints and optimization targets

- Run another batch of iterations

Phase 3: Specialization

- Add detailed examples of good vs bad approaches

- Include specific library/framework guidance

- Add error avoidance patterns you've observed

- Fine-tune based on artifact feedback

Phase 4: Optimization

- Consider using OpenEvolve itself to optimize your prompt

- Measure improvements using combined score metrics

```yaml
prompt:
  system_message: |
    You are an expert programmer specializing in optimization algorithms.
    Your task is to improve a function minimization algorithm to find the
    global minimum reliably, escaping local minima that might trap simple
    algorithms.
```

```yaml
prompt:
  system_message: |
    You are an expert prompt engineer. Your task is to revise prompts for LLMs.

    Your improvements should:
    * Clarify vague instructions and eliminate ambiguity
    * Strengthen alignment between prompt and desired task outcome
    * Improve robustness against edge cases
    * Include formatting instructions and examples where helpful
    * Avoid unnecessary verbosity

    Return only the improved prompt text without explanations.
```

```yaml
prompt:
  system_message: |
    You are an expert Metal GPU programmer specializing in custom attention
    kernels for Apple Silicon.

    # TARGET: Optimize Metal Kernel for Grouped Query Attention (GQA)
    # HARDWARE: Apple M-series GPUs with unified memory architecture
    # GOAL: 5-15% performance improvement

    # OPTIMIZATION OPPORTUNITIES:
    **1. Memory Access Pattern Optimization:**
    - Coalesced access patterns for Apple Silicon
    - Vectorized loading using SIMD
    - Pre-compute frequently used indices

    **2. Algorithm Fusion:**
    - Combine max finding with score computation
    - Reduce number of passes through data

    # CONSTRAINTS - CRITICAL SAFETY RULES:
    **MUST NOT CHANGE:**
    ❌ Kernel function signature
    ❌ Template parameter names or types
    ❌ Overall algorithm correctness

    **ALLOWED TO OPTIMIZE:**
    ✅ Memory access patterns and indexing
    ✅ Computation order and efficiency
    ✅ Vectorization and SIMD utilization
    ✅ Apple Silicon specific optimizations
```

🎨 Prompt Engineering Patterns

Structure Your Message: Start with role definition → Define task/context → List optimization opportunities → Set constraints → Success criteria

Use Specific Examples:

```text
# Good: "Focus on reducing memory allocations. Example: Replace `new Vector()` with pre-allocated arrays."
# Avoid: "Make the code faster"
```

Include Domain Knowledge:

```text
# Good: "For GPU kernels: 1) Memory coalescing 2) Occupancy 3) Shared memory usage"
# Avoid: "Optimize the algorithm"
```

Set Clear Boundaries:

```yaml
system_message: |
  MUST NOT CHANGE:
  ❌ Function signatures
  ❌ Algorithm correctness
  ❌ External API

  ALLOWED:
  ✅ Internal implementation
  ✅ Data structures
  ✅ Performance optimizations
```

🔬 Advanced Techniques

Artifact-Driven Iteration: Enable artifacts in config → Include common error patterns in system message → Add guidance based on stderr/warning patterns

Multi-Phase Evolution: Start broad ("Explore different algorithmic approaches"), then focus ("Given successful simulated annealing, focus on parameter tuning")

Template Stochasticity: See the Configuration section for complete template variation examples.

You can use OpenEvolve to evolve your system messages themselves! This powerful technique lets you optimize prompts for better LLM performance automatically.

See the LLM Prompt Optimization example for a complete implementation, including the HotpotQA case study with a +23% accuracy improvement.

- Too Vague: "Make the code better" → Specify exactly what "better" means

- Too Restrictive: Over-constraining can prevent useful optimizations

- Missing Context: Include relevant domain knowledge and terminology

- No Examples: Concrete examples guide the LLM better than abstract descriptions

- Ignoring Artifacts: Not refining prompts based on error feedback

The artifacts side-channel provides rich feedback to accelerate evolution:

```python
# Evaluator can return execution context
from openevolve.evaluation_result import EvaluationResult

def evaluate(program_path):
    # ... run and measure the program at program_path ...
    return EvaluationResult(
        metrics={"performance": 0.85, "correctness": 1.0},
        artifacts={
            "stderr": "Warning: suboptimal memory access pattern",
            "profiling_data": {...},  # placeholder for your profiler output
            "llm_feedback": "Code is correct but could use better variable names",
            "build_warnings": ["unused variable x"],
        },
    )
```

Next generation prompt automatically includes:

```markdown
## Previous Execution Feedback
⚠️ Warning: suboptimal memory access pattern
💡 LLM Feedback: Code is correct but could use better variable names
🔧 Build Warnings: unused variable x
```

This creates a feedback loop where each generation learns from previous mistakes!
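How an artifacts dictionary turns into that feedback section can be sketched as a small formatter. The emoji labels follow the example output, but `format_artifacts` and its key-to-label mapping (including skipping `profiling_data`) are assumptions, not OpenEvolve's actual prompt builder.

```python
def format_artifacts(artifacts):
    """Render selected artifacts as a markdown feedback section for the next prompt."""
    lines = ["## Previous Execution Feedback"]
    if "stderr" in artifacts:
        lines.append(f"⚠️ {artifacts['stderr']}")
    if "llm_feedback" in artifacts:
        lines.append(f"💡 LLM Feedback: {artifacts['llm_feedback']}")
    if artifacts.get("build_warnings"):
        lines.append("🔧 Build Warnings: " + "; ".join(artifacts["build_warnings"]))
    return "\n".join(lines)


artifacts = {
    "stderr": "Warning: suboptimal memory access pattern",
    "llm_feedback": "Code is correct but could use better variable names",
    "build_warnings": ["unused variable x"],
}
print(format_artifacts(artifacts))
```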

Real-time evolution tracking with interactive web interface:

```bash
# Install visualization dependencies
pip install -r scripts/requirements.txt

# Launch interactive visualizer
python scripts/visualizer.py

# Or visualize specific checkpoint
python scripts/visualizer.py --path examples/function_minimization/openevolve_output/checkpoints/checkpoint_100/
```

Features:

- 🌳 Evolution tree with parent-child relationships

- 📈 Performance tracking across generations

- 🔍 Code diff viewer showing mutations

- 📊 MAP-Elites grid visualization

- 🎯 Multi-metric analysis with custom dimensions

- Multi-Modal Evolution: Images, audio, and text simultaneously

- Federated Learning: Distributed evolution across multiple machines

- AutoML Integration: Hyperparameter and architecture evolution

- Benchmark Suite: Standardized evaluation across domains

- Self-Modifying Prompts: Evolution modifies its own prompting strategy

- Cross-Language Evolution: Python → Rust → C++ optimization chains

- Neurosymbolic Reasoning: Combine neural and symbolic approaches

- Human-AI Collaboration: Interactive evolution with human feedback

Want to contribute? Check out our roadmap discussions!

💰 How much does it cost to run?

See the Cost Estimation section in Installation & Setup for detailed pricing information and cost-saving tips.

🆚 How does this compare to manual optimization?

| Aspect | Manual | OpenEvolve |
|---|---|---|
| Initial Learning | Weeks to understand domain | Minutes to start |
| Solution Quality | Depends on expertise | Consistently explores novel approaches |
| Time Investment | Days-weeks per optimization | Hours for complete evolution |
| Reproducibility | Hard to replicate exact process | Perfect reproduction with seeds |
| Scaling | Doesn't scale beyond human capacity | Parallel evolution across islands |

OpenEvolve shines when you need to explore large solution spaces or optimize for multiple objectives simultaneously.

🔧 Can I use my own LLM?

Yes! OpenEvolve supports any OpenAI-compatible API:

- Commercial: OpenAI, Google, Cohere

- Local: Ollama, vLLM, LM Studio, text-generation-webui

- Advanced: OptiLLM for routing and test-time compute

Just set `api_base` in your config to point to your endpoint.

🚨 What if evolution gets stuck?

Built-in mechanisms prevent stagnation:

- Island migration: Fresh genes from other populations

- Temperature control: Exploration vs exploitation balance

- Diversity maintenance: MAP-Elites prevents convergence

- Artifact feedback: Error messages guide improvements

- Template stochasticity: Randomized prompts break patterns

Manual interventions:

- Increase `num_diverse_programs` for more exploration

- Add custom feature dimensions to diversify search

- Use template variations to randomize prompts

- Adjust migration intervals for more cross-pollination

📈 How do I measure success?

Multiple success metrics:

- Primary Metric: Your evaluator's `combined_score` or metric average

- Convergence: Best score improvement over time

- Diversity: MAP-Elites grid coverage

- Efficiency: Iterations to reach target performance

- Robustness: Performance across different test cases

Use the visualizer to track all metrics in real-time and identify when evolution has converged.
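One simple way to decide that evolution has converged is to check whether the best score has improved by less than a small tolerance over a window of recent iterations. In the sketch below, `has_converged`, `window`, and `min_delta` are illustrative names and defaults, not OpenEvolve settings.

```python
def has_converged(best_scores, window=50, min_delta=1e-3):
    """Return True if the best score improved by less than min_delta
    over the last `window` iterations.

    best_scores: best-so-far score after each iteration (non-decreasing).
    """
    if len(best_scores) <= window:
        return False  # not enough history yet
    return best_scores[-1] - best_scores[-1 - window] < min_delta


history = [0.50, 0.62, 0.70, 0.71] + [0.7105] * 60
print(has_converged(history, window=50))  # True
print(has_converged(history[:10], window=50))  # False: too little history
```

A plateau is a signal to try the manual interventions above (more diverse programs, new feature dimensions) rather than proof that no better program exists.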

Thanks to all our amazing contributors who make OpenEvolve possible!

We welcome contributions! Here's how to get started:

- 🍴 Fork the repository

- 🌿 Create your feature branch: `git checkout -b feat-amazing-feature`

- ✨ Add your changes and tests

- ✅ Test everything: `python -m unittest discover tests`

- 📝 Commit with a clear message

- 🚀 Push and create a Pull Request

New to open source? Check out our Contributing Guide and look for `good-first-issue` labels!

Articles & Blog Posts About OpenEvolve:

- Towards Open Evolutionary Agents - Evolution of coding agents and the open-source movement

- OpenEvolve: GPU Kernel Discovery - Automated discovery of optimized GPU kernels

- OpenEvolve: Evolutionary Coding with LLMs - Introduction to evolutionary algorithm discovery using large language models

If you use OpenEvolve in your research, please cite:

```bibtex
@software{openevolve,
  title = {OpenEvolve: an open-source evolutionary coding agent},
  author = {Asankhaya Sharma},
  year = {2025},
  publisher = {GitHub},
  url = {https://github.com/codelion/openevolve}
}
```
