
Unlock the potential of latent diffusion models with MNIST! 🚀 Dive into reconstructing and generating digits using cutting-edge techniques like Autoencoders with Channel Attention Blocks and DDPMs. Perfect for enthusiasts of computer vision, deep learning, and generative modeling! 🌌✨

MahanVeisi8/Latent-Diffusion-MNIST-DDPM-using-Autoencoder


Welcome to this project exploring Diffusion Models on the MNIST dataset! 🚀

This repository focuses on generating and reconstructing handwritten digits by integrating:

- Autoencoders with Channel Attention Blocks (CABs)

- Denoising Diffusion Probabilistic Models (DDPMs) built on a U-Net

This project reconstructs MNIST digits by encoding them into a latent space, progressively denoising those latents with a Diffusion Model, and decoding the result back into images.

- 🧠 Latent Space Representations: attention mechanisms for better feature extraction.

- 🌀 Diffusion Process: forward and reverse diffusion to model the data distribution.

- 📊 Visualization: performance monitored through SSIM/PSNR scores and latent-space scatter plots.

- The encoder compresses MNIST digits into latent representations using convolutional layers and attention.

- The decoder reconstructs the digits from their latent representations.
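A minimal sketch of such an autoencoder, assuming PyTorch, 28×28 single-channel inputs, and a hypothetical latent shape of 4×7×7. The `LatentAutoencoder` name, the layer widths, and where the attention layers sit are illustrative choices, not the repository's exact architecture. The channel-attention layer repeats the `CALayer` pattern from this README so the block runs on its own.

```python
import torch
import torch.nn as nn


class CALayer(nn.Module):
    """Channel attention: squeeze (global avg pool), then excite (1x1 convs + sigmoid)."""

    def __init__(self, channel, reduction=16, bias=False):
        super().__init__()
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        self.conv_du = nn.Sequential(
            nn.Conv2d(channel, channel // reduction, 1, bias=bias),
            nn.ReLU(inplace=True),
            nn.Conv2d(channel // reduction, channel, 1, bias=bias),
            nn.Sigmoid(),
        )

    def forward(self, x):
        return x * self.conv_du(self.avg_pool(x))


class LatentAutoencoder(nn.Module):
    """Hypothetical conv autoencoder: 1x28x28 image <-> latent_ch x 7 x 7 latent."""

    def __init__(self, latent_ch=4):
        super().__init__()
        self.encoder = nn.Sequential(
            nn.Conv2d(1, 32, 3, stride=2, padding=1),   # 28 -> 14
            nn.ReLU(inplace=True),
            nn.Conv2d(32, 64, 3, stride=2, padding=1),  # 14 -> 7
            nn.ReLU(inplace=True),
            CALayer(64),                                # reweight feature channels
            nn.Conv2d(64, latent_ch, 1),                # project to latent channels
        )
        self.decoder = nn.Sequential(
            nn.Conv2d(latent_ch, 64, 1),
            nn.ReLU(inplace=True),
            CALayer(64),
            nn.ConvTranspose2d(64, 32, 4, stride=2, padding=1),  # 7 -> 14
            nn.ReLU(inplace=True),
            nn.ConvTranspose2d(32, 1, 4, stride=2, padding=1),   # 14 -> 28
            nn.Sigmoid(),                                        # pixel values in (0, 1)
        )

    def forward(self, x):
        z = self.encoder(x)
        return self.decoder(z), z
```

Calling `recon, z = LatentAutoencoder()(torch.rand(8, 1, 28, 28))` yields a latent `z` of shape `[8, 4, 7, 7]` and a reconstruction of shape `[8, 1, 28, 28]`; the diffusion model would then operate on `z` rather than on raw pixels.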

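Each CAB (implemented as `CALayer`) reweights the channels of a feature map by:

- 🌐 Global Average Pooling, which collapses each channel's spatial map into a single descriptor value.

- 🔄 Two 1×1 Conv2D Layers, which shrink the channel dimension by a `reduction` factor and then restore it, with a ReLU in between.

- ✨ Sigmoid Activation, which turns the result into per-channel weights in (0, 1) that rescale the input feature map.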

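```python
import torch.nn as nn


class CALayer(nn.Module):
    def __init__(self, channel, reduction=16, bias=False):
        super(CALayer, self).__init__()
        # Squeeze: global average pooling -> one value per channel, shape (B, C, 1, 1)
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        # Excitation: 1x1 convs reduce then restore channels; sigmoid yields weights in (0, 1)
        self.conv_du = nn.Sequential(
            nn.Conv2d(channel, channel // reduction, 1, bias=bias),
            nn.ReLU(inplace=True),
            nn.Conv2d(channel // reduction, channel, 1, bias=bias),
            nn.Sigmoid(),
        )

    def forward(self, x):
        y = self.avg_pool(x)
        y = self.conv_du(y)
        # Rescale each input channel by its attention weight
        return x * y
```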

- 🌪️ Forward Diffusion gradually adds Gaussian noise to the latent representation over many timesteps.

- 🌟 Reverse Diffusion predicts and removes that noise step by step, recovering a clean latent that the decoder turns back into an image.
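The forward process can be sketched as follows, assuming the linear β schedule from the original DDPM paper (β rising from 1e-4 to 0.02 over T = 1000 steps) with α_t = 1 − β_t. The schedule values and the `make_schedule`/`q_sample` names are assumptions, not necessarily what this repository uses. Because the per-step noise terms combine into a single Gaussian, a clean latent can be noised to any step t in one shot via x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε, where ᾱ_t is the running product of the α values.

```python
import torch


def make_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule (assumed); returns betas, alphas and cumulative alpha products."""
    betas = torch.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    alpha_bars = torch.cumprod(alphas, dim=0)
    return betas, alphas, alpha_bars


def q_sample(z0, t, alpha_bars, noise=None):
    """Noise clean latents z0 directly to timesteps t (0-indexed tensor of shape [B])."""
    if noise is None:
        noise = torch.randn_like(z0)
    # Reshape the per-sample coefficient so it broadcasts over all latent dimensions
    a_bar = alpha_bars[t].view(-1, *([1] * (z0.dim() - 1)))
    return a_bar.sqrt() * z0 + (1.0 - a_bar).sqrt() * noise, noise
```

Since ᾱ_t shrinks toward zero as t grows, latents at late timesteps are almost pure noise, which is what lets sampling start from a standard Gaussian.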

The core of the diffusion model is a U-Net, enhanced with:

- Residual Blocks

- Attention Mechanisms

- Time Embeddings for each diffusion step
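One common way to build the time embeddings and residual blocks listed above is sketched below. It assumes the sinusoidal timestep embedding used in the Transformer and DDPM literature, and a residual block that adds a projected time embedding to its feature map; `timestep_embedding` and `TimeResBlock` are hypothetical names, and the repository's U-Net may wire these differently.

```python
import math

import torch
import torch.nn as nn


def timestep_embedding(t, dim):
    """Sinusoidal embedding of integer timesteps t (shape [B]) -> [B, dim]; dim must be even."""
    half = dim // 2
    freqs = torch.exp(-math.log(10000.0) * torch.arange(half, dtype=torch.float32) / half)
    args = t.float()[:, None] * freqs[None, :]
    return torch.cat([torch.sin(args), torch.cos(args)], dim=1)


class TimeResBlock(nn.Module):
    """Residual conv block whose features are shifted by a projected time embedding."""

    def __init__(self, in_ch, out_ch, t_dim):
        super().__init__()
        self.conv1 = nn.Conv2d(in_ch, out_ch, 3, padding=1)
        self.conv2 = nn.Conv2d(out_ch, out_ch, 3, padding=1)
        self.time_proj = nn.Linear(t_dim, out_ch)
        self.act = nn.SiLU()
        # 1x1 conv on the skip path when the channel counts differ
        self.skip = nn.Conv2d(in_ch, out_ch, 1) if in_ch != out_ch else nn.Identity()

    def forward(self, x, t_emb):
        h = self.act(self.conv1(x))
        h = h + self.time_proj(t_emb)[:, :, None, None]  # broadcast over H and W
        h = self.act(self.conv2(h))
        return h + self.skip(x)
```

Injecting the embedding inside every block lets a single set of U-Net weights behave differently at each noise level, which is why the diffusion step must be fed to the network at all.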

The project produces several visualizations that track training progress and model performance.

A side-by-side comparison of original vs. reconstructed images. High SSIM and PSNR scores indicate that the reconstructions closely match the originals.

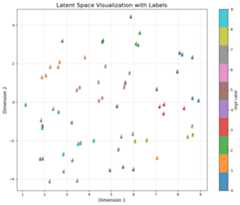
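PSNR is simple enough to compute directly as 10 · log10(MAX² / MSE). The sketch below assumes images scaled to [0, 1], so the peak value MAX is 1; the `psnr` helper is hypothetical. For SSIM, an existing implementation such as scikit-image's `skimage.metrics.structural_similarity` is usually preferable to a hand-rolled one.

```python
import torch


def psnr(original, reconstructed, max_val=1.0, eps=1e-10):
    """Per-image PSNR in dB for batches shaped [B, C, H, W]."""
    mse = ((original - reconstructed) ** 2).flatten(1).mean(dim=1)
    # eps avoids division by zero for a pixel-perfect reconstruction
    return 10.0 * torch.log10(max_val ** 2 / (mse + eps))
```

For example, if every pixel is off by 0.1, the MSE is 0.01 and the PSNR is 10 · log10(100) = 20 dB; higher values mean smaller reconstruction error.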

Projection of the latent space using t-SNE, for a single batch and for the full test dataset.

(Figure panels: Latent Space with Labels (One Batch); Latent Space (Full Test Dataset).)
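A sketch of such a projection using scikit-learn's `TSNE`, assuming the encoder latents have already been collected into an array (for instance via `z.detach().cpu().numpy()`) and are flattened to one vector per image. The `project_latents` helper and the perplexity values are illustrative choices; perplexity must stay below the number of samples.

```python
import numpy as np
from sklearn.manifold import TSNE


def project_latents(latents, perplexity=30.0, seed=0):
    """Flatten [N, ...] latents and embed them in 2-D with t-SNE."""
    flat = np.asarray(latents, dtype=np.float32).reshape(len(latents), -1)
    tsne = TSNE(n_components=2, perplexity=perplexity, init="pca", random_state=seed)
    return tsne.fit_transform(flat)  # shape [N, 2]
```

The resulting 2-D coordinates can be passed to a scatter plot and colored by digit label; well-separated clusters suggest the encoder has learned class-discriminative latents.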

Loss plot: the diffusion model's training loss tracked over epochs.
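In the standard DDPM formulation, the loss being tracked is the mean squared error between the noise ε added to a latent and the network's prediction of it. A single training step under that assumption is sketched below; `diffusion_loss` and `TinyDenoiser` are hypothetical (the latter is a stand-in for the U-Net that ignores the timestep for brevity), and the linear schedule and random latents are placeholders.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def diffusion_loss(denoiser, z0, alpha_bars):
    """Simplified DDPM objective: MSE between the true and the predicted noise."""
    B = z0.shape[0]
    t = torch.randint(0, len(alpha_bars), (B,), device=z0.device)  # random step per sample
    noise = torch.randn_like(z0)
    a_bar = alpha_bars[t].view(B, *([1] * (z0.dim() - 1)))
    zt = a_bar.sqrt() * z0 + (1 - a_bar).sqrt() * noise  # forward-noised latent
    return F.mse_loss(denoiser(zt, t), noise)


class TinyDenoiser(nn.Module):
    """Hypothetical stand-in for the U-Net; ignores t for brevity."""

    def __init__(self, ch=4):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(ch, 32, 3, padding=1), nn.SiLU(), nn.Conv2d(32, ch, 3, padding=1)
        )

    def forward(self, zt, t):
        return self.net(zt)


alpha_bars = torch.cumprod(1 - torch.linspace(1e-4, 0.02, 1000), dim=0)
model = TinyDenoiser()
opt = torch.optim.Adam(model.parameters(), lr=1e-3)
z0 = torch.randn(16, 4, 7, 7)  # placeholder for a batch of encoder latents
loss = diffusion_loss(model, z0, alpha_bars)
opt.zero_grad()
loss.backward()
opt.step()
```

Averaging this per-batch loss over each epoch gives the kind of curve shown in a loss plot.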

Images progress from noisy states (left) to denoised outputs (right), demonstrating the stepwise denoising process.

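The project applies unconditional latent diffusion: the classic DDPM formulation, run in the autoencoder's latent space instead of pixel space. Below is a simplified breakdown of the key concepts.

Forward Process (Adding Noise):

$$x_t = \sqrt{\alpha_t}\,x_{t-1} + \sqrt{1 - \alpha_t}\,\epsilon$$

Where: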

- $x_t$ is the latent at timestep $t$

- $\alpha_t$ is the noise-schedule coefficient at step $t$

- $\epsilon \sim \mathcal{N}(0, I)$ is the random Gaussian noise
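Assuming the standard DDPM per-step update with these symbols, $x_t = \sqrt{\alpha_t}\,x_{t-1} + \sqrt{1-\alpha_t}\,\epsilon$, consecutive steps can be merged, because a sum of independent Gaussian noise terms is again Gaussian. Writing $\epsilon_t, \epsilon_{t-1}, \bar{\epsilon}$ for independent standard normal noise and $\bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$ (symbols introduced here for the derivation):

$$
\begin{aligned}
x_t &= \sqrt{\alpha_t}\left(\sqrt{\alpha_{t-1}}\,x_{t-2} + \sqrt{1-\alpha_{t-1}}\,\epsilon_{t-1}\right) + \sqrt{1-\alpha_t}\,\epsilon_t \\
    &= \sqrt{\alpha_t\alpha_{t-1}}\,x_{t-2} + \sqrt{1-\alpha_t\alpha_{t-1}}\,\bar{\epsilon} \\
    &\;\;\vdots \\
    &= \sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon
\end{aligned}
$$

The second line holds because the noise variances add: $\alpha_t(1-\alpha_{t-1}) + (1-\alpha_t) = 1 - \alpha_t\alpha_{t-1}$. This closed form is what allows training to jump from a clean latent $x_0$ straight to any step $t$ without simulating the whole chain.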

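Reverse Process (Denoising): the U-Net predicts the noise added at each step, and removing it iteratively from the last timestep back to the first recovers a clean latent, which the decoder maps back to a digit image.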
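Assuming the standard DDPM sampler (noise-prediction parameterization with $\sigma_t^2 = 1 - \alpha_t$), each reverse step computes

$$x_{t-1} = \frac{1}{\sqrt{\alpha_t}}\left(x_t - \frac{1-\alpha_t}{\sqrt{1-\bar{\alpha}_t}}\,\epsilon_\theta(x_t, t)\right) + \sigma_t z, \qquad z \sim \mathcal{N}(0, I),$$

where $\epsilon_\theta$ is the U-Net's noise prediction and $\bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$. The loop below sketches this for unconditional generation in latent space; `sample_latents` and the `denoiser` callable are hypothetical, and the linear schedule is an assumption.

```python
import torch


@torch.no_grad()
def sample_latents(denoiser, shape, T=1000, beta_start=1e-4, beta_end=0.02, generator=None):
    """DDPM ancestral sampling from pure noise down to a clean latent (sigma_t^2 = beta_t)."""
    betas = torch.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    alpha_bars = torch.cumprod(alphas, dim=0)
    x = torch.randn(shape, generator=generator)  # x_T ~ N(0, I)
    for t in reversed(range(T)):  # 0-indexed: t = T-1, ..., 0
        t_batch = torch.full((shape[0],), t, dtype=torch.long)
        eps = denoiser(x, t_batch)  # predicted noise epsilon_theta(x_t, t)
        mean = (x - (1 - alphas[t]) / (1 - alpha_bars[t]).sqrt() * eps) / alphas[t].sqrt()
        if t > 0:
            x = mean + betas[t].sqrt() * torch.randn(shape, generator=generator)
        else:
            x = mean  # no noise is added at the final step
    return x
```

With a trained U-Net and the autoencoder's decoder, generating digits would then look like `images = decoder(sample_latents(unet, (16, 4, 7, 7)))`, where the latent shape must match whatever the encoder produces.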

Here are a few ideas to extend this project:

- 🧱 Larger U-Net Models for higher-quality image synthesis

- 🔄 Dynamic Diffusion Schedules to speed up convergence

- 📈 Experiment with Other Datasets like Fashion-MNIST or CIFAR-10
